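Kubernetes (k8s) core concepts and commands

Namespace (ns)

k8s resources can be created in two ways: imperatively on the command line, or declaratively from a YAML file.

What is a namespace

In k8s, a namespace is a mechanism for logically grouping and isolating cluster resources. It divides one physical cluster into several virtual clusters. Resource names only need to be unique within a namespace, so resources in different namespaces do not collide. Different workloads (web, database, message middleware) can be deployed into different namespaces to keep them apart, and each namespace can be given a resource quota that caps its CPU, memory, and other resource usage. A namespace isolates resources by scoping and labeling them; it does not isolate the network.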

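The main uses of a namespace are:

- Logical grouping: a namespace groups cluster resources logically, so that different applications or teams can manage their own resources independently in the same cluster without confusion or conflict.

- Resource isolation: resources in different namespaces are isolated from each other, and resources with the same name can exist in different namespaces. This keeps applications and teams from interfering with each other and improves security and stability.

- Access control: access control can be scoped to a namespace. Roles and role bindings control who may access the resources in each namespace, which allows fine-grained permissions.

- Resource quotas: each namespace can be given a resource quota that limits how much it may consume, so that one application or team cannot starve the others.

- Monitoring and logging: k8s resources can be monitored and logged per namespace, which makes analysis and troubleshooting easier.

With namespaces, k8s provides a flexible way to isolate and manage resources: a cluster can be divided into several virtual clusters with logical grouping and isolation, which improves security, manageability, and scalability.

Namespaces are well suited to separating resources created by different users. Every workload added to a k8s cluster must live in a namespace; when none is specified, the default namespace is used.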
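The quota and access-control points above can be written as manifests. The following is a minimal sketch, not part of the original walkthrough: the namespace team-a, the user jane, and all object names and limit values are hypothetical, and the namespace is assumed to exist already.

```yaml
# Hypothetical example: cap what the namespace "team-a" may consume.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: team-a-quota
  namespace: team-a
spec:
  hard:
    requests.cpu: "2"       # sum of CPU requests across all pods
    requests.memory: 4Gi    # sum of memory requests across all pods
    limits.cpu: "4"         # sum of CPU limits across all pods
    limits.memory: 8Gi      # sum of memory limits across all pods
    pods: "20"              # maximum number of pods in the namespace
---
# Hypothetical example: a Role that allows read-only access to pods in team-a.
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: team-a
rules:
  - apiGroups: [""]         # "" is the core API group
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
# Bind the Role to a (hypothetical) user named jane, in this namespace only.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods
  namespace: team-a
subjects:
  - kind: User
    name: jane
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```

Once a quota on CPU or memory is active, pods created in that namespace need to declare the corresponding requests and limits, otherwise their creation is rejected.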

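Initial namespaces

k8s starts with four initial namespaces:

- default: k8s includes this namespace so that you can start using a new cluster without first creating a namespace.

- kube-node-lease: this namespace holds the Lease objects associated with each node. Node leases let the kubelet send heartbeats so that the control plane can detect node failure.

- kube-public: this namespace is readable by all clients, including unauthenticated ones. It is mostly reserved for cluster use, for resources that should be visible and readable across the whole cluster. The public aspect of this namespace is only a convention, not a requirement.

- kube-system: this namespace is for objects created by the k8s system.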

Basic namespace commands

kubectl get ns

[root@k8s-master ~]# kubectl get ns

NAME STATUS AGE

default Active 3h18m

kube-node-lease Active 3h18m

kube-public Active 3h18m

kube-system Active 3h18m

kubernetes-dashboard Active 128m

kubectl create ns <namespace-name>

[root@k8s-master ~]# kubectl create ns yigongsui

namespace/yigongsui created

[root@k8s-master ~]# kubectl get ns

NAME STATUS AGE

default Active 5h25m

kube-node-lease Active 5h25m

kube-public Active 5h25m

kube-system Active 5h25m

kubernetes-dashboard Active 4h16m

yigongsui Active 14s

kubectl delete ns <namespace-name>

[root@k8s-master ~]# kubectl delete ns yigongsui

namespace "yigongsui" deleted

[root@k8s-master ~]# kubectl get ns

NAME STATUS AGE

default Active 5h33m

kube-node-lease Active 5h33m

kube-public Active 5h33m

kube-system Active 5h33m

kubernetes-dashboard Active 4h24m

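Create a file named my-ns.yaml. The file name is arbitrary, but the content must be valid YAML:

apiVersion: v1
kind: Namespace
metadata:
  name: my-ns

Two commands can create the resource from this file:

kubectl apply -f my-ns.yaml

kubectl create -f my-ns.yaml

The difference:

- apply: creates or updates. If the namespace already exists and the file has changed, the namespace is updated.

- create: only creates. If the namespace already exists, the command fails with an error.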

# Edit the YAML file

[root@k8s-master k8s]# vim my-ns.yaml

# Contents of the YAML file

[root@k8s-master k8s]# cat my-ns.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: my-ns

# Create the namespace from the YAML file

[root@k8s-master k8s]# kubectl apply -f my-ns.yaml

namespace/my-ns created

[root@k8s-master k8s]# kubectl get ns

NAME STATUS AGE

default Active 5h39m

kube-node-lease Active 5h39m

kube-public Active 5h39m

kube-system Active 5h39m

kubernetes-dashboard Active 4h30m

my-ns Active 10s

[root@k8s-master k8s]# kubectl create -f my-ns.yaml

Error from server (AlreadyExists): error when creating "my-ns.yaml": namespaces "my-ns" already exists

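A YAML resource manifest is the declarative way to manage k8s resources. The same file can also be used to delete the resource it describes: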

kubectl delete -f my-ns.yaml

[root@k8s-master k8s]# kubectl delete -f my-ns.yaml

namespace "my-ns" deleted

kubectl get ns my-ns -o yaml

[root@k8s-master k8s]# kubectl get ns my-ns -o yaml

apiVersion: v1
kind: Namespace
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Namespace","metadata":{"annotations":{},"name":"my-ns"}}
  creationTimestamp: "2024-03-24T14:12:23Z"
  labels:
    kubernetes.io/metadata.name: my-ns
  name: my-ns
  resourceVersion: "33348"
  uid: 36e5f296-7213-46b3-9a89-fb21354fe151
spec:
  finalizers:
  - kubernetes
status:
  phase: Active

apiVersion: the API group and version of the resource

kind: the resource type

metadata: the resource metadata

spec: the desired properties of the resource

apiVersion: apps/v1            # API group and version
kind: Deployment               # resource type; a Deployment is a replica controller. Other types include Job, Ingress, Service, and so on
metadata:                      # resource metadata, such as name, namespace, and labels
  name: nginx-deployment       # resource name; must be unique within the namespace
  namespace: kube-public       # namespace the resource lives in
  labels:                      # labels on the resource
    app: nginx
    name: test01
spec:                          # parameters the resource needs, for example whether to restart a container when it fails
  replicas: 3                  # number of replicas
  selector:                    # label selector
    matchLabels:               # labels to match
      app: nginx               # must match the labels in .spec.template.metadata.labels
  template:                    # pod template; every replica is created from this template
    metadata:
      labels:                  # labels applied to each pod replica; must match .spec.selector.matchLabels
        app: nginx
    spec:
      containers:              # container definitions
      - name: nginx            # container name; each "- name:" entry defines one container
        image: nginx:1.21      # image and tag used by the container
        ports:
        - name: http
          containerPort: 80    # port the container listens on
        - name: https
          containerPort: 443

pod

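What is a pod

In k8s, a pod is the smallest deployable and manageable unit of compute. It is the resource object that runs containerized applications on k8s and hosts the running instances of an application; the other resource objects exist to support or extend what pods do.

A pod is a logical host made up of one or more containers that share a network namespace, storage volumes, and other dependencies. Sharing these resources makes it easier for the containers to communicate, share data, and work together.

All containers in a pod run on the same node and share one IP address and port space. They can talk to each other over localhost without going through the pod network, which makes container-to-container communication simple and efficient.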

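Pods also provide some additional features, for example:

- Shared volumes: containers in a pod can mount the same volume to share data. Whether the data outlives the pod depends on the volume type.

- Shared network namespace: containers in a pod share one network namespace and can communicate directly over localhost.

- Lifecycle management: a pod defines the restart policy and termination behavior of its containers, and init containers run in order before the application containers start.

- Scheduling: the pod is the smallest unit handled by the k8s scheduler, which places each pod (with all of its containers) on a node in the cluster.

Note that pods are ephemeral: they may be created, deleted, or recreated at any time, so a single pod provides neither persistence nor reliability. For high availability and fault tolerance, use k8s resources such as replicaSet and deployment to manage pod replicas.

In short, the pod is a basic k8s concept that hosts the running instances of an application. It consists of one or more containers that share a network namespace and storage volumes, and it provides inter-container communication, shared storage, and lifecycle management.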
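The shared-volume point above can be illustrated with a two-container pod. This is a minimal sketch under stated assumptions: the pod name, the container names, the busybox image, and the emptyDir volume are all chosen for illustration and do not appear in the original walkthrough.

```yaml
# Hypothetical example: two containers in one pod sharing a volume.
apiVersion: v1
kind: Pod
metadata:
  name: shared-volume-demo
spec:
  volumes:
    - name: shared-data
      emptyDir: {}            # scratch volume; it lives only as long as the pod
  containers:
    - name: web
      image: nginx
      volumeMounts:
        - name: shared-data
          mountPath: /usr/share/nginx/html   # nginx serves files from here
    - name: writer
      image: busybox
      # Rewrites index.html every 5 seconds in the shared volume.
      command: ["sh", "-c", "while true; do date > /data/index.html; sleep 5; done"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
```

Because both containers mount the same volume, the file written by writer is what web serves. An emptyDir is removed together with the pod, so this shows sharing rather than persistence.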

Good to know:

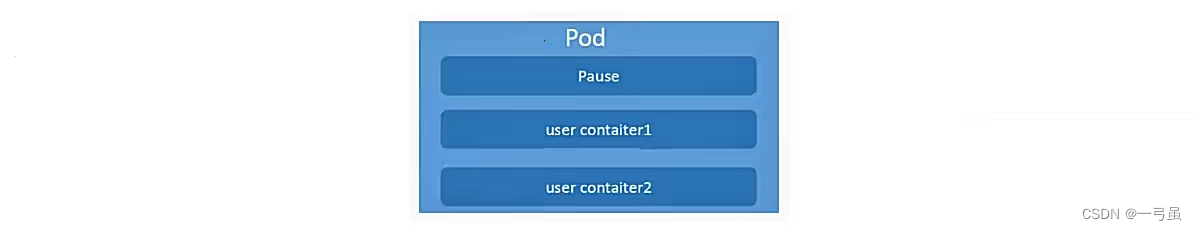

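Every pod has a special "root container" called the pause container. The image used by the pause container is part of the k8s platform.

k8s does not manage containers directly; it manages pods.

A pod is designed to hold multiple processes: it can run several applications, that is, several containers, with each container running one application.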

Basic pod commands

# kubectl run <pod-name> --image=<image>

kubectl run my-nginx --image=nginx

[root@k8s-master k8s]# kubectl run my-nginx --image=nginx

pod/my-nginx created

kubectl get pod

Options:

-A: same as --all-namespaces; lists pods in all namespaces

-n: same as --namespace=<namespace>; lists pods in the given namespace

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

my-nginx 1/1 Running 0 88s

# kubectl describe pod <pod-name>

kubectl describe pod my-nginx

[root@k8s-master k8s]# kubectl describe pod my-nginx

Name: my-nginx

Namespace: default

Priority: 0

Service Account: default

Node: k8s-node1/192.168.0.2

Start Time: Sun, 24 Mar 2024 23:02:15 +0800

Labels: run=my-nginx

Annotations: cni.projectcalico.org/containerID: c16d009c2f2fcde906fd693d565d13086c4c2b5ad268d92b0cab4eff4cef1059

cni.projectcalico.org/podIP: 192.169.36.67/32

cni.projectcalico.org/podIPs: 192.169.36.67/32

Status: Running

IP: 192.169.36.67

IPs:

IP: 192.169.36.67

Containers:

my-nginx:

Container ID: docker://97119d84370669ae58a833ec2dbd094ba8ed5891abf884b771366beb8875bd8b

Image: nginx

Image ID: docker-pullable://nginx@sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31

Port: <none>

Host Port: <none>

State: Running

Started: Sun, 24 Mar 2024 23:02:26 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-lh4lf (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-lh4lf:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 5m30s default-scheduler Successfully assigned default/my-nginx to k8s-node1

Normal Pulling 5m30s kubelet Pulling image "nginx"

Normal Pulled 5m20s kubelet Successfully pulled image "nginx" in 9.882s (9.882s including waiting)

Normal Created 5m19s kubelet Created container my-nginx

Normal Started 5m19s kubelet Started container my-nginx

# kubectl logs <pod-name>

kubectl logs my-nginx

[root@k8s-master k8s]# kubectl logs my-nginx

/docker-entrypoint.sh: /docker-entrypoint.d/ is not empty, will attempt to perform configuration

/docker-entrypoint.sh: Looking for shell scripts in /docker-entrypoint.d/

/docker-entrypoint.sh: Launching /docker-entrypoint.d/10-listen-on-ipv6-by-default.sh

10-listen-on-ipv6-by-default.sh: info: Getting the checksum of /etc/nginx/conf.d/default.conf

10-listen-on-ipv6-by-default.sh: info: Enabled listen on IPv6 in /etc/nginx/conf.d/default.conf

/docker-entrypoint.sh: Launching /docker-entrypoint.d/20-envsubst-on-templates.sh

/docker-entrypoint.sh: Launching /docker-entrypoint.d/30-tune-worker-processes.sh

/docker-entrypoint.sh: Configuration complete; ready for start up

2024/03/24 15:02:26 [notice] 1#1: using the "epoll" event method

2024/03/24 15:02:26 [notice] 1#1: nginx/1.21.5

2024/03/24 15:02:26 [notice] 1#1: built by gcc 10.2.1 20210110 (Debian 10.2.1-6)

2024/03/24 15:02:26 [notice] 1#1: OS: Linux 3.10.0-1160.108.1.el7.x86_64

2024/03/24 15:02:26 [notice] 1#1: getrlimit(RLIMIT_NOFILE): 1048576:1048576

2024/03/24 15:02:26 [notice] 1#1: start worker processes

2024/03/24 15:02:26 [notice] 1#1: start worker process 31

2024/03/24 15:02:26 [notice] 1#1: start worker process 32

2024/03/24 15:02:26 [notice] 1#1: start worker process 33

2024/03/24 15:02:26 [notice] 1#1: start worker process 34

# kubectl delete pod <pod-name>

kubectl delete pod my-nginx

[root@k8s-master k8s]# kubectl delete pod my-nginx

pod "my-nginx" deleted

[root@k8s-master k8s]# kubectl get pod

No resources found in default namespace.

Create a file named my-pod.yaml with the following content:

apiVersion: v1
kind: Pod
metadata:
  name: my-nginx
  labels:
    run: my-nginx
spec:
  containers:
  - image: nginx
    name: nginx01

This defines a pod named my-nginx that runs one container named nginx01 from the nginx image.

After saving the file, run:

kubectl apply -f my-pod.yaml

[root@k8s-master k8s]# vim my-pod.yaml

[root@k8s-master k8s]# cat my-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: my-nginx
  labels:
    run: my-nginx
spec:
  containers:
  - image: nginx
    name: nginx01

[root@k8s-master k8s]# kubectl apply -f my-pod.yaml

pod/my-nginx created

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

my-nginx 1/1 Running 0 2m

[root@k8s-master k8s]# kubectl describe pod my-nginx

Name: my-nginx

Namespace: default

Priority: 0

Service Account: default

Node: k8s-node1/192.168.0.2

Start Time: Sun, 24 Mar 2024 23:26:05 +0800

Labels: run=my-nginx

Annotations: cni.projectcalico.org/containerID: af662227a2724395f76f3459401c0a1293d6453f9501a3198ad276ff49f93223

cni.projectcalico.org/podIP: 192.169.36.68/32

cni.projectcalico.org/podIPs: 192.169.36.68/32

Status: Running

IP: 192.169.36.68

IPs:

IP: 192.169.36.68

Containers:

nginx01:

Container ID: docker://4ea31b6af6e594aa9c502133c9c5034ce15d6c96a1c5d1ccb86f7d2189b147d1

Image: nginx

Image ID: docker-pullable://nginx@sha256:0d17b565c37bcbd895e9d92315a05c1c3c9a29f762b011a10c54a66cd53c9b31

Port: <none>

Host Port: <none>

State: Running

Started: Sun, 24 Mar 2024 23:26:06 +0800

Ready: True

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-khgxd (ro)

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

kube-api-access-khgxd:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 2m49s default-scheduler Successfully assigned default/my-nginx to k8s-node1

Normal Pulling 2m49s kubelet Pulling image "nginx"

Normal Pulled 2m48s kubelet Successfully pulled image "nginx" in 237ms (237ms including waiting)

Normal Created 2m48s kubelet Created container nginx01

Normal Started 2m48s kubelet Started container nginx01

# In k8s, every pod is assigned its own IP

# Pass -o wide to kubectl get pod to show more columns

kubectl get pod -o wide

[root@k8s-master k8s]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx 1/1 Running 0 12m 192.169.36.68 k8s-node1 <none> <none>

With this IP address you can reach the nginx01 container in my-nginx

# HTTP is the default protocol, so the port defaults to 80, which is also nginx's default port

curl 192.169.36.68 or curl 192.169.36.68:80

[root@k8s-master k8s]# curl 192.169.36.68

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

# kubectl exec -it <pod-name> -- /bin/bash

kubectl exec -it my-nginx -- /bin/bash

Options:

-c: selects which container in the pod to enter

[root@k8s-master k8s]# kubectl exec -it my-nginx -c nginx01 /bin/bash

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

root@my-nginx:/# cd /usr/share/nginx/html/

root@my-nginx:/usr/share/nginx/html# ls

50x.html index.html

root@my-nginx:/usr/share/nginx/html# echo "hello my-nginx" > index.html

root@my-nginx:/usr/share/nginx/html# exit

exit

[root@k8s-master k8s]# curl 192.169.36.68

hello my-nginx

Create a file named my-pod2.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: my-pod
  labels:
    run: my-pod
spec:
  containers:
  - name: nginx1
    image: nginx
  - name: tomcat1
    image: tomcat:8.5.92

[root@k8s-master k8s]# kubectl apply -f my-pod2.yaml

pod/my-pod created

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

my-nginx 1/1 Running 0 28m

my-pod 2/2 Running 0 2m41s

Access the two containers in the pod, nginx1 and tomcat1

# Look up the pod IP

[root@k8s-master k8s]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

my-nginx 1/1 Running 0 29m 192.169.36.68 k8s-node1 <none> <none>

my-pod 2/2 Running 0 3m53s 192.169.36.69 k8s-node1 <none> <none>

# Access nginx1

[root@k8s-master k8s]# curl 192.169.36.69:80

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

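# Access tomcat1 on port 8080 of the same pod IP (the 404 page is served by Tomcat, which shows the container is reachable)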

[root@k8s-master k8s]# curl 192.169.36.69:8080

<!doctype html><html lang="en"><head><title>HTTP Status 404 – Not Found</title><style type="text/css">body {font-family:Tahoma,Arial,sans-serif;} h1, h2, h3, b {color:white;background-color:#525D76;} h1 {font-size:22px;} h2 {font-size:16px;} h3 {font-size:14px;} p {font-size:12px;} a {color:black;} .line {height:1px;background-color:#525D76;border:none;}</style></head><body><h1>HTTP Status 404 – Not Found</h1><hr class="line" /><p><b>Type</b> Status Report</p><p><b>Description</b> The origin server did not find a current representation for the target resource or is not willing to disclose that one exists.</p><hr class="line" /><h3>Apache Tomcat/8.5.92</h3></body></html>

k8s architecture philosophy: there is no problem that another layer cannot solve

How a pod is created under the hood

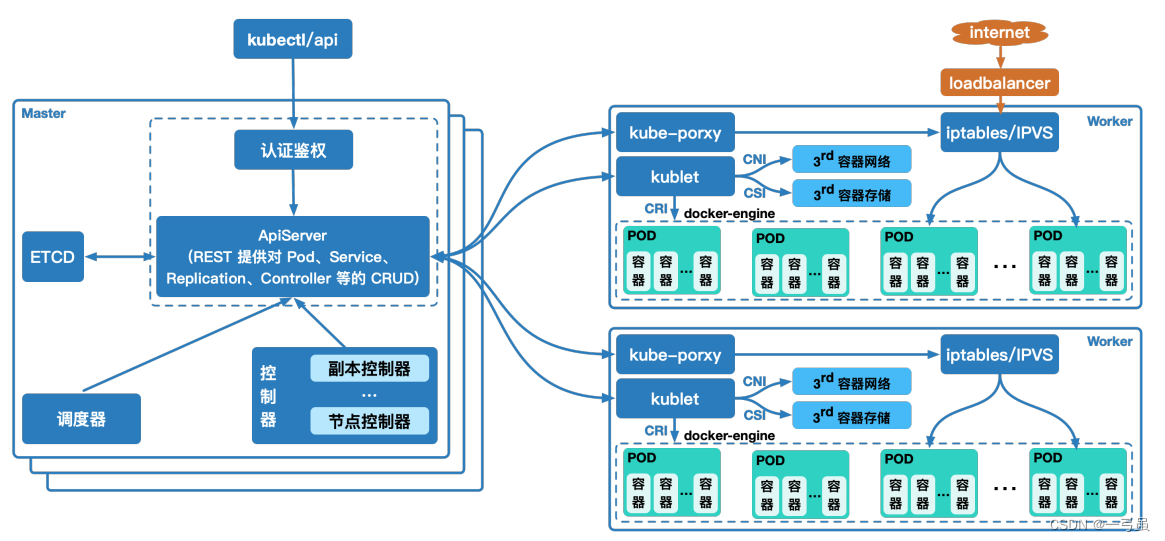

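k8s consists of many components that cooperate through the watch mechanism, which keeps them decoupled from one another. The workflow is shown in the figure.

Taking pod creation as an example:

- A cluster administrator or developer builds a REST request with kubectl or another client. The apiserver authenticates the caller (using the credentials in the kubeconfig file), authorizes the request, runs admission checks, and then writes the object data (metadata) to etcd;

- The controller manager discovers the creation request through the watch mechanism and writes the resulting pod information to etcd through the apiserver. At this point the pod is ready to be scheduled;

- The scheduler uses the watch mechanism to get the list of pods waiting to be scheduled, picks the most suitable node for each one with its scheduling algorithm (filtering and scoring), and writes the node assignment to etcd through the apiserver. The kubelet on the chosen node learns about the pod by watching the apiserver;

- After the kubelet picks up the pod, it uses the CNI interface to set up the pod's network, the CRI interface to create the pod's containers, and the CSI interface to mount the pod's storage volumes;

- Once the pod's runtime environment is in place, probes or health checks monitor whether the application containers have started. When startup completes, the pod is in the Running state and enters its running phase.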

deployment

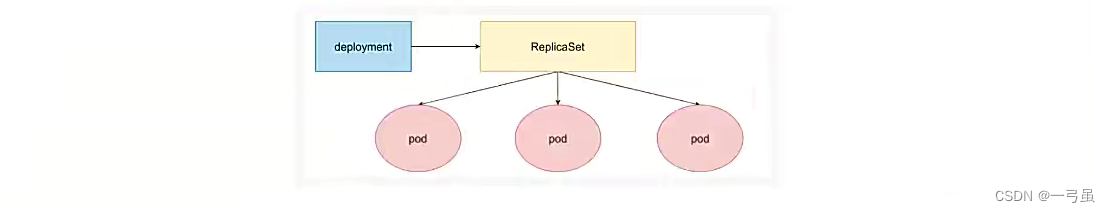

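To better handle service orchestration, k8s introduced the deployment controller in v1.2. Notably, this controller does not manage pods directly; it manages them indirectly through replicasets. In other words, a deployment manages replicasets, and a replicaset manages pods.

This makes a deployment more capable than a replicaset.

The smallest unit is the pod, and pods are what provide a service.

A deployment is how you deploy and operate those pods.

With a deployment, pods gain multiple replicas, self-healing, scaling, and similar capabilities.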

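What is a deployment

In k8s, a deployment is a resource object for defining and managing pods (although it does not manage them directly). A deployment describes declaratively how many replicas of an application are wanted, which container image they use, and other related configuration, and it automates updates and scaling of the application.

The main features of a deployment are:

- Creating and managing pods: a deployment uses a pod template to define the container image, environment variables, volume mounts, and so on, and automatically creates and manages pod instances to match the requested replica count.

- Rolling updates: a deployment can update an application without interrupting service by gradually replacing old pod instances with new ones. The update strategy, the maximum number of unavailable replicas, and similar parameters control how the rolling update behaves.

- Scaling: the replica count of a deployment can be changed at any time, and together with a HorizontalPodAutoscaler it can be adjusted automatically based on metrics such as CPU or memory utilization to follow changes in load.

- Health checks and self-healing: the pod template can define health checks for its containers. A container that fails its check is restarted, and a pod instance that fails or disappears is replaced, which keeps the application highly available.

- Revision history and rollback: a deployment records the revisions of an application as it is updated and supports rolling back to an earlier revision to recover when something goes wrong.

With a deployment you can manage and control the lifecycle of an application and automate its deployment, updates, and scaling.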
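The health-check and autoscaling features listed above are configured in manifests. The following is a minimal sketch, not part of the original walkthrough: the name probe-demo, the probe timings, and the 70% CPU target are illustrative assumptions, and the autoscaler only works if a metrics source such as metrics-server is installed in the cluster.

```yaml
# Hypothetical example: a Deployment whose containers declare health checks.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: probe-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: probe-demo
  template:
    metadata:
      labels:
        app: probe-demo
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
          resources:
            requests:
              cpu: 100m          # CPU utilization is measured against this request
          readinessProbe:        # the pod receives traffic only while this passes
            httpGet:
              path: /
              port: 80
            periodSeconds: 5
          livenessProbe:         # the container is restarted when this keeps failing
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 10
            periodSeconds: 10
---
# Hypothetical example: scale the Deployment between 2 and 5 replicas on CPU load.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: probe-demo
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: probe-demo
  minReplicas: 2
  maxReplicas: 5
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
```

The probes belong to the pod template, while automatic scaling is a separate object that points at the deployment.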

Basic deployment commands

First, delete all existing pods

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

my-nginx 1/1 Running 0 50m

my-pod 2/2 Running 0 24m

# -n selects the namespace; without it, kubectl uses the current context's namespace (default here)

[root@k8s-master k8s]# kubectl delete pod my-nginx my-pod -n default

pod "my-nginx" deleted

pod "my-pod" deleted

[root@k8s-master k8s]# kubectl get pod

No resources found in default namespace.

# kubectl create deployment <deployment-name> --image=<image>

kubectl create deployment tomcat --image=tomcat:8.5.92

Let us compare a pod created through a deployment with a pod created by kubectl run

# Created through a deployment

[root@k8s-master k8s]# kubectl create deployment tomcat --image=tomcat:8.5.92

deployment.apps/tomcat created

# Created with run

[root@k8s-master k8s]# kubectl run nginx --image=nginx

pod/nginx created

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx 1/1 Running 0 9s

tomcat-d7f8f49fc-nt5xg 1/1 Running 0 22s

Notice that the pod created through the deployment has a generated suffix appended to its name

Next, delete both pods

[root@k8s-master k8s]# kubectl delete pod nginx

pod "nginx" deleted

[root@k8s-master k8s]# kubectl delete pod tomcat-d7f8f49fc-nt5xg

pod "tomcat-d7f8f49fc-nt5xg" deleted

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

tomcat-d7f8f49fc-f54r7 1/1 Running 0 4s

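The pod named nginx was simply deleted. After tomcat-d7f8f49fc-nt5xg was deleted, however, a new pod was created in its place; its name is again tomcat plus a generated suffix, different from before

This is because pods deployed through a deployment are self-healing: as long as the deployment exists, it keeps recreating pods to match the desired replica count

To really remove the pod, delete the deployment first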

kubectl get deploy

Options:

-A: lists deployments in all namespaces

-n: lists deployments in the given namespace

[root@k8s-master k8s]# kubectl get deploy -A

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE

default tomcat 1/1 1 1 14m

kube-system calico-kube-controllers 1/1 1 1 7h54m

kube-system coredns 2/2 2 2 8h

kubernetes-dashboard dashboard-metrics-scraper 1/1 1 1 7h18m

kubernetes-dashboard kubernetes-dashboard 1/1 1 1 7h18m

[root@k8s-master k8s]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

tomcat 1/1 1 1 15m

[root@k8s-master k8s]# kubectl get deploy -n default

NAME READY UP-TO-DATE AVAILABLE AGE

tomcat 1/1 1 1 15m

kubectl delete deploy tomcat -n default

[root@k8s-master k8s]# kubectl delete deploy tomcat -n default

deployment.apps "tomcat" deleted

[root@k8s-master k8s]# kubectl get pod

No resources found in default namespace.

The pod has been deleted as well

When creating a deployment, --replicas sets the number of pods to create

# --replicas=3 sets the replica count

kubectl create deployment nginx-deploy --image=nginx --replicas=3

[root@k8s-master ~]# kubectl create deployment nginx-deploy --image=nginx --replicas=3

deployment.apps/nginx-deploy created

[root@k8s-master ~]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 3/3 3 3 43s

# 3 pods were started

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deploy-d845cc945-8xml2 1/1 Running 0 55s

nginx-deploy-d845cc945-bwjmm 1/1 Running 0 55s

nginx-deploy-d845cc945-rwbs5 1/1 Running 0 55s

# Each pod gets its own IP, and the pods are spread across nodes automatically

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-d845cc945-8xml2 1/1 Running 0 94s 192.169.36.74 k8s-node1 <none> <none>

nginx-deploy-d845cc945-bwjmm 1/1 Running 0 94s 192.169.169.135 k8s-node2 <none> <none>

nginx-deploy-d845cc945-rwbs5 1/1 Running 0 94s 192.169.36.73 k8s-node1 <none> <none>

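The names of these 3 pods are made up of the deployment name (nginx-deploy), a fixed string (d845cc945), and a random suffix (different for each pod; the first is 8xml2). What is the fixed string for?

As noted when deployments were introduced, a deployment manages replicasets rather than pods. The fixed string is the pod-template-hash, which ties the deployment to its replicaset: the replicaset is named after the deployment plus this hash, and the same value appears as a label on the replicaset and on its pods.

k8s uses it to make sure the deployment maps to exactly one replicaset for a given pod template.

The replicaset concept is covered in detail later; a basic understanding is enough for now.

# List all replicasets in the namespace

# The replicaset's labels identify all pods created for this deployment; the pods carry the same labels, and the pod-template-hash values match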

[root@k8s-master ~]# kubectl get replicaset --show-labels

NAME DESIRED CURRENT READY AGE LABELS

nginx-deploy-d845cc945 3 3 3 12m app=nginx-deploy,pod-template-hash=d845cc945

[root@k8s-master ~]# kubectl get pod --show-labels

NAME READY STATUS RESTARTS AGE LABELS

nginx-deploy-d845cc945-8xml2 1/1 Running 0 13m app=nginx-deploy,pod-template-hash=d845cc945

nginx-deploy-d845cc945-bwjmm 1/1 Running 0 13m app=nginx-deploy,pod-template-hash=d845cc945

nginx-deploy-d845cc945-rwbs5 1/1 Running 0 13m app=nginx-deploy,pod-template-hash=d845cc945

Create a file named my-deploy.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deploy
  labels:
    run: nginx-deploy
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-deploy
  template:
    metadata:
      labels:
        app: nginx-deploy
    spec:
      containers:
      - image: nginx
        name: nginx

This creates a deployment named nginx-deploy whose replicaset has 3 pods, each running one container named nginx

# Delete the old deployment first

[root@k8s-master k8s]# kubectl delete deploy nginx-deploy

deployment.apps "nginx-deploy" deleted

[root@k8s-master k8s]# kubectl get deploy

No resources found in default namespace.

# Apply the YAML file to create the deployment

[root@k8s-master k8s]# kubectl apply -f my-deploy.yaml

deployment.apps/nginx-deploy created

[root@k8s-master k8s]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 3/3 3 3 2m19s

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deploy-d845cc945-7mtfk 1/1 Running 0 2m23s

nginx-deploy-d845cc945-jbbhz 1/1 Running 0 2m23s

nginx-deploy-d845cc945-ntx8m 1/1 Running 0 2m23s

[root@k8s-master k8s]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deploy-d845cc945-7mtfk 1/1 Running 0 2m34s 192.169.169.136 k8s-node2 <none> <none>

nginx-deploy-d845cc945-jbbhz 1/1 Running 0 2m34s 192.169.36.76 k8s-node1 <none> <none>

nginx-deploy-d845cc945-ntx8m 1/1 Running 0 2m34s 192.169.36.75 k8s-node1 <none> <none>

[root@k8s-master k8s]# curl 192.169.169.136:80

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

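The YAML format in more detail:

apiVersion: apps/v1            # API version used for the Deployment
kind: Deployment               # type of resource to create
metadata:                      # metadata of the Deployment
  name: nginx                  # create a Deployment named nginx
  labels:                      # labels on the Deployment itself (any number); they do not need to match labels anywhere else
    app: demo
spec:                          # Deployment spec
  replicas: 2                  # the Deployment creates 2 pod replicas (default is 1)
  selector:                    # label selector: how the Deployment finds the pods it manages; must match the labels in the pod template
    matchLabels:
      app: demo
  template:                    # pod template
    metadata:                  # pod metadata
      labels:                  # pod labels (any number)
        app: demo
    spec:                      # pod spec
      containers:              # containers that run in the pod
      - name: nginx            # name of the container
        image: nginx:1.20.0    # image and tag (the tag defaults to latest when omitted)
        ports:
        - containerPort: 80    # container port (nginx's default port)

The apiVersion must be one that the cluster actually serves. If the apiVersion you write does not exist, k8s rejects the manifest. The available versions can be listed as follows: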

[root@k8s-master k8s]# kubectl api-versions

admissionregistration.k8s.io/v1

apiextensions.k8s.io/v1

apiregistration.k8s.io/v1

apps/v1

authentication.k8s.io/v1

authorization.k8s.io/v1

autoscaling/v1

autoscaling/v2

batch/v1

certificates.k8s.io/v1

coordination.k8s.io/v1

crd.projectcalico.org/v1

discovery.k8s.io/v1

events.k8s.io/v1

flowcontrol.apiserver.k8s.io/v1beta2

flowcontrol.apiserver.k8s.io/v1beta3

networking.k8s.io/v1

node.k8s.io/v1

policy/v1

rbac.authorization.k8s.io/v1

scheduling.k8s.io/v1

storage.k8s.io/v1

v1

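Requirement: as traffic grows and the system can no longer cope, more instances must be deployed; when traffic drops, instances should be removed to cut cost.

Scaling command: set a new replica count

# kubectl scale deployment <deployment-name> --replicas=<count>

# Use -n to select the namespace; the default is default

# Scaling in simply means setting a count lower than the current one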

kubectl scale deploy nginx-deploy --replicas=5

[root@k8s-master k8s]# kubectl scale deploy nginx-deploy --replicas=5

deployment.apps/nginx-deploy scaled

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-deploy-d845cc945-45bn6 1/1 Running 0 36s

nginx-deploy-d845cc945-75d5h 1/1 Running 0 36s

nginx-deploy-d845cc945-7mtfk 1/1 Running 0 15m

nginx-deploy-d845cc945-cnkrk 1/1 Running 0 2m22s

nginx-deploy-d845cc945-lxhgr 1/1 Running 0 36s

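If a node goes down while the deployment is running, k8s automatically recreates the affected pods on other nodes to restore the desired replica count.

When a node fails, the pods on that node stop working.

After about 5 minutes, replacement pods are created and started on the remaining worker nodes. The 5-minute wait comes from the k8s taint and toleration mechanism: by default, pods tolerate the node.kubernetes.io/not-ready and node.kubernetes.io/unreachable taints for 300 seconds before they are evicted.

Service interruption time = 5-minute eviction wait + pod rebuild time + service startup time + time for the readiness probe to pass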
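The 5-minute wait corresponds to the default 300-second tolerations visible in the earlier kubectl describe pod output. The fragment below is a sketch of how a deployment's pod template could shorten that wait; the value of 60 seconds is an illustrative assumption, not a recommendation from the original text.

```yaml
# Fragment of a Deployment manifest (only the relevant fields are shown).
spec:
  template:
    spec:
      tolerations:
        # Evict pods 60s after the node is marked not-ready (default is 300s).
        - key: node.kubernetes.io/not-ready
          operator: Exists
          effect: NoExecute
          tolerationSeconds: 60
        # Evict pods 60s after the node becomes unreachable (default is 300s).
        - key: node.kubernetes.io/unreachable
          operator: Exists
          effect: NoExecute
          tolerationSeconds: 60
```

A shorter value reduces the interruption time, at the cost of moving pods more eagerly when a node is only briefly unreachable.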

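Suppose node2 comes back: will the original pods return to it?

No. The deployment only enforces the replica count. Once all 5 replicas are running on node1, they stay there even after node2 recovers; nothing is rebuilt or moved back

First, watch the pods in real time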

kubectl get pod -w

[root@k8s-master k8s]# kubectl get pod -w

NAME READY STATUS RESTARTS AGE

nginx-deploy-d845cc945-45bn6 1/1 Running 0 19m

nginx-deploy-d845cc945-75d5h 1/1 Running 0 19m

nginx-deploy-d845cc945-7mtfk 1/1 Running 0 34m

nginx-deploy-d845cc945-cnkrk 1/1 Running 0 21m

nginx-deploy-d845cc945-lxhgr 1/1 Running 0 19m

Open a new terminal window, change the nginx image of the deployment, and watch the output in the monitoring window

# Change the image version to nginx:1.19.2

[root@k8s-master ~]# kubectl set image deploy nginx-deploy nginx=nginx:1.19.2 --record

Flag --record has been deprecated, --record will be removed in the future

deployment.apps/nginx-deploy image updated

Output in the monitoring window:

......

nginx-deploy-86b5d68f7b-g8v2n 0/1 Pending 0 0s

nginx-deploy-86b5d68f7b-fnj68 0/1 Pending 0 0s

nginx-deploy-86b5d68f7b-g8v2n 0/1 Pending 0 0s

nginx-deploy-86b5d68f7b-fnj68 0/1 Pending 0 0s

nginx-deploy-86b5d68f7b-g8v2n 0/1 ContainerCreating 0 0s

nginx-deploy-86b5d68f7b-fnj68 0/1 ContainerCreating 0 0s

nginx-deploy-86b5d68f7b-5mnsp 0/1 Pending 0 0s

nginx-deploy-86b5d68f7b-5mnsp 0/1 Pending 0 0s

nginx-deploy-86b5d68f7b-5mnsp 0/1 ContainerCreating 0 0s

nginx-deploy-d845cc945-75d5h 1/1 Terminating 0 22m

nginx-deploy-d845cc945-75d5h 0/1 Terminating 0 22m

nginx-deploy-86b5d68f7b-fnj68 0/1 ContainerCreating 0 1s

nginx-deploy-86b5d68f7b-g8v2n 0/1 ContainerCreating 0 1s

nginx-deploy-86b5d68f7b-5mnsp 0/1 ContainerCreating 0 1s

nginx-deploy-d845cc945-75d5h 0/1 Terminating 0 22m

nginx-deploy-d845cc945-75d5h 0/1 Terminating 0 22m

......

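This is a rolling update: new pods are started and old pods are terminated in batches, so part of the replicas keeps serving throughout. With the default strategy, at most 25% of the desired replicas may be unavailable and at most 25% extra pods may be created during the update.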
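How aggressively a rolling update replaces pods is controlled by the deployment's update strategy. The fragment below is a sketch with illustrative values (one extra pod at a time, no capacity loss); these values are assumptions chosen for the example and are not used elsewhere in this walkthrough.

```yaml
# Fragment of a Deployment manifest (only the relevant fields are shown).
spec:
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1          # at most 1 pod above the desired replica count
      maxUnavailable: 0    # never drop below the desired number of ready pods
```

With these settings an old pod is terminated only after a new pod has become ready, which trades update speed for full capacity during the rollout.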

View the rollout history of the deployment

kubectl rollout history deploy nginx-deploy

[root@k8s-master k8s]# kubectl rollout history deploy nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

1 <none>

2 kubectl set image deploy nginx-deploy nginx=nginx:1.19.2 --record=true

View the details of a specific revision:

kubectl rollout history deploy nginx-deploy --revision=2

[root@k8s-master k8s]# kubectl rollout history deploy nginx-deploy --revision=2

deployment.apps/nginx-deploy with revision #2

Pod Template:

Labels: app=nginx-deploy

pod-template-hash=86b5d68f7b

Annotations: kubernetes.io/change-cause: kubectl set image deploy nginx-deploy nginx=nginx:1.19.2 --record=true

Containers:

nginx:

Image: nginx:1.19.2

Port: <none>

Host Port: <none>

Environment: <none>

Mounts: <none>

Volumes: <none>

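rollout undo rolls back to a given revision

# By default, roll back to the previous revision

kubectl rollout undo deploy nginx-deploy

Options:

--to-revision: the revision to roll back to. The default is 0, which means the previous revision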

[root@k8s-master k8s]# kubectl rollout undo deploy nginx-deploy

deployment.apps/nginx-deploy rolled back

[root@k8s-master k8s]# kubectl rollout history deploy nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

2 kubectl set image deploy nginx-deploy nginx=nginx:1.19.2 --record=true

3 <none>

[root@k8s-master k8s]# kubectl rollout undo deploy nginx-deploy --to-revision=2

deployment.apps/nginx-deploy rolled back

[root@k8s-master k8s]# kubectl rollout history deploy nginx-deploy

deployment.apps/nginx-deploy

REVISION CHANGE-CAUSE

3 <none>

4 kubectl set image deploy nginx-deploy nginx=nginx:1.19.2 --record=true

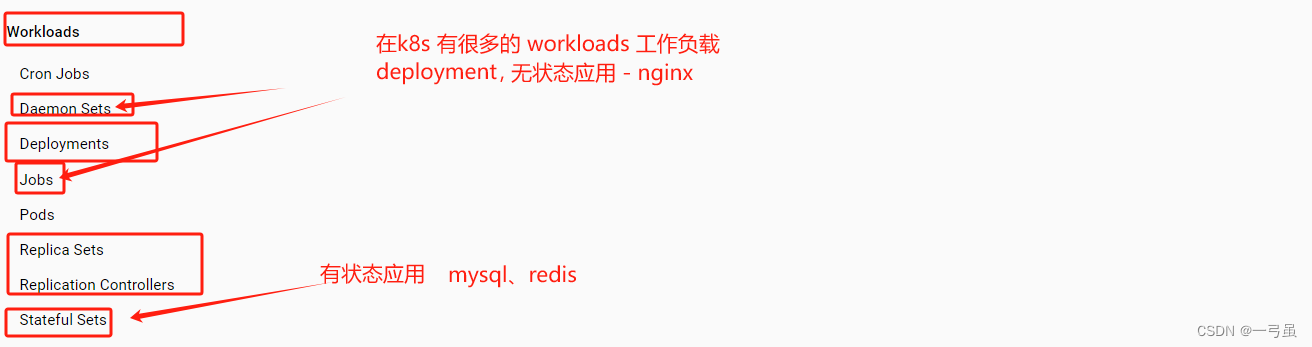

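Summary:

Workload resources: each one is a way of deploying pods

Deployment: for stateless applications such as microservices; provides replica management

StatefulSet: for stateful applications such as redis and mysql; provides stable storage, stable network identity, and so on

DaemonSet: for daemon-style applications such as log collectors; one copy runs on every machine.

Job/CronJob: for tasks such as garbage cleanup, log archiving, sending mail, and database backups. A Job runs a task to completion, and a CronJob runs it at scheduled times.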

apiVersion:

kind: Deployment / StatefulSet / DaemonSet / CronJob

metadata:

spec:
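As one concrete instance of the skeleton above, here is a sketch of a CronJob. The name nightly-cleanup, the schedule, the busybox image, and the echo command are placeholders chosen for illustration.

```yaml
# Hypothetical example: run a cleanup task every day at 02:00.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: nightly-cleanup
spec:
  schedule: "0 2 * * *"            # standard cron syntax: minute hour day month weekday
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure # Job pods must use OnFailure or Never
          containers:
            - name: cleanup
              image: busybox
              command: ["sh", "-c", "echo cleaning up"]
```

The other workload kinds follow the same four top-level fields; only the apiVersion, the kind, and the content of spec differ.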

service

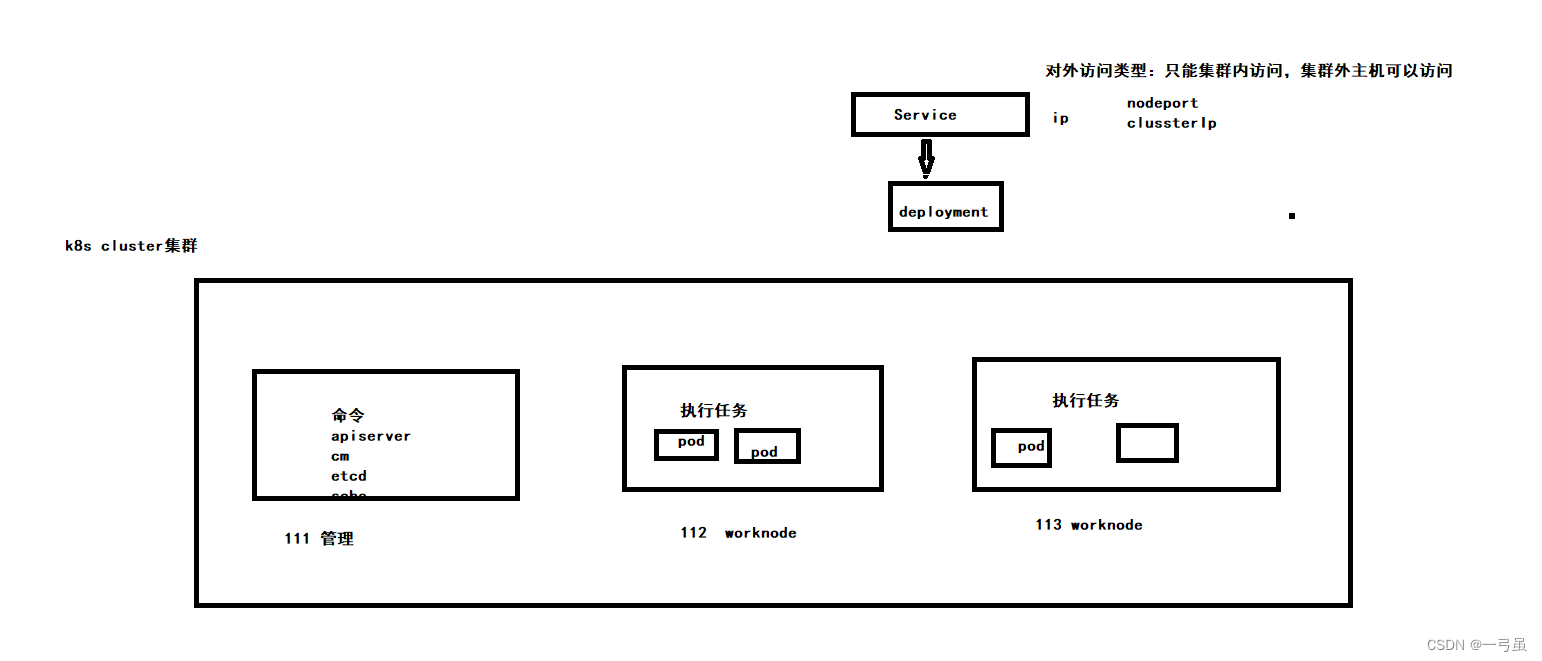

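So far, none of the applications we have deployed can be reached from a browser

As explained in the pod section, pods are short-lived; they come and go, so the IP addresses of the pods that provide a service change frequently. Clients, however, expect a service to be reachable at a stable IP address. This conflict between how pods behave and what clients expect is the reason the service resource exists.

service: service discovery and load balancing for pods

Basic operations

First, delete all deployments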

[root@k8s-master k8s]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

nginx-deploy 5/5 5 5 78m

[root@k8s-master k8s]# kubectl delete deploy nginx-deploy

deployment.apps "nginx-deploy" deleted

Deploy three nginx pods

[root@k8s-master k8s]# kubectl create deploy web-nginx --image=nginx --replicas=3

deployment.apps/web-nginx created

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-nginx-5f989946d-2lbc4 1/1 Running 0 33s

web-nginx-5f989946d-hgxm8 1/1 Running 0 33s

web-nginx-5f989946d-m5cqq 1/1 Running 0 33s

Once all pods are running, enter each container and change its html

# Enter the nginx container in each of the 3 pods

kubectl exec -it web-nginx-5f989946d-2lbc4 -- /bin/bash

kubectl exec -it web-nginx-5f989946d-hgxm8 -- /bin/bash

kubectl exec -it web-nginx-5f989946d-m5cqq -- /bin/bash

# Change to the directory that holds the html files

# Run in all three containers

cd /usr/share/nginx/html

# Run one per container

echo "web-nginx-111" > index.html

echo "web-nginx-222" > index.html

echo "web-nginx-333" > index.html

# Check the pod IPs

[root@k8s-master k8s]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-nginx-5f989946d-2lbc4 1/1 Running 0 11m 192.169.169.145 k8s-node2 <none> <none>

web-nginx-5f989946d-hgxm8 1/1 Running 0 11m 192.169.36.89 k8s-node1 <none> <none>

web-nginx-5f989946d-m5cqq 1/1 Running 0 11m 192.169.36.90 k8s-node1 <none> <none>

# Request each pod to see the modified content

[root@k8s-master k8s]# curl 192.169.169.145

web-nginx-111

[root@k8s-master k8s]# curl 192.169.36.89

web-nginx-222

[root@k8s-master k8s]# curl 192.169.36.90

web-nginx-333

Here we create a service from the deployment with kubectl expose

# kubectl expose deploy <deployment-name> --port=<port exposed by the service> --target-port=<container port in the pod>

kubectl expose deploy web-nginx --port=8000 --target-port=80

[root@k8s-master k8s]# kubectl expose deploy web-nginx --port=8000 --target-port=80

service/web-nginx exposed

# By default, only services in the default namespace are listed

kubectl get svc

[root@k8s-master k8s]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 23h

web-nginx ClusterIP 10.96.12.5 <none> 8000/TCP 56s

Next, use the service IP to access the nginx service

[root@k8s-master k8s]# curl 10.96.12.5:8000

web-nginx-333

[root@k8s-master k8s]# curl 10.96.12.5:8000

web-nginx-111

[root@k8s-master k8s]# curl 10.96.12.5:8000

web-nginx-222

[root@k8s-master k8s]# curl 10.96.12.5:8000

web-nginx-111

[root@k8s-master k8s]# curl 10.96.12.5:8000

web-nginx-333

[root@k8s-master k8s]# curl 10.96.12.5:8000

web-nginx-333

[root@k8s-master k8s]# curl 10.96.12.5:8000

web-nginx-222

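As shown, each request is load-balanced to one of the nginx pods, and the choice is random

The service can also be reached by DNS name, but only from inside a container; in this setup the name cannot be used from the cluster hosts themselves

Application code that calls another service through a service can therefore use the DNS name directly

A service gets a different IP each time it is created, but programs need an address that does not change, so they use the DNS name

Service DNS name format: <service-name>.<namespace>.svc:<port>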

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-nginx-5f989946d-2lbc4 1/1 Running 0 57m

web-nginx-5f989946d-hgxm8 1/1 Running 0 57m

web-nginx-5f989946d-m5cqq 1/1 Running 0 57m

[root@k8s-master k8s]# kubectl exec -it web-nginx-5f989946d-2lbc4 -- /bin/bash

root@web-nginx-5f989946d-2lbc4:/# curl web-nginx.default.svc:8000

web-nginx-333

root@web-nginx-5f989946d-2lbc4:/# curl web-nginx.default.svc:8000

web-nginx-111

root@web-nginx-5f989946d-2lbc4:/# curl web-nginx.default.svc:8000

web-nginx-333

root@web-nginx-5f989946d-2lbc4:/# curl web-nginx.default.svc:8000

web-nginx-333

root@web-nginx-5f989946d-2lbc4:/# curl web-nginx.default.svc:8000

web-nginx-111

root@web-nginx-5f989946d-2lbc4:/# curl web-nginx.default.svc:8000

web-nginx-111

root@web-nginx-5f989946d-2lbc4:/# curl web-nginx.default.svc:8000

web-nginx-333

root@web-nginx-5f989946d-2lbc4:/# curl web-nginx.default.svc:8000

web-nginx-333

root@web-nginx-5f989946d-2lbc4:/# curl web-nginx.default.svc:8000

web-nginx-111

root@web-nginx-5f989946d-2lbc4:/# curl web-nginx.default.svc:8000

web-nginx-222

kubectl describe svc web-nginx

[root@k8s-master k8s]# kubectl describe svc web-nginx

Name: web-nginx

Namespace: default

Labels: app=web-nginx

Annotations: <none>

Selector: app=web-nginx # selects the pods labeled app=web-nginx; this is how the service maps to pods

Type: ClusterIP

IP Family Policy: SingleStack

IP Families: IPv4

IP: 10.96.12.5 # cluster IP that clients use to reach the service

IPs: 10.96.12.5

Port: <unset> 8000/TCP # port exposed by the service

TargetPort: 80/TCP

Endpoints: 192.169.169.145:80,192.169.36.89:80,192.169.36.90:80

Session Affinity: None

Events: <none>

kubectl delete svc web-nginx

[root@k8s-master k8s]# kubectl delete svc web-nginx

service "web-nginx" deleted

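The services we have created so far can only be accessed from inside the cluster, not from a browser.

In a company setting, we want web services to be exposed externally so that users can reach them.

For redis, mysql, mq and similar services, we want them to be usable only internally and not exposed externally.

To achieve this, look at the --type parameter when creating a service: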

--type='':

Type for this service: ClusterIP, NodePort, LoadBalancer, or ExternalName. Default is 'ClusterIP'.

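The default is ClusterIP, which is not exposed externally and can only be accessed from inside the cluster.

We can use NodePort instead. A NodePort service can be reached from outside the cluster through a node's IP address and the allocated port number.

# Create the service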

[root@k8s-master k8s]# kubectl expose deploy web-nginx --port=8000 --target-port=80 --type=NodePort

service/web-nginx exposed

# List all services in the default namespace

[root@k8s-master k8s]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 23h

web-nginx NodePort 10.96.188.73 <none> 8000:32435/TCP 29s

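In the PORT(S) column, web-nginx now shows 32435 in addition to the in-cluster service port 8000. 32435 is the externally reachable port, and the service can be accessed through it on any node of the cluster.

We can now open a browser and enter <any-node-ip>:32435 to reach nginx. If it is not reachable, open the port in the Alibaba Cloud security group.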
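When scripting against this output, the node port can be pulled out of the PORT(S) column. The helper below is a sketch under that assumption; `node_port_for` is a hypothetical name, and it only handles the `port:nodePort/protocol` layout shown above.

```shell
# Extract the node port mapped to a given service port.
# $1 = PORT(S) value, e.g. "80:32726/TCP,443:30681/TCP"
# $2 = service port to look up
node_port_for() {
  printf '%s\n' "$1" | tr ',' '\n' |
    awk -F'[:/]' -v want="$2" 'NF == 3 && $1 == want { print $2 }'
}

node_port_for '8000:32435/TCP' 8000
```

A ClusterIP entry such as `8000/TCP` has no node port, so the function prints nothing for it. Reading the field directly with `kubectl get svc web-nginx -o jsonpath='{.spec.ports[0].nodePort}'` is an alternative that avoids parsing text.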

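ClusterIP: the default type. A virtual IP is allocated automatically and is reachable only from inside the cluster.

NodePort: builds on ClusterIP by binding a port for the service on every machine, so the service can be reached at <node-ip>:<NodePort>.

LoadBalancer: builds on NodePort by using the cloud provider to create an external load balancer that forwards requests to the NodePort.

ExternalName: brings a service from outside the cluster into the cluster so that it can be used directly from inside. No proxy of any kind is created. This is only supported by kube-dns in Kubernetes 1.7 or later.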

The service we created has the IP 10.96.188.73. Attentive readers may have noticed something here.

When we initialized the master node, we ran the following command:

kubeadm init \
  --apiserver-advertise-address=192.168.0.1 \
  --control-plane-endpoint=cluster-master \
  --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
  --kubernetes-version v1.28.2 \
  --service-cidr=10.96.0.0/16 \
  --pod-network-cidr=192.169.0.0/16 \
  --cri-socket unix:///var/run/cri-dockerd.sock

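The --service-cidr=10.96.0.0/16 option in this command is where we specified the IP range for services, and 10.96.188.73 falls inside it.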
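The relationship between an address and a CIDR range can be checked with a little integer arithmetic. The following is a minimal sketch for IPv4 only; `ip_to_int` and `in_cidr` are hypothetical helper names, not part of kubeadm or kubectl.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  (
    IFS=.            # split on dots; the subshell keeps IFS local
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
  )
}

# Succeed if address $1 lies inside CIDR block $2 (e.g. 10.96.0.0/16).
in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

if in_cidr 10.96.188.73 10.96.0.0/16; then
  echo "10.96.188.73 is inside the service CIDR"
fi
```

The pod addresses seen earlier, such as 192.169.36.89, fail the same check against the service range because they come from --pod-network-cidr instead.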

k8s has its own internal network management system, and this internal network connects all of our services together.

Edit the file my-service.yaml:

apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: web-nginx # must match the pod labels set by the deployment
  ports:
    - protocol: TCP
      port: 8000
      targetPort: 80
  type: NodePort

[root@k8s-master k8s]# kubectl apply -f my-service.yaml

service/my-service created

[root@k8s-master k8s]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 23h

my-service NodePort 10.96.78.150 <none> 8000:30812/TCP 2m37s
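The node port in this output (30812) was chosen by the cluster. If a predictable port is preferred, the Service spec accepts a `nodePort` field under `ports`. The sketch below prints such a manifest; `render_nodeport_service` and the value 30080 are illustrative assumptions, and the chosen port has to lie in the cluster's node port range (30000-32767 unless the API server was configured otherwise).

```shell
# Print a NodePort Service manifest with a fixed node port.
# $1 = service name, $2 = value of the app label to select,
# $3 = service port, $4 = target (container) port, $5 = node port
render_nodeport_service() {
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: $1
spec:
  type: NodePort
  selector:
    app: $2
  ports:
    - protocol: TCP
      port: $3
      targetPort: $4
      nodePort: $5
EOF
}

render_nodeport_service my-service web-nginx 8000 80 30080
```

The output can be piped to `kubectl apply -f -` instead of being saved to a file first.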

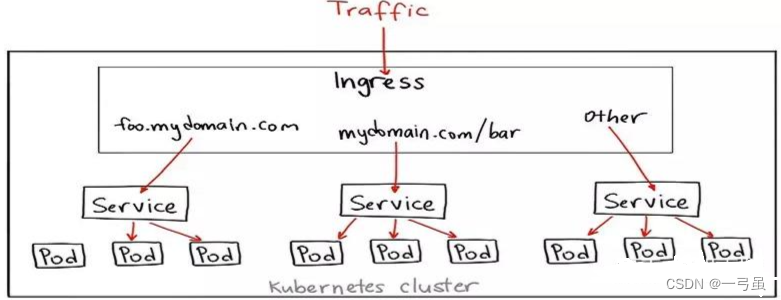

ingress

Official documentation: https://kubernetes.io/zh-cn/docs/concepts/services-networking/ingress/

Project site: https://kubernetes.github.io/ingress-nginx/ (this ingress controller is built on nginx).

Installation guide: https://kubernetes.github.io/ingress-nginx/deploy/

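What is ingress

ingress: the unified gateway entry point for services

In k8s, pods and services have to be exposed externally through NodePort, but as the number of microservices grows, the ports become hard to manage. So we usually set up an ingress to handle route forwarding, which makes unified management easier. The result is shown in the figure:

ingress consists of two main parts:

- The ingress controller is the traffic entry point. It is an actual piece of software, typically nginx or HAProxy.

- The ingress resource describes the concrete routing rules.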

What ingress can do:

- Routing based on HTTP headers

- Routing based on path

- Timeout settings for a single ingress

- Request rate limiting

- Rewrite rules

Installing ingress

# Install ingress

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.8.2/deploy/static/provider/cloud/deploy.yaml

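If that site is unreachable, the manifest cannot be downloaded; in that case use the yaml file below. Note that the file below is labeled version 1.5.1 and uses the cnych/ingress-nginx images, so it is not identical to the v1.8.2 manifest in the URL above.

Edit the file ingress.yaml: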

apiVersion: v1

kind: Namespace

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

name: ingress-nginx

---

apiVersion: v1

automountServiceAccountToken: true

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: v1

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- namespaces

verbs:

- get

- apiGroups:

- ""

resources:

- configmaps

- pods

- secrets

- endpoints

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resourceNames:

- ingress-nginx-leader

resources:

- configmaps

verbs:

- get

- update

- apiGroups:

- ""

resources:

- configmaps

verbs:

- create

- apiGroups:

- coordination.k8s.io

resourceNames:

- ingress-nginx-leader

resources:

- leases

verbs:

- get

- update

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- create

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- discovery.k8s.io

resources:

- endpointslices

verbs:

- list

- watch

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-admission

namespace: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- secrets

verbs:

- get

- create

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx

rules:

- apiGroups:

- ""

resources:

- configmaps

- endpoints

- nodes

- pods

- secrets

- namespaces

verbs:

- list

- watch

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- list

- watch

- apiGroups:

- ""

resources:

- nodes

verbs:

- get

- apiGroups:

- ""

resources:

- services

verbs:

- get

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs:

- get

- list

- watch

- apiGroups:

- ""

resources:

- events

verbs:

- create

- patch

- apiGroups:

- networking.k8s.io

resources:

- ingresses/status

verbs:

- update

- apiGroups:

- networking.k8s.io

resources:

- ingressclasses

verbs:

- get

- list

- watch

- apiGroups:

- discovery.k8s.io

resources:

- endpointslices

verbs:

- list

- watch

- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-admission

rules:

- apiGroups:

- admissionregistration.k8s.io

resources:

- validatingwebhookconfigurations

verbs:

- get

- update

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-admission

namespace: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: Role

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx

subjects:

- kind: ServiceAccount

name: ingress-nginx

namespace: ingress-nginx

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-admission

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: ingress-nginx-admission

subjects:

- kind: ServiceAccount

name: ingress-nginx-admission

namespace: ingress-nginx

---

apiVersion: v1

data:

allow-snippet-annotations: "true"

kind: ConfigMap

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-controller

namespace: ingress-nginx

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

externalTrafficPolicy: Local

ipFamilies:

- IPv4

ipFamilyPolicy: SingleStack

ports:

- appProtocol: http

name: http

port: 80

protocol: TCP

targetPort: http

- appProtocol: https

name: https

port: 443

protocol: TCP

targetPort: https

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: LoadBalancer

---

apiVersion: v1

kind: Service

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-controller-admission

namespace: ingress-nginx

spec:

ports:

- appProtocol: https

name: https-webhook

port: 443

targetPort: webhook

selector:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

type: ClusterIP

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-controller

namespace: ingress-nginx

spec:

minReadySeconds: 0

revisionHistoryLimit: 10

selector:

matchLabels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

template:

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

spec:

containers:

- args:

- /nginx-ingress-controller

- --publish-service=$(POD_NAMESPACE)/ingress-nginx-controller

- --election-id=ingress-nginx-leader

- --controller-class=k8s.io/ingress-nginx

- --ingress-class=nginx

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

- --validating-webhook=:8443

- --validating-webhook-certificate=/usr/local/certificates/cert

- --validating-webhook-key=/usr/local/certificates/key

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

- name: LD_PRELOAD

value: /usr/local/lib/libmimalloc.so

image: cnych/ingress-nginx:v1.5.1

imagePullPolicy: IfNotPresent

lifecycle:

preStop:

exec:

command:

- /wait-shutdown

livenessProbe:

failureThreshold: 5

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

name: controller

ports:

- containerPort: 80

name: http

protocol: TCP

- containerPort: 443

name: https

protocol: TCP

- containerPort: 8443

name: webhook

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10254

scheme: HTTP

initialDelaySeconds: 10

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 1

resources:

requests:

cpu: 100m

memory: 90Mi

securityContext:

allowPrivilegeEscalation: true

capabilities:

add:

- NET_BIND_SERVICE

drop:

- ALL

runAsUser: 101

volumeMounts:

- mountPath: /usr/local/certificates/

name: webhook-cert

readOnly: true

dnsPolicy: ClusterFirst

nodeSelector:

kubernetes.io/os: linux

serviceAccountName: ingress-nginx

terminationGracePeriodSeconds: 300

volumes:

- name: webhook-cert

secret:

secretName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-admission-create

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-admission-create

spec:

containers:

- args:

- create

- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc

- --namespace=$(POD_NAMESPACE)

- --secret-name=ingress-nginx-admission

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: cnych/ingress-nginx-kube-webhook-certgen:v20220916-gd32f8c343

imagePullPolicy: IfNotPresent

name: create

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: batch/v1

kind: Job

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-admission-patch

namespace: ingress-nginx

spec:

template:

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-admission-patch

spec:

containers:

- args:

- patch

- --webhook-name=ingress-nginx-admission

- --namespace=$(POD_NAMESPACE)

- --patch-mutating=false

- --secret-name=ingress-nginx-admission

- --patch-failure-policy=Fail

env:

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

image: cnych/ingress-nginx-kube-webhook-certgen:v20220916-gd32f8c343

imagePullPolicy: IfNotPresent

name: patch

securityContext:

allowPrivilegeEscalation: false

nodeSelector:

kubernetes.io/os: linux

restartPolicy: OnFailure

securityContext:

fsGroup: 2000

runAsNonRoot: true

runAsUser: 2000

serviceAccountName: ingress-nginx-admission

---

apiVersion: networking.k8s.io/v1

kind: IngressClass

metadata:

labels:

app.kubernetes.io/component: controller

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: nginx

spec:

controller: k8s.io/ingress-nginx

---

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:

labels:

app.kubernetes.io/component: admission-webhook

app.kubernetes.io/instance: ingress-nginx

app.kubernetes.io/name: ingress-nginx

app.kubernetes.io/part-of: ingress-nginx

app.kubernetes.io/version: 1.5.1

name: ingress-nginx-admission

webhooks:

- admissionReviewVersions:

- v1

clientConfig:

service:

name: ingress-nginx-controller-admission

namespace: ingress-nginx

path: /networking/v1/ingresses

failurePolicy: Fail

matchPolicy: Equivalent

name: validate.nginx.ingress.kubernetes.io

rules:

- apiGroups:

- networking.k8s.io

apiVersions:

- v1

operations:

- CREATE

- UPDATE

resources:

- ingresses

sideEffects: None

Apply the yaml file to install ingress:

[root@k8s-master k8s]# vim ingress.yaml

[root@k8s-master k8s]# kubectl apply -f ingress.yaml

namespace/ingress-nginx created

serviceaccount/ingress-nginx created

serviceaccount/ingress-nginx-admission created

role.rbac.authorization.k8s.io/ingress-nginx created

role.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrole.rbac.authorization.k8s.io/ingress-nginx created

clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created

rolebinding.rbac.authorization.k8s.io/ingress-nginx created

rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created

clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created

configmap/ingress-nginx-controller created

service/ingress-nginx-controller created

service/ingress-nginx-controller-admission created

deployment.apps/ingress-nginx-controller created

job.batch/ingress-nginx-admission-create created

job.batch/ingress-nginx-admission-patch created

ingressclass.networking.k8s.io/nginx created

validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created

The namespace is ingress-nginx. Take a look at its pods, services and deployments:

[root@k8s-master k8s]# kubectl get deploy -n ingress-nginx

NAME READY UP-TO-DATE AVAILABLE AGE

ingress-nginx-controller 1/1 1 1 2m25s

[root@k8s-master k8s]# kubectl get svc -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

ingress-nginx-controller LoadBalancer 10.96.30.238 <pending> 80:32726/TCP,443:30681/TCP 2m31s

ingress-nginx-controller-admission ClusterIP 10.96.155.54 <none> 443/TCP 2m31s

[root@k8s-master k8s]# kubectl get pod -n ingress-nginx

NAME READY STATUS RESTARTS AGE

ingress-nginx-admission-create-nlwsf 0/1 Completed 0 3m12s

ingress-nginx-admission-patch-2g25m 0/1 Completed 3 3m12s

ingress-nginx-controller-7598486d5d-7chkw 1/1 Running 0 3m12s

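k8s automatically creates two node ports: one for 80 (http) and one for 443 (https).

ingress-nginx-controller is the service that is exposed externally.

ingress-nginx-controller-admission is the admission controller. It validates Ingress objects and rejects requests that do not conform.

The core component is pod/ingress-nginx-controller.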

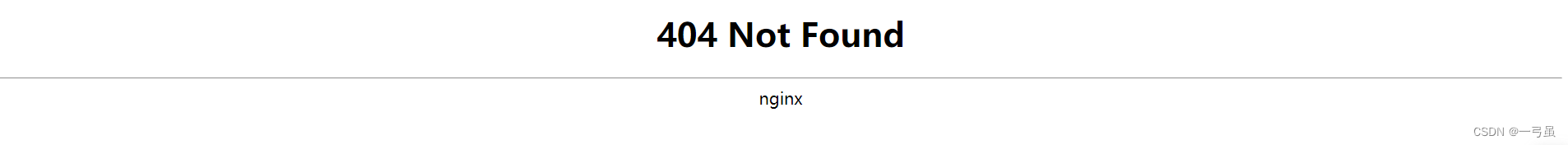

The service was created successfully. First, access it from inside the cluster:

[root@k8s-master k8s]# kubectl get pod -o wide -n ingress-nginx

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-nlwsf 0/1 Completed 0 8m10s 192.169.169.146 k8s-node2 <none> <none>

ingress-nginx-admission-patch-2g25m 0/1 Completed 3 8m10s 192.169.36.91 k8s-node1 <none> <none>

ingress-nginx-controller-7598486d5d-7chkw 1/1 Running 0 8m10s 192.169.36.92 k8s-node1 <none> <none>

[root@k8s-master k8s]# curl 192.169.36.92

<html>

<head><title>404 Not Found</title></head>

<body>

<center><h1>404 Not Found</h1></center>

<hr><center>nginx</center>

</body>

</html>

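Access from inside the cluster works (nginx answers, and the 404 only means no Ingress rules exist yet). Next, access it from a browser: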

[root@k8s-master k8s]# kubectl get svc -o wide -n ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

ingress-nginx-controller LoadBalancer 10.96.30.238 <pending> 80:32726/TCP,443:30681/TCP 9m14s app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

ingress-nginx-controller-admission ClusterIP 10.96.155.54 <none> 443/TCP 9m14s app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

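Here ingress-nginx-controller has the type LoadBalancer, and since no external load balancer is available its EXTERNAL-IP stays <pending>, so we go through the node ports. The manifest also sets externalTrafficPolicy: Local on this service, which means it can only be reached through the node where the controller pod is running: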

ingress-nginx-controller-7598486d5d-7chkw 1/1 Running 0 8m10s 192.169.36.92 k8s-node1 <none> <none>

As seen above, the controller pod is deployed on node1, so enter either of the following in a browser:

http://<node1-ip>:32726

https://<node1-ip>:30681

Basic ingress commands

First, delete the services and deployments in the default namespace.

# Create two deployments

[root@k8s-master k8s]# kubectl create deployment web-nginx --image=nginx --replicas=2

deployment.apps/web-nginx created

[root@k8s-master k8s]# kubectl create deployment web-tomcat --image=tomcat:8.5.92 --replicas=2

deployment.apps/web-tomcat created

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

web-nginx-5f989946d-6krjl 1/1 Running 0 84s

web-nginx-5f989946d-dhstb 1/1 Running 0 84s

web-tomcat-758dc4ddf6-bgk2b 1/1 Running 0 65s

web-tomcat-758dc4ddf6-f2tjh 1/1 Running 0 65s

# Create two services

[root@k8s-master k8s]# kubectl expose deploy web-nginx --port=8000 --target-port=80

service/web-nginx exposed

[root@k8s-master k8s]# kubectl expose deploy web-tomcat --port=8080 --target-port=8080

service/web-tomcat exposed

[root@k8s-master k8s]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

web-nginx-5f989946d-6krjl 1/1 Running 0 2m9s 192.169.36.93 k8s-node1 <none> <none>

web-nginx-5f989946d-dhstb 1/1 Running 0 2m9s 192.169.169.147 k8s-node2 <none> <none>

web-tomcat-758dc4ddf6-bgk2b 1/1 Running 0 110s 192.169.36.94 k8s-node1 <none> <none>

web-tomcat-758dc4ddf6-f2tjh 1/1 Running 0 110s 192.169.169.148 k8s-node2 <none> <none>

[root@k8s-master k8s]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 25h <none>

web-nginx ClusterIP 10.96.94.113 <none> 8000/TCP 27s app=web-nginx

web-tomcat ClusterIP 10.96.44.167 <none> 8080/TCP 19s app=web-tomcat

# Test access from inside the cluster

[root@k8s-master k8s]# curl 10.96.94.113:8000

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

[root@k8s-master k8s]# curl 10.96.44.167:8080

<!doctype html><html lang="en"><head><title>HTTP Status 404 – Not Found</title><style type="text/css">body {font-family:Tahoma,Arial,sans-serif;} h1, h2, h3, b {color:white;background-color:#525D76;} h1 {font-size:22px;} h2 {font-size:16px;} h3 {font-size:14px;} p {font-size:12px;} a {color:black;} .line {height:1px;background-color:#525D76;border:none;}</style></head><body><h1>HTTP Status 404 – Not Found</h1><hr class="line" /><p><b>Type</b> Status Report</p><p><b>Description</b> The origin server did not find a current representation for the target resource or is not willing to disclose that one exists.</p><hr class="line" /><h3>Apache Tomcat/8.5.92</h3></body></html>

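Both services are reachable inside the cluster. Now we put an ingress in front of them to route and load-balance traffic to these internal services.

Edit the file my-ingress.yaml: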

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
    - host: "nginx.yigongsui.com"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: web-nginx
                port:
                  number: 8000
    - host: "tomcat.yigongsui.com"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: web-tomcat
                port:
                  number: 8080

[root@k8s-master k8s]# kubectl apply -f my-ingress.yaml

ingress.networking.k8s.io/ingress-host-bar created

The yaml file above maps the domain nginx.yigongsui.com to the web-nginx service on port 8000, and the domain tomcat.yigongsui.com to the web-tomcat service on port 8080.

kubectl get ingress

[root@k8s-master k8s]# kubectl get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress-host-bar nginx nginx.yigongsui.com,tomcat.yigongsui.com 80 19s

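Since we want to access the services by domain name, we can test on the local machine by adding domain mappings to the hosts file.

Add the following to the hosts file: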

120.27.160.240 nginx.yigongsui.com

120.27.160.240 tomcat.yigongsui.com

The IP is that of node1, because ingress-nginx-controller is deployed on node1.
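With more than a couple of hosts, the entries can be generated rather than typed. A minimal sketch, where `hosts_entries` is a hypothetical helper and the IP is the node1 address used above:

```shell
# Print one hosts-file line per host name, all pointing at the same IP.
# $1 = IP address, remaining arguments = host names
hosts_entries() {
  ip=$1
  shift
  for host in "$@"; do
    printf '%s %s\n' "$ip" "$host"
  done
}

hosts_entries 120.27.160.240 nginx.yigongsui.com tomcat.yigongsui.com
```

On Linux or macOS the output could be appended with `| sudo tee -a /etc/hosts`; on other systems, paste the lines into the hosts file by hand.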

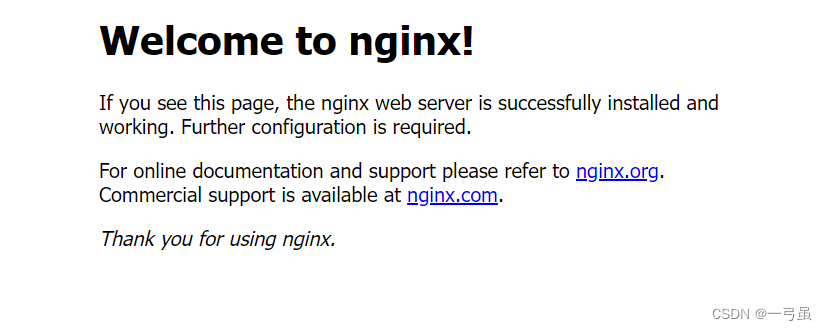

Once this is configured, access nginx and tomcat by domain name:

# Access nginx; either of the two works

http://nginx.yigongsui.com:32726

https://nginx.yigongsui.com:30681

# Access tomcat

http://tomcat.yigongsui.com:32726

https://tomcat.yigongsui.com:30681
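The same check can be made from a terminal without touching the hosts file, because curl's `--resolve` option pins a host name and port to an address for a single request. The function below is a sketch; `probe_ingress` is a hypothetical name, and the `CURL` variable exists only so the command can be swapped out when experimenting.

```shell
# Print the HTTP status code returned for a host routed by the ingress.
# $1 = host name from the Ingress rule, $2 = node IP, $3 = node port
probe_ingress() {
  "${CURL:-curl}" -s -o /dev/null -w '%{http_code}' \
    --resolve "$1:$3:$2" "http://$1:$3/"
  echo
}

# Usage (values taken from the steps above):
# probe_ingress nginx.yigongsui.com 120.27.160.240 32726
# probe_ingress tomcat.yigongsui.com 120.27.160.240 32726
```

Because the ingress routes on the requested host name, the two calls reach different backends even though they target the same node IP and port.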

Annotations reference: https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/annotations/

Edit my-ingress.yaml again:

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-host-bar
  # ingress behavior settings are written here, as annotations
  annotations:
    nginx.ingress.kubernetes.io/limit-rps: "1"
spec:
  ingressClassName: nginx
  rules:
    - host: "nginx.yigongsui.com"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: web-nginx
                port:
                  number: 8000
    - host: "tomcat.yigongsui.com"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: web-tomcat
                port:
                  number: 8080

[root@k8s-master k8s]# kubectl apply -f my-ingress.yaml

ingress.networking.k8s.io/ingress-host-bar configured

After applying the change, refresh the page repeatedly and you will see:

The requests are now being rate limited.
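How quickly the limit kicks in depends on more than the limit-rps value. As far as the ingress-nginx annotation documentation describes it, the controller allows a burst of limit-rps multiplied by a burst multiplier (5 by default) before rejecting requests, and rejected requests receive status 503 by default. Both defaults are assumptions to verify against the controller version in use. The arithmetic is sketched below, with `burst_limit` as a hypothetical helper name.

```shell
# Compute the burst allowance for a given limit-rps setting.
# $1 = limit-rps value, $2 = burst multiplier (assumed default: 5)
burst_limit() {
  echo $(( $1 * ${2:-5} ))
}

burst_limit 1
```

With limit-rps set to "1" as above, this suggests that a handful of quick refreshes succeed before the rejections start, which matches the need to refresh repeatedly.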

For other features, learn the corresponding nginx concept first and then look up the equivalent ingress setting.

annotations: general configuration, https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/annotations/

rules: the concrete request matching rules, https://kubernetes.github.io/ingress-nginx/user-guide/ingress-path-matching/

Example: creating a mysql pod

Write the yaml file my-mysql.yaml:

apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod
spec:
  containers:
    - name: mysql
      image: mysql:5.7
      env:
        - name: MYSQL_ROOT_PASSWORD
          value: "123456"
      ports:
        - containerPort: 3306
      volumeMounts:
        - mountPath: /var/lib/mysql
          name: data-volume
  volumes:
    - name: data-volume
      hostPath:
        path: /home/mysql/data
        type: DirectoryOrCreate

This file configures a volume mount: the host directory /home/mysql/data is mounted at /var/lib/mysql inside the container.

[root@k8s-master k8s]# kubectl apply -f my-mysql.yaml

pod/mysql-pod created

[root@k8s-master k8s]# kubectl get pod

NAME READY STATUS RESTARTS AGE

mysql-pod 1/1 Running 0 39s

web-nginx-5f989946d-6krjl 1/1 Running 0 140m

web-nginx-5f989946d-dhstb 1/1 Running 0 140m

web-tomcat-758dc4ddf6-bgk2b 1/1 Running 0 140m

web-tomcat-758dc4ddf6-f2tjh 1/1 Running 0 140m

The pod started successfully. Now enter the container:

[root@k8s-master k8s]# kubectl exec -it mysql-pod -- /bin/bash

root@mysql-pod:/#

Connect to the database. The user name is root and the password is 123456:

root@mysql-pod:/# mysql -uroot -p123456

mysql: [Warning] Using a password on the command line interface can be insecure.

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2

Server version: 5.7.36 MySQL Community Server (GPL)

Copyright (c) 2000, 2021, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

+--------------------+

4 rows in set (0.00 sec)

The database is working normally.

Now check which node mysql-pod was deployed on:

[root@k8s-master home]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mysql-pod 1/1 Running 0 4m25s 192.169.169.149 k8s-node2 <none> <none>

web-nginx-5f989946d-6krjl 1/1 Running 0 144m 192.169.36.93 k8s-node1 <none> <none>

web-nginx-5f989946d-dhstb 1/1 Running 0 144m 192.169.169.147 k8s-node2 <none> <none>

web-tomcat-758dc4ddf6-bgk2b 1/1 Running 0 144m 192.169.36.94 k8s-node1 <none> <none>

web-tomcat-758dc4ddf6-f2tjh 1/1 Running 0 144m 192.169.169.148 k8s-node2 <none> <none>

It is deployed on node2. Log in to the node2 server and check whether a mysql directory has appeared under /home:

[root@k8s-node2 ~]# cd /home

[root@k8s-node2 home]# ll

total 4

drwxr-xr-x 3 root root 4096 Mar 25 19:39 mysql

The mysql directory is indeed there.

That completes the mysql example.

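When running mysql in k8s, how do we make sure there is a single, consistent copy of the persisted data?

In our example the mysql pod is deployed on node2, so its data is on node2 as well. If the pod is recreated and ends up on node1, the new pod no longer sees that data, so it is effectively lost.

This is where k8s volumes come in.

The hostPath in our yaml file is one kind of volume.

A hostPath volume mounts a file or directory from the host node into the pod. It is intended only for development and testing on single-node clusters and is not suitable for multi-node clusters. For example, when a pod is recreated it may be scheduled to a different node than before, leaving the new pod without its data.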

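Values of the hostPath type field:

| type | Behavior |
|---|---|
| DirectoryOrCreate | Creates the directory automatically if it does not exist. |
| Directory | Mounts an existing directory. Fails if it does not exist. |
| FileOrCreate | Creates the file automatically if it does not exist. Parent directories are not created, so the path to the file must already exist. |
| File | Mounts an existing file. Fails if it does not exist. |
| Socket | Mounts a UNIX socket, for example /var/run/docker.sock. |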

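Because of this mismatch between a cluster and a single machine, mounting node-local data is of little use in k8s. It mainly suits logs, single-node projects and daemon processes.

If the host directory is cleared, the corresponding data is cleared with it.

Next, we look at volumes in detail.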