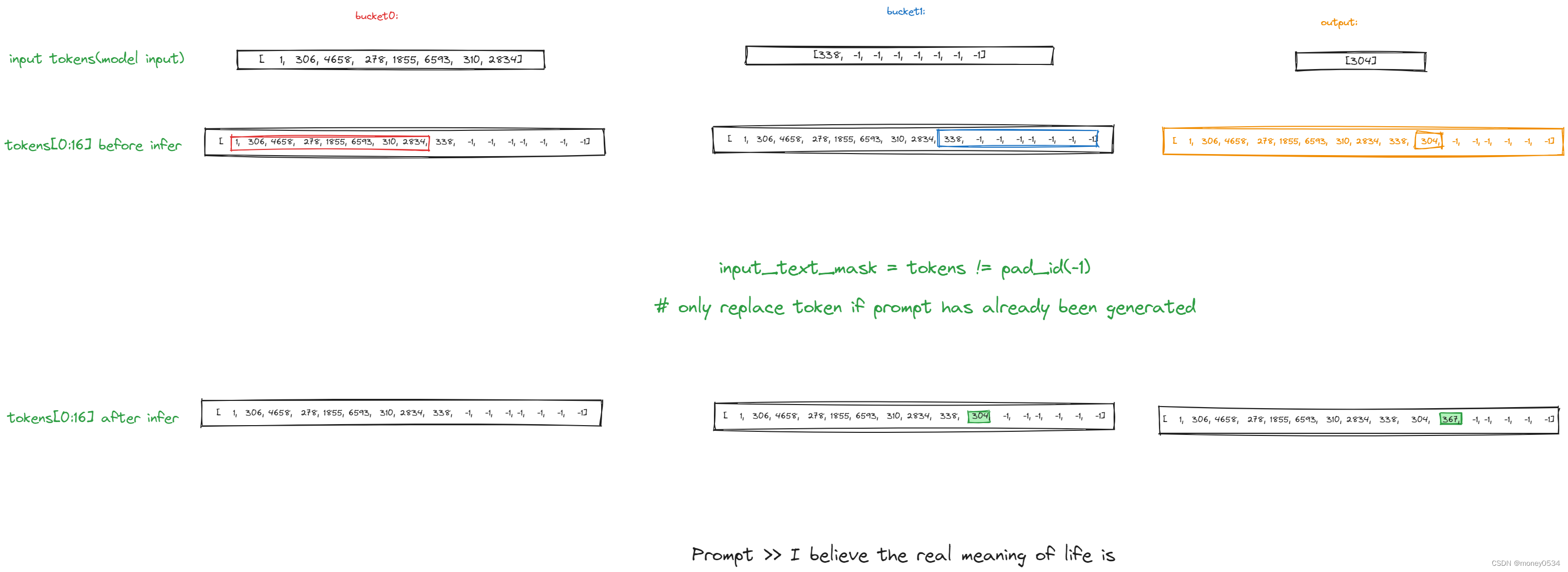

数据更新:

python脚本(注意分支):

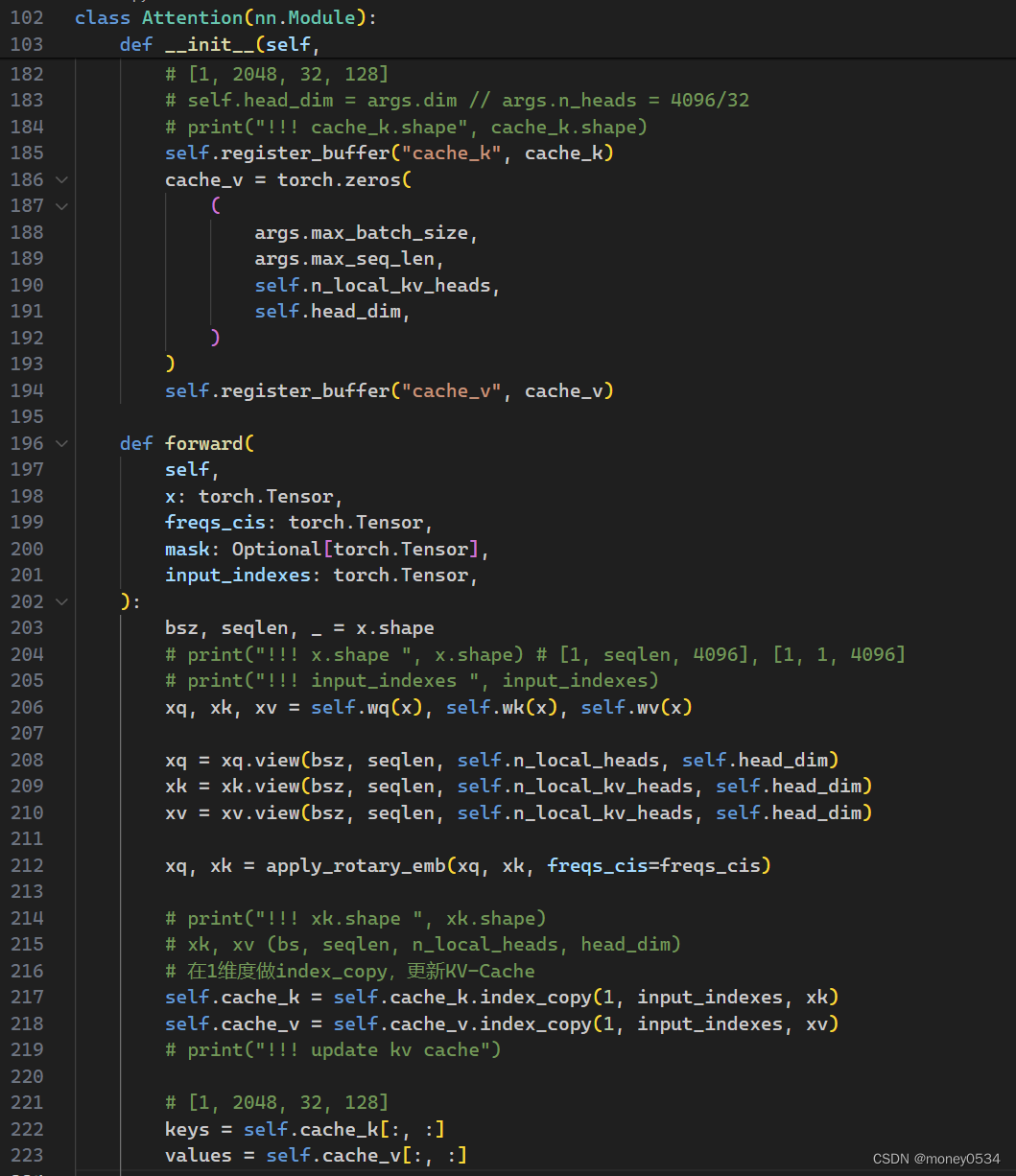

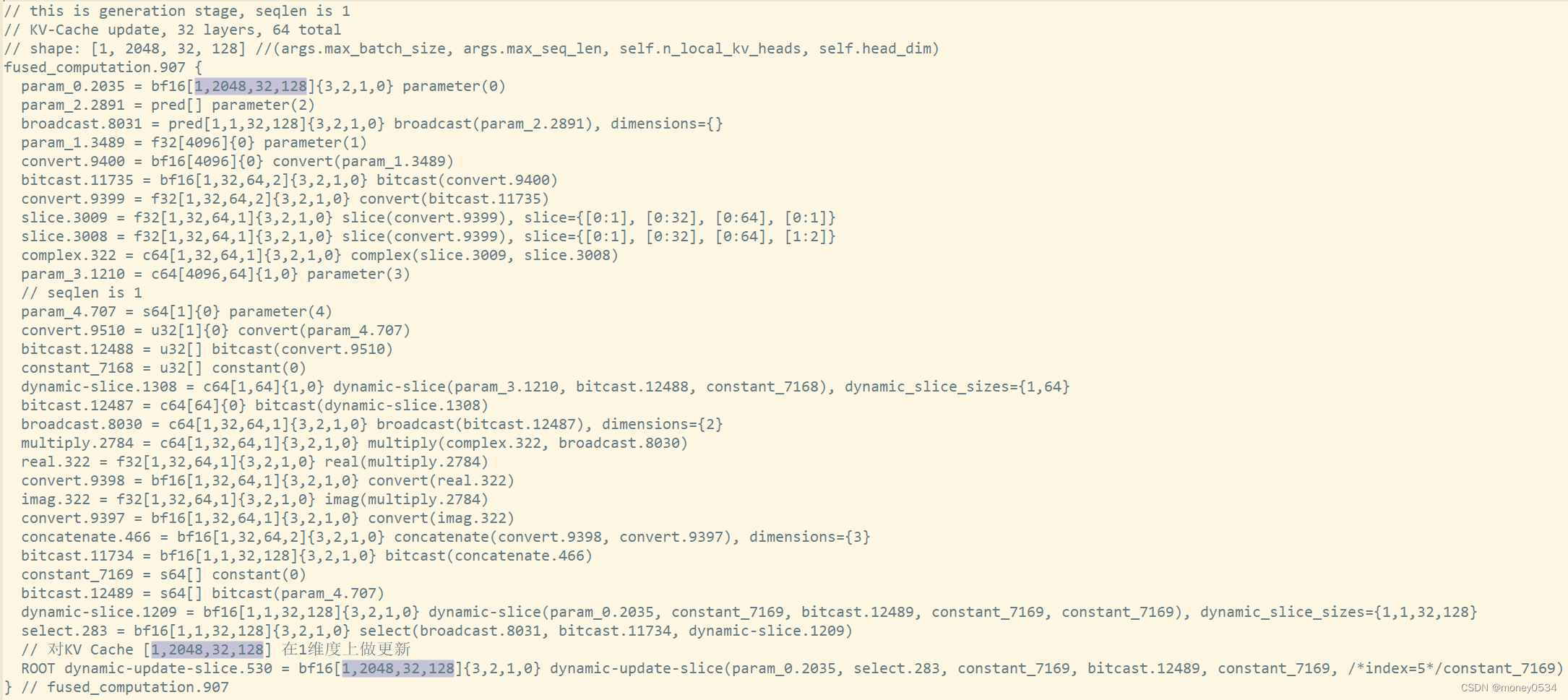

HLO图分析KV-Cache更新:

KV-Cache作为HLO图的输入输出:bf16[1,2048,32,128]{3,2,1,0} 128x, 2x32x2

参考链接

notes for transformer introduction by an Italian teaching in China: Attention is all you need (Transformer) - Model explanation (including math), Inference and Training

notes for and LLaMa: LLaMA explained: KV-Cache, Rotary Positional Embedding, RMS Norm, Grouped Query Attention, SwiGLU

github 使用XLA_GPU,选择分支llama2-google-next-inference

pytorch.org: path-achieve-low-inference-latency