I. Contents

1. Framework

2. Getting started

3. Installation

4. Related documentation and examples

II. Implementation

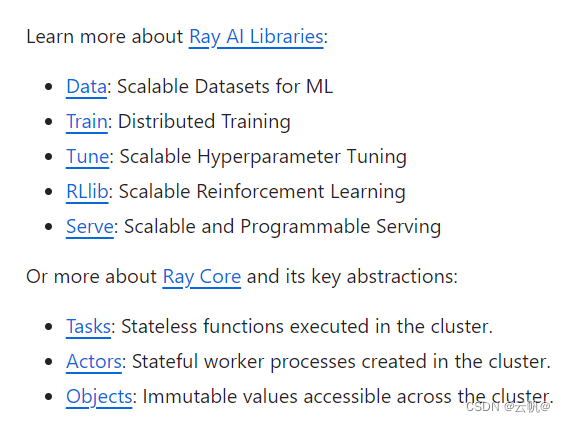

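1. Framework: Ray. Ray is a distributed computing framework. In this example it reserves multiple GPUs for a single deployment so that one model can be split across them, which enables distributed inference, training, and similar workloads.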
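A rough estimate shows why splitting a model can be necessary. The sketch below assumes a hypothetical 13-billion-parameter model stored as 16-bit floats (2 bytes per parameter) and counts the weights only; activations and the generation cache need additional memory. The function names and the example numbers are illustrative assumptions and are not part of Ray.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory needed for the model weights alone, in GiB."""
    return num_params * bytes_per_param / 1024**3


def per_gpu_gib(num_params: float, num_gpus: int, bytes_per_param: int = 2) -> float:
    """Weight memory per GPU if the weights are split evenly (an idealization)."""
    return weight_memory_gib(num_params, bytes_per_param) / num_gpus


if __name__ == "__main__":
    # Assumed example: 13e9 parameters in float16, split across 2 GPUs.
    print(f"total weights: {weight_memory_gib(13e9):.1f} GiB")
    print(f"per GPU (2 GPUs): {per_gpu_gib(13e9, 2):.1f} GiB")
```

Under these assumptions the weights alone exceed the memory of a single 24 GB card, while half of them fit comfortably on each of two cards.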

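2. Getting started

Example: use Ray (through Ray Serve) to deploy a distributed inference service.

Reference: https://zhuanlan.zhihu.com/p/647973148?utm_id=0

Install the packages:

    pip install ray
    pip install "ray[serve]"
    pip install pandas transformers torch accelerate requests

The last command covers the other packages that the code and test below import; accelerate is required by device_map="auto".

File name: test.py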

    import pandas as pd
    import ray
    from ray import serve
    from starlette.requests import Request


    # Reserve two GPUs for this deployment; device_map="auto" splits the model across them for inference.
    @serve.deployment(ray_actor_options={"num_gpus": 2})
    class PredictDeployment:
        def __init__(self, model_id: str):
            # Imported here so the heavy libraries load inside the replica process.
            from transformers import AutoModelForCausalLM, AutoTokenizer
            import torch

            self.model = AutoModelForCausalLM.from_pretrained(
                model_id,
                torch_dtype=torch.float16,
                device_map="auto",
            )
            self.tokenizer = AutoTokenizer.from_pretrained(model_id)

        def generate(self, text: str) -> pd.DataFrame:
            input_ids = self.tokenizer(text, return_tensors="pt").input_ids.to(
                self.model.device
            )
            gen_tokens = self.model.generate(
                input_ids,
                do_sample=True,  # temperature only takes effect when sampling is enabled
                temperature=0.9,
                max_length=200,
            )
            return pd.DataFrame(
                self.tokenizer.batch_decode(gen_tokens), columns=["responses"]
            )

        # Asynchronous HTTP handler.
        async def __call__(self, http_request: Request) -> list:
            # The client posts a JSON list such as [{"text": "..."}].
            json_request: list = await http_request.json()
            print(json_request)
            prompt = json_request[0]
            # Return plain records so the response is JSON-serializable.
            return self.generate(prompt["text"]).to_dict(orient="records")


    deployment = PredictDeployment.bind(model_id="huggyllama/llama-13b")
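The handler above reads only the first item of the posted list. One possible extension, sketched here as an assumption rather than as part of the original example, is to collect every prompt in the request, accepting either a single string or a list of strings under the "text" key. The helper name extract_prompts is hypothetical.

```python
from typing import Any, Dict, List


def extract_prompts(json_request: List[Dict[str, Any]]) -> List[str]:
    """Flatten a posted list like [{"text": "a"}, {"text": ["b", "c"]}] into prompts."""
    prompts: List[str] = []
    for item in json_request:
        text = item["text"]
        if isinstance(text, list):
            prompts.extend(text)  # several prompts in one object
        else:
            prompts.append(text)  # a single prompt string
    return prompts


if __name__ == "__main__":
    print(extract_prompts([{"text": "Funniest joke ever:"}, {"text": ["a", "b"]}]))
```

Passing several prompts to the tokenizer in one call would also need padding to be configured, which this sketch does not cover.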

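Run:

    serve run test:deployment

serve run keeps the terminal occupied while the service is up; stop it with Ctrl+C. To shut down Serve from another terminal, use:

    serve shutdown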

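Test (run from a second terminal while the service is up):

    import requests

    sample_input = {"text": "Funniest joke ever:"}
    # The service expects a JSON list of prompt objects.
    output = requests.post("http://localhost:8000/", json=[sample_input]).json()
    print(output)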
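The same request can also be sent with only the Python standard library. This is a minimal sketch: the helper names build_payload and query are hypothetical, the URL is the one used in the test above, and the timeout value is an assumption chosen because generation with a large model can be slow.

```python
import json
import urllib.request
from typing import Any, List


def build_payload(prompts: List[str]) -> bytes:
    """Encode prompts as the JSON list of {"text": ...} objects the service reads."""
    return json.dumps([{"text": p} for p in prompts]).encode("utf-8")


def query(prompts: List[str], url: str = "http://localhost:8000/",
          timeout: float = 300.0) -> Any:
    """POST the prompts to the service and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=build_payload(prompts),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Requires the service from test.py to be running locally.
    print(query(["Funniest joke ever:"]))
```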

3. Installation

    pip install ray
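After installing, a quick check can confirm that the modules used by the example can be found. The sketch below uses only the standard library. The module list is an assumption based on the imports in test.py and its test script, plus accelerate, which transformers needs for device_map="auto"; the helper name check_modules is hypothetical.

```python
import importlib.util
from typing import Dict, Iterable

# Assumed list of top-level modules used by the example in this document.
REQUIRED = ["ray", "pandas", "starlette", "transformers", "torch", "accelerate", "requests"]


def check_modules(names: Iterable[str]) -> Dict[str, bool]:
    """Map each top-level module name to whether it can be located for import."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules(REQUIRED).items():
        print(f"{name}: {'ok' if found else 'MISSING'}")
```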

Dependencies: https://github.com/ray-project/ray

4. Related documentation and examples

Repository: https://github.com/ray-project/ray

Documentation and examples: https://docs.ray.io/en/latest/serve/index.html