Graph-based learning

(This notebook is partly based on a workshop by Robert Peach and Mauricio Barahona: https://github.com/peach-lucien/networks_workshop/tree/main)

Here, we will go through an analysis of the Caenorhabditis elegans (C. elgans) connectome. C. elegans is the only organism for which the wiring diagram of its complete nervous system has been mapped with reasonable accuracy at the cellular level. Despite this structural information, which has been available for decades, it still proves difficult to understand the system, e.g, resolving the functional involvement of specific neurons in defined behavioural responses.

We will use data that Robert Peach has reconstructed from the following article with the inclusion of muscles https://www.nature.com/articles/nature24056. The connectome is composed of directed connections from one neuron to another neuron in accordance with their biological influence.

Each node falls into one of four categories:

- Sensory neurons (S)

- Inter neurons (I)

- Motor neurons (M)

- Muscles (U)

Therefore, we can model the nematode nervous system as a directed network whose nodes include neurons and muscles, and whose links represent the electrical and chemical synaptic connections between them, including neuromuscular junctions. The weights of the edges correspond to the number of synaptic connections between a pair of neurons.

import matplotlib

import matplotlib.pyplot as plt

import numpy as np

import numpy.testing as npt

Pre-processing C. Elegans network data

We are provided with an adjacency matrix A raw A_\text{raw} Araw of the C. Elgans network and metadata for the nodes (including neuron type, name and neuron class).

import pandas as pd

from google.colab import files

# upload data: select `celegans_adjacency_transposed.csv` and `celegans_nodedata.csv` for uploading.

upload = files.upload()

# import adjacency matrix

A_raw = pd.read_csv("celegans_adjacency_transposed.csv", index_col=0)

A_raw = A_raw.to_numpy()

# import node data

node_data = pd.read_csv("celegans_nodedata.csv", index_col=0)

<input type="file" id="files-563ef582-60ea-4369-bc5e-538e50630b68" name="files[]" multiple disabled

style="border:none" />

<output id="result-563ef582-60ea-4369-bc5e-538e50630b68">

Upload widget is only available when the cell has been executed in the

current browser session. Please rerun this cell to enable.

</output>

<script>// Copyright 2017 Google LLC

//

// Licensed under the Apache License, Version 2.0 (the “License”);

// you may not use this file except in compliance with the License.

// You may obtain a copy of the License at

//

// http://www.apache.org/licenses/LICENSE-2.0

//

// Unless required by applicable law or agreed to in writing, software

// distributed under the License is distributed on an “AS IS” BASIS,

// WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

// See the License for the specific language governing permissions and

// limitations under the License.

/**

- @fileoverview Helpers for google.colab Python module.

*/

(function(scope) {

function span(text, styleAttributes = {}) {

const element = document.createElement(‘span’);

element.textContent = text;

for (const key of Object.keys(styleAttributes)) {

element.style[key] = styleAttributes[key];

}

return element;

}

// Max number of bytes which will be uploaded at a time.

const MAX_PAYLOAD_SIZE = 100 * 1024;

function _uploadFiles(inputId, outputId) {

const steps = uploadFilesStep(inputId, outputId);

const outputElement = document.getElementById(outputId);

// Cache steps on the outputElement to make it available for the next call

// to uploadFilesContinue from Python.

outputElement.steps = steps;

return _uploadFilesContinue(outputId);

}

// This is roughly an async generator (not supported in the browser yet),

// where there are multiple asynchronous steps and the Python side is going

// to poll for completion of each step.

// This uses a Promise to block the python side on completion of each step,

// then passes the result of the previous step as the input to the next step.

function _uploadFilesContinue(outputId) {

const outputElement = document.getElementById(outputId);

const steps = outputElement.steps;

const next = steps.next(outputElement.lastPromiseValue);

return Promise.resolve(next.value.promise).then((value) => {

// Cache the last promise value to make it available to the next

// step of the generator.

outputElement.lastPromiseValue = value;

return next.value.response;

});

}

/**

- Generator function which is called between each async step of the upload

- process.

- @param {string} inputId Element ID of the input file picker element.

- @param {string} outputId Element ID of the output display.

- @return {!Iterable<!Object>} Iterable of next steps.

/

function uploadFilesStep(inputId, outputId) {

const inputElement = document.getElementById(inputId);

inputElement.disabled = false;

const outputElement = document.getElementById(outputId);

outputElement.innerHTML = ‘’;

const pickedPromise = new Promise((resolve) => {

inputElement.addEventListener(‘change’, (e) => {

resolve(e.target.files);

});

});

const cancel = document.createElement(‘button’);

inputElement.parentElement.appendChild(cancel);

cancel.textContent = ‘Cancel upload’;

const cancelPromise = new Promise((resolve) => {

cancel.onclick = () => {

resolve(null);

};

});

// Wait for the user to pick the files.

const files = yield {

promise: Promise.race([pickedPromise, cancelPromise]),

response: {

action: ‘starting’,

}

};

cancel.remove();

// Disable the input element since further picks are not allowed.

inputElement.disabled = true;

if (!files) {

return {

response: {

action: ‘complete’,

}

};

}

for (const file of files) {

const li = document.createElement(‘li’);

li.append(span(file.name, {fontWeight: ‘bold’}));

li.append(span(

(${file.type || 'n/a'}) - ${file.size} bytes, +

last modified: ${ file.lastModifiedDate ? file.lastModifiedDate.toLocaleDateString() : 'n/a'} - ));

const percent = span(‘0% done’);

li.appendChild(percent);

outputElement.appendChild(li);

const fileDataPromise = new Promise((resolve) => {

const reader = new FileReader();

reader.onload = (e) => {

resolve(e.target.result);

};

reader.readAsArrayBuffer(file);

});

// Wait for the data to be ready.

let fileData = yield {

promise: fileDataPromise,

response: {

action: 'continue',

}

};

// Use a chunked sending to avoid message size limits. See b/62115660.

let position = 0;

do {

const length = Math.min(fileData.byteLength - position, MAX_PAYLOAD_SIZE);

const chunk = new Uint8Array(fileData, position, length);

position += length;

const base64 = btoa(String.fromCharCode.apply(null, chunk));

yield {

response: {

action: 'append',

file: file.name,

data: base64,

},

};

let percentDone = fileData.byteLength === 0 ?

100 :

Math.round((position / fileData.byteLength) * 100);

percent.textContent = `${percentDone}% done`;

} while (position < fileData.byteLength);

}

// All done.

yield {

response: {

action: ‘complete’,

}

};

}

scope.google = scope.google || {};

scope.google.colab = scope.google.colab || {};

scope.google.colab._files = {

_uploadFiles,

_uploadFilesContinue,

};

})(self);

Saving celegans_adjacency_transposed.csv to celegans_adjacency_transposed.csv

Saving celegans_nodedata.csv to celegans_nodedata.csv

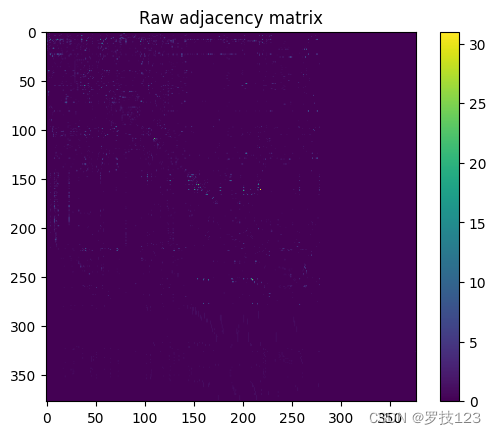

We can first plot A raw A_\text{raw} Araw as a heatmap and observe that the matrix is very sparse with values ranging from 0 to over 30.

fig, ax = plt.subplots(1)

im = ax.imshow(A_raw)

plt.colorbar(im)

ax.set(title="Raw adjacency matrix")

plt.show()

Arguably, this visualisation of the adjacency matrix is not very illuminating. We will discuss better ways to visualise our data below. Let’s first review the node metadata.

# look up node data

node_data.head()

| type | name | neuron_class | |

|---|---|---|---|

| 0 | I | ADAR | ADA |

| 1 | I | ADAL | ADA |

| 2 | S | ADFL | ADF |

| 3 | S | ASHL | ASH |

| 4 | I | AVDR | AVD |

</button>

<script>

const buttonEl =

document.querySelector('#df-8610b87f-def7-4b8b-be64-008cdb886b7b button.colab-df-convert');

buttonEl.style.display =

google.colab.kernel.accessAllowed ? 'block' : 'none';

async function convertToInteractive(key) {

const element = document.querySelector('#df-8610b87f-def7-4b8b-be64-008cdb886b7b');

const dataTable =

await google.colab.kernel.invokeFunction('convertToInteractive',

[key], {});

if (!dataTable) return;

const docLinkHtml = 'Like what you see? Visit the ' +

'<a target="_blank" href=https://colab.research.google.com/notebooks/data_table.ipynb>data table notebook</a>'

+ ' to learn more about interactive tables.';

element.innerHTML = '';

dataTable['output_type'] = 'display_data';

await google.colab.output.renderOutput(dataTable, element);

const docLink = document.createElement('div');

docLink.innerHTML = docLinkHtml;

element.appendChild(docLink);

}

</script>

<svg xmlns=“http://www.w3.org/2000/svg” height="24px"viewBox=“0 0 24 24”

width=“24px”>

We encode the node types with numerical values.

# transform node type into integers

type_to_value = {"S" : 0, "I" : 1, "M" : 2, "U" : 3}

value_to_type = {0 : "S", 1: "I", 2 : "M", 3 : "U"}

node_type = node_data["type"].map(type_to_value)

node_type = np.asarray(node_type)

Let us check some basic properties of the graph, in particular whether is undirected and unweighted.

# check properties of graph

print("Graph is undirected:", np.array_equal(A_raw,A_raw.T))

print("Graph is unweighted:", np.array_equal(np.unique(A_raw),np.arange(2)))

Graph is undirected: False

Graph is unweighted: False

To simply our analysis below, we go from the raw adjacency matrix A raw A_\text{raw} Araw to the adjacency matrix A A A of an undirected and unweighted graph, where A i j = A j i = 1 A_{ij}=A_{ji}=1 Aij=Aji=1 if either A raw , i j > 0 A_{\text{raw}, ij}>0 Araw,ij>0 or A raw , j i > 0 A_{\text{raw}, ji}>0 Araw,ji>0 and A i j = A j i = 0 A_{ij}=A_{ji}=0 Aij=Aji=0 otherwise.

## EDIT THIS CELL

# we go to undirected unweighted graph

A = np.zeros_like(A_raw) # <-- SOLUTION

A[(A_raw + A_raw.T) > 0] = 1 # <-- SOLUTION

# double check if graph is now undirected and unweighted

print("Graph is undirected:", np.array_equal(A,A.T))

print("Graph is unweighted:", np.array_equal(np.unique(A),np.arange(2)))

Graph is undirected: True

Graph is unweighted: True

Degree distribution of undirected C. Elegans network

Let us now compute the number of nodes and eges of our undirected unweighted graph given by A A A.

## EDIT THIS CELL

# compute number of nodes

N = A_raw.shape[0] # <-- SOLUTION

print("Number of nodes:", N)

# compute number of edges

M = int(A.sum()/2) # <-- SOLUTION

print("Number of edges:", M)

Number of nodes: 377

Number of edges: 2850

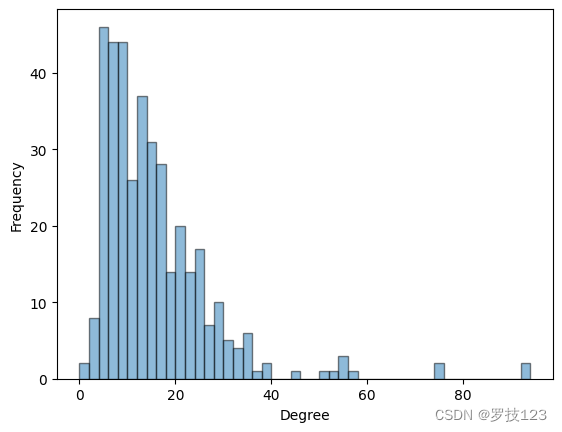

As a first step of our analysis we look at the degree distribution d = A 1 \boldsymbol{d}=A\boldsymbol{1} d=A1.

## EDIT THIS CELL

# compute degrees

d = A.sum(axis=0) # <-- SOLUTION

# visualise degree distributions

fig, ax = plt.subplots(1)

bins = np.arange(0,d.max()+2,2)

ax.hist(d, bins=bins,alpha=0.5, edgecolor='black')

ax.set(xlabel="Degree",ylabel="Frequency")

plt.show()

Let us look up the names and types two highest degree nodes.

## EDIT THIS CELL

# check which node has highest degree

node1 = node_data.iloc[np.argsort(-d)[0]] # <-- SOLUTION

print(f"Highest degree neuron is {node1['name']} of type {node1['type']}.") # <-- SOLUTION

# check which node has second highest degree

node2 = node_data.iloc[np.argsort(-d)[1]] # <-- SOLUTION

print(f"Second highest degree neuron is {node2['name']} of type {node2['type']}.") # <-- SOLUTION

Highest degree neuron is AVAR of type I.

Second highest degree neuron is AVAL of type I.

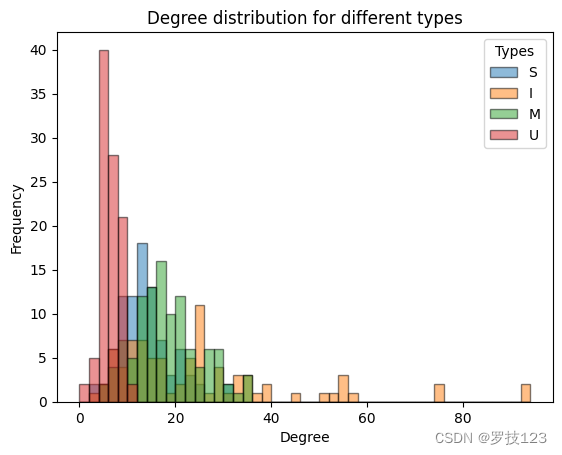

We notice that the two highest degree nodes are both of the same type inter neuron type (I). This suggests that it will be instructive to plot the degree distribution for the four different types of nodes.

# visualise degree distributions for the four different types

fig, ax = plt.subplots(1)

bins = np.arange(0,d.max()+2,2)

ax.hist(d[node_type==0], bins=bins,alpha=0.5, edgecolor='black', label=r"S")

ax.hist(d[node_type==1], bins=bins,alpha=0.5, edgecolor='black', label=r"I")

ax.hist(d[node_type==2], bins=bins,alpha=0.5, edgecolor='black', label=r"M")

ax.hist(d[node_type==3], bins=bins,alpha=0.5, edgecolor='black', label=r"U")

ax.set(xlabel="Degree",ylabel="Frequency",title="Degree distribution for different types")

ax.legend(title="Types")

plt.show()

From the degree distrubtions we can observe that the inter neurons (I) seem to have a higher degree, i.e., they are most connected, and the muscles (U) are the least connected in the nervous system.

We will define node colours consistent with this plot to use for later.

# we define node colours consistent with the plot above

cmap = matplotlib.colormaps.get_cmap('tab10')

color_type = [cmap(i) for i in node_type]

Spectral analysis of symmetric normalised Laplacian

We focus our analysis on the spectrum of the normalised graph Laplacian L sym L_\text{sym} Lsym, which is given by:

L sym = D − 1 / 2 L D − 1 / 2 , L_\text{sym}=D^{-1/2} L D^{-1/2}, Lsym=D−1/2LD−1/2,

where L = D − A L=D-A L=D−A is the combinatorial Laplacian and D D D the diagonal degree matrix.

## EDIT THIS CELL

# define diagonal degree matrix

D = np.diag(d) # <-- SOLUTION

# compute combinatorial Laplacian

L = D - A # <-- SOLUTION

# compute square root of inverse diagonal degree matrix

D_sqrt_inv = np.diag(1/np.sqrt(d)) # <-- SOLUTION

# compute symmetric normalised Laplacian

L_s = D_sqrt_inv @ L @ D_sqrt_inv # <-- SOLUTION

We are now interested in the spectral decomposition of L sym L_\text{sym} Lsym. We know that the first (smallest) eigenvalue is λ 1 = 0 \lambda_1=0 λ1=0 with corresponding eigenvector v 1 = D 1 / 2 1 \boldsymbol{v}_1=D^{1/2}\boldsymbol{1} v1=D1/21 (can you prove this?).

# the square-root of the degree vector is the zero eigenvector to eigenvalue 0

np.allclose(L_s @ np.sqrt(d),np.zeros(N))

True

To compute the full spectrum of L sym L_\text{sym} Lsym, we use the fact that it is a real symmetric matrix and sort the eigenvalues (and corresponding eigenvectors) in ascending order.

## EDIT THIS CELL

# compute eigen decomposition using the fact that

eigenvals, eigenvecs = np.linalg.eigh(L_s) # <-- SOLUTION

# sort eigenvalues and corresponding eigenvector

eigenvecs = eigenvecs.T[np.argsort(eigenvals)] # <-- SOLUTION

eigenvals = np.sort(eigenvals) # <-- SOLUTION

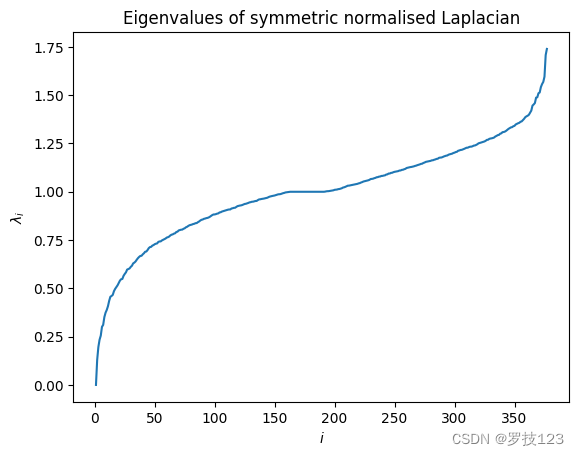

We can visualise the spectrum of L sym L_\text{sym} Lsym.

# plot eigenvalues

fig, ax = plt.subplots(1)

ax.plot(np.arange(1,N+1),eigenvals)

ax.set(title="Eigenvalues of symmetric normalised Laplacian",xlabel=r"$i$",ylabel=r"$\lambda_i$")

plt.show()

We confirm that the first eigenvalue is λ 1 = 0 \lambda_1=0 λ1=0, but we are more interested in the second eigenvalue λ 2 > 0 \lambda_2>0 λ2>0, which is also called the algebraic connectivity.

## EDIT THIS CELL

# print first and second eigenvalues

print("First eigenvalue:", round(eigenvals[0],2)) # <-- SOLUTION

print("Second eigenvalue:", round(eigenvals[1],2)) # <-- SOLUTION

First eigenvalue: -0.0

Second eigenvalue: 0.13

As λ 2 \lambda_2 λ2, is small we expect that the graph has a good bipartition. We will study this later.

Graph visualisation using Laplacian eigenmaps

But first we will use the second (Fiedler) eigenvector v 2 \boldsymbol{v}_2 v2 and third eigengenvector v 3 \boldsymbol{v}_3 v3 to visualise the network. Using these two Laplacian eigenvectors (also called Laplacian eigenmaps) as x \boldsymbol{x} x and y \boldsymbol{y} y coordinates of the nodes gives us a good two-dimensional representation of the graph (a spectral embedding). We can improve the visualisation when normalising the coordinates such that x = D − 1 / 2 v 2 \boldsymbol{x}=D^{-1/2}\boldsymbol{v}_2 x=D−1/2v2 and y = D − 1 / 2 v 3 \boldsymbol{y}=D^{-1/2}\boldsymbol{v}_3 y=D−1/2v3.

## EDIT THIS CELL

# get second (Fiedler) and third eigenvectors

v2 = eigenvecs[1] # <-- SOLUTION

v3 = eigenvecs[2] # <-- SOLUTION

# normalise coordinates

x = D_sqrt_inv @ v2 # <-- SOLUTION

y = D_sqrt_inv @ v3 # <-- SOLUTION

We can simply plot the graph by drawing lines between the embeddings of two connected nodes. Additionally, we can scale the node size dependent on the degree.

# plot

fig, ax = plt.subplots(1)

# plot edges

for i in range(N):

for j in range(i+1,N):

if A[i,j] > 0:

ax.plot([x[i],x[j]],[y[i],y[j]], color="black", alpha=0.5, linewidth=0.3)

# plot nodes

scatter = ax.scatter(x,y,s=0.2*d, c=color_type, zorder=10)

# create legend for node types

types_legend = [plt.Line2D([0], [0], marker='o', color='w', label=label, markerfacecolor=cmap(value)) for value, label in value_to_type.items()]

plt.legend(handles=types_legend, title='Types')

# set labels

ax.set(xlabel="Normalised Fiedler eigenvector", ylabel="Normalised third eigenvector")

plt.show()

We observe that the muscles (U) are located in the periphery of the network, while the most connected inter neurons (I) are located in the center.

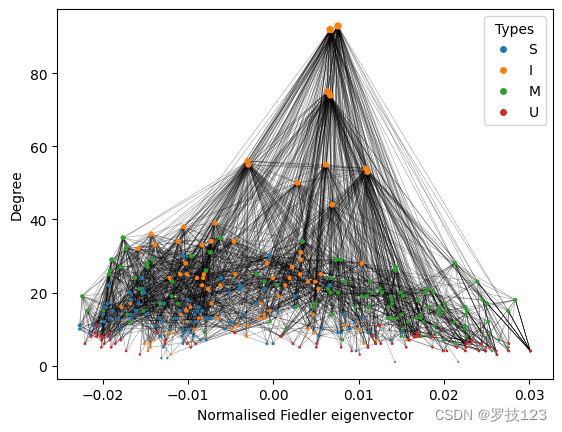

Another way to visualise the graph is to directly use the node degree d \boldsymbol{d} d as the y-coordinate, i.e., y = d \boldsymbol{y}=\boldsymbol{d} y=d.

# plot

fig, ax = plt.subplots(1)

# plot edges

for i in range(N):

for j in range(i+1,N):

if A[i,j] > 0:

ax.plot([x[i],x[j]],[d[i],d[j]], color="black", alpha=0.5, linewidth=0.3)

# plot nodes

scatter = ax.scatter(x,d,s=0.2*d, c=color_type, zorder=10)

# plot legend

plt.legend(handles=types_legend, title='Types')

# plot labels

ax.set(xlabel="Normalised Fiedler eigenvector", ylabel="Degree")

plt.show()

This visualisation gives a more decluttered picture and is very useful to distinguish the different node types in the network. For the rest of the notebook, we will thus use this visualisation.

As you have learnt by now, graph visualisation is a very interesting topic in itself and there are many algorithms to plot various graphs, including very large ones.

Questions:

- Why is graph visualisation not unique?

- Can you come up with alternative graph visualisations?

Bipartitioning using Fiedler eigenvector

We will now use the second (Fiedler) eigenvector v 2 \boldsymbol{v}_2 v2 to bipartition the network into two communities, given by the sign of v 2 \boldsymbol{v}_2 v2.

## EDIT THIS CELL

# bipartition is given by sign of Fiedler eigenvector

bipartition = np.asarray(v2>0, dtype=int) # <-- SOLUTION

As the algebraic connectivity λ 2 \lambda_2 λ2 is small, we expect that the bipartition is very balanced. To confirm this we compute the number of nodes and the total degree of both communities.

## EDIT THIS CELL

# compute statistics for bipartition

print("Size of first community:", np.sum(bipartition==0)) # <-- SOLUTION

print("Total degree of first community:", np.sum(d[bipartition==0])) # <-- SOLUTION

print("\nSize of second community:", np.sum(bipartition==1)) # <-- SOLUTION

print("Total degree of second community:", np.sum(d[bipartition==1])) # <-- SOLUTION

Size of first community: 181

Total degree of first community: 2913

Size of second community: 196

Total degree of second community: 2787

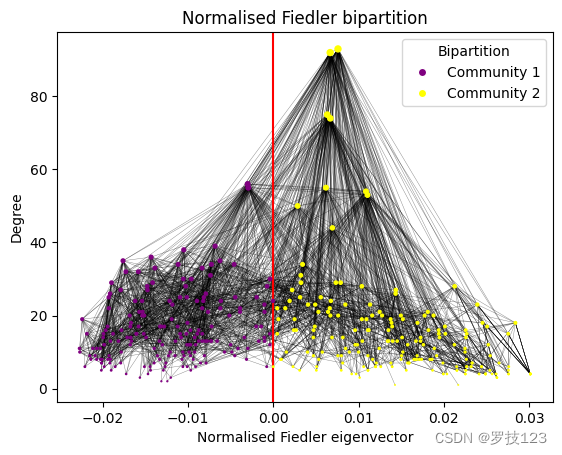

Indeed, we find that the communities of the bipartition contain a very similar number of nodes and also have a similar total degree. It is illustrative to visualise the bipartition in the network.

# use different colours for bipartition

cmap_binary = {0 : "purple", 1 : "yellow"}

color_bipartition = [cmap_binary[i] for i in bipartition]

# plot

fig, ax = plt.subplots(1)

# plot edges

for i in range(N):

for j in range(i+1,N):

if A[i,j] > 0:

ax.plot([x[i],x[j]],[d[i],d[j]], color="black", alpha=0.5, linewidth=0.3)

# plot nodes

scatter = ax.scatter(x,d,s=0.2*d, c=color_bipartition, zorder=10)

# plot cut induced by Fiedler eigenvector

ax.axvline(x=0, color = "red")

# create legend for node types

bipartition_legend = [plt.Line2D([0], [0], marker='o', color='w', label="Community 1", markerfacecolor="purple"),

plt.Line2D([0], [0], marker='o', color='w', label="Community 2", markerfacecolor="yellow")]

plt.legend(handles=bipartition_legend, title='Bipartition')

# plot labels

ax.set(xlabel="Normalised Fiedler eigenvector", ylabel="Degree", title="Normalised Fiedler bipartition")

plt.show()

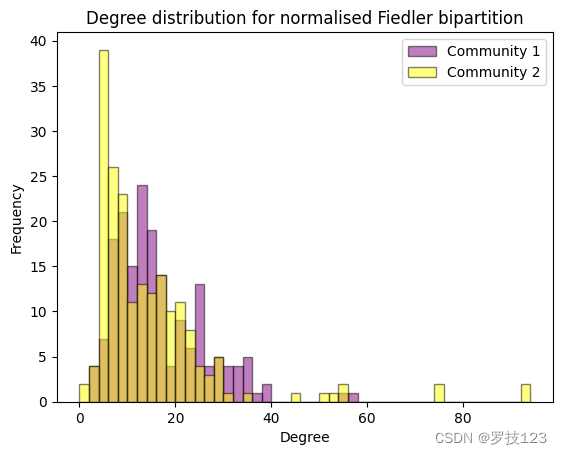

While the nodes with the highest degree belong to community 2, the degree distribution seems to be quite uniform between the two communities. We confirm this by plotting the degree distributions as histograms.

# visualise degree distributions for both communities

fig, ax = plt.subplots(1)

bins = np.arange(0,d.max()+2,2)

ax.hist(d[bipartition==0], bins=bins,alpha=0.5, edgecolor='black', label=r"Community 1", color="purple")

ax.hist(d[bipartition==1], bins=bins,alpha=0.5, edgecolor='black', label=r"Community 2", color="yellow")

ax.set(xlabel="Degree",ylabel="Frequency",title="Degree distribution for normalised Fiedler bipartition")

ax.legend()

plt.show()

Spectral clustering using more Laplacian eigenvectors

To go beyond bipartitions, we can use more Laplacian eigenvectors and apply what is called spectral clustering, where we apply k k k-means clustering to the Laplacian eigenmaps (see Section 12.4.2.1 in the lecture notes).

Code for k-means clustering from Week 7

To use k k k-means clustering we first copy-paste the code from Week 7.

def compute_within_distance(centroids, X, labels):

"""

Compute the within-cluster distance.

Args:

centroids (np.ndarray): the centroids array, with shape (k, p).

X (np.ndarray): the samples array, with shape (N, p).

labels (np.ndarray): the cluster index of each sample, with shape (N,).

Retruns:

(float): the within-cluster distance.

"""

within_distance = 0.0

k, p = centroids.shape

for l in range(len(centroids)):

centroid = centroids[l]

# Applying aggregate computations on `NaN` values

# can propagate the `NaN` to the results.

# In this case we skip the `NaN` centroid,

# which is effectively of an empty cluster.

if np.isnan(centroid).any():

continue

# Select samples belonging to label=l.

X_cluster = X[labels == l]

# EDIT THE NEXT LINES

# You need to add the `X_cluster` contribution to `within_distance`

# 1. Compute the cluster contribution.

cluster_se = (X_cluster - centroid)**2

assert cluster_se.shape == (len(X_cluster), p)

# 2. Accumulate

within_distance += np.sum(cluster_se)

return within_distance

def compute_centroids(k, X, labels):

"""

Compute the centroids of the clustered points X.

Args:

k (int): total number of clusters.

X (np.ndarray): data points, with shape (N, p)

labels (np.ndarray): cluster assignments for each sample in X, with shape (N,).

Returns:

(np.ndarray): the centroids of the k clusters, with shape (k, p).

"""

N, p = X.shape

centroids = np.zeros((k, p))

for label in range(k):

cluster_X_l = X[labels == label]

centroids[label] = cluster_X_l.mean(axis=0)

return centroids

def kmeans_assignments(centroids, X):

"""

Assign every example to the index of the closest centroid.

Args:

centroids (np.ndarray): The centroids of the k clusters, shape: (k, p).

X (np.ndarray): The samples array, shape (N, p).

Returns:

(np.ndarray): an assignment matrix to k clusters, by their indices.

"""

k, p = centroids.shape

N, _ = X.shape

# Compute distances between data points and centroids. Assumed shape: (k, N).

distances = np.vstack([np.linalg.norm(X - c, axis=1) for c in centroids])

# Note: If any centroid has NaN, the NaN value will propagate into the

# distance corresponding row, we need to skip that row next when we search

# for the closest centroid.

assert distances.shape == (k, N), f"Unexpected shape {distances.shape} != {(k, N)}"

# Assignments are computed by finding the centroid with the minimum distance

# for each sample. The np.nanargmin returns the index of the minimum values

# in `distances` scanning the rows (axis=0) for each column,

# while skipping any nan value found.

return np.nanargmin(distances, axis=0)

def kmeans_clustering(X, k,

max_iters=1000,

epsilon=0.0,

callback=None):

"""

Apply k-means clustering algorithm on the samples in `X` to generate

k clusters.

Args:

X (np.ndarray): The samples array, shape: (N, p).

k (int): The number of clusters.

max_iters (int): Maximum number of iterations.

epsilon (float): The minimum change in the within-distance to continue.

callback (Callable): a function to be called on the assignments,

the centroids, and within-distance after each iteration, default is None.

Returns:

Tuple[np.ndarray, np.ndarray]: the assignments array to k clusters with

shape (N,) and the centroids array

"""

# Step 1: randomly initialise the cluster assignments.

labels = np.random.choice(k, size=len(X), replace=True)

within_distance = np.inf

for _ in range(max_iters):

# Step 2: compute the centroids

centroids = compute_centroids(k, X, labels)

if callback:

callback(labels, centroids)

# Step 3: reassignments.

new_labels = kmeans_assignments(centroids, X)

_within_distance = compute_within_distance(centroids, X, labels)

# Step 4: repeat (2) and (3) until a termination condition.

if all(labels == new_labels) or abs(_within_distance - within_distance) < epsilon:

break

labels = new_labels

within_distance = _within_distance

return labels, centroids, within_distance

def kmeans_clustering_multi_runs(X, k, max_iters=100,

epsilon=0.0,

n_runs=100, seed=0):

"""

Perform multiple runs (with different initialisations) of kmeans algorithm

and return the best clustering using the within-cluster distance.

Args:

X (np.ndarray): The samples array, shape (N, p).

k (int): The number of clusters.

max_iters (int): Maximum iterations of kmeans algorithm.

epsilon (float): The convergence threshold of kmeans algorithm.

n_runs (int): The number of runs of kmeans with different initialisations.

seed (int): A seed value before starting the n_runs loop.

Returns:

Tuple[np.ndarray, ...]: A tuple that encapsulates (labels, centroids,

intermediate clustering, within-cluster distance) of the best clusetering

that minimises the within-cluster distance along the n_runs.

"""

# We fix the seed once before starting the n_runs.

np.random.seed(seed)

min_within_distance = np.inf

best_clustering = (None, None, None)

for _ in range(n_runs):

intermediates = []

# Our callback stores all the intermediate labels and centroids

# in case we need them for debugging and visualisations.

callback = lambda labels, centroids: intermediates.append((labels, centroids))

labels, centroids, wd = kmeans_clustering(X, k=k, max_iters=max_iters,

epsilon=epsilon,

callback=callback)

if wd < min_within_distance:

best_clustering = labels, centroids, intermediates

min_within_distance = wd

labels, centroids, intermediates = best_clustering

return labels, centroids, intermediates, wd

Using k-means for spectral clustering

Let us define a N × r N\times r N×r feature matrix X X X that contains the first r > 0 r>0 r>0 Laplacian eigenvectors (corresponding to non-zero eigenvalues) as columns. For spectral clustering, it is recommended to first obtain a normalised feature matrix Y Y Y from X X X following the famous paper by Ng, Jordan, and Weiss 2001:

Y i j = X i j ∑ j = 1 r X i j 2 , Y_{ij}=\frac{X_{ij}}{\sqrt{\sum_{j=1}^r X_{ij}^2}}, Yij=∑j=1rXij2Xij,

such that the rows of Y Y Y have unit lengths. We compute Y Y Y for r = 10 r=10 r=10.

## EDIT THIS CELL

# stack first r eigenvectors in columns to get spectral embeddings

r = 10

X = eigenvecs[1:r+1].T # <-- SOLUTION

# normalise to unit length

Y = np.diag(1/np.sqrt(np.sum(np.square(X), axis=1))) @ X # <-- SOLUTION

Treating each row of Y Y Y as a point in R r \mathbb{R}^r Rr gives us r r r-dimensional embeddings for the nodes in the network. We can then cluster the network using k k k-means. Our question is whether we can retrieve the four node types using spectral clustering, so we set k = 4 k=4 k=4.

## EDIT THIS CELL

# apply k-means clustering to spectral embeddings

spectral_partition, _, _, _ = kmeans_clustering_multi_runs(Y, 4) # <-- SOLUTION

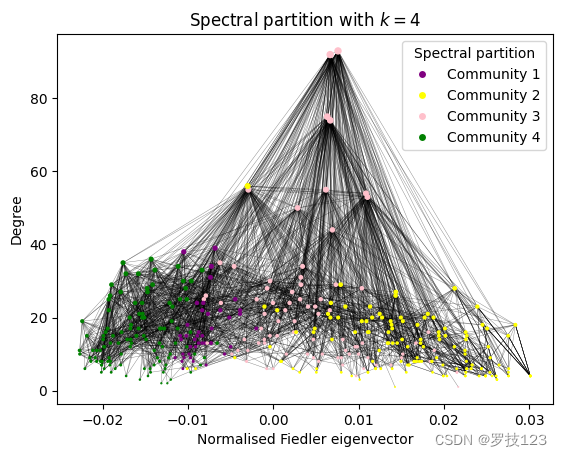

Already through visualising the partition, it seems like there is a low correspondence to the node types when compared to the visualsiations above.

# use different colours for spectral partition

cmap_partition = {0 : "purple", 1 : "yellow", 2 : "pink", 3 : "green"}

color_partition = [cmap_partition[i] for i in spectral_partition]

# plot

fig, ax = plt.subplots(1)

# plot edges

for i in range(N):

for j in range(i+1,N):

if A[i,j] > 0:

ax.plot([x[i],x[j]],[d[i],d[j]], color="black", alpha=0.5, linewidth=0.3)

# plot nodes

scatter = ax.scatter(x,d,s=0.2*d, c=color_partition, zorder=10)

# create legend for node types

partition_legend = [plt.Line2D([0], [0], marker='o', color='w', label="Community 1", markerfacecolor="purple"),

plt.Line2D([0], [0], marker='o', color='w', label="Community 2", markerfacecolor="yellow"),

plt.Line2D([0], [0], marker='o', color='w', label="Community 3", markerfacecolor="pink"),

plt.Line2D([0], [0], marker='o', color='w', label="Community 4", markerfacecolor="green")]

plt.legend(handles=partition_legend, title='Spectral partition')

# plot labels

ax.set(xlabel="Normalised Fiedler eigenvector", ylabel="Degree", title=r"Spectral partition with $k=4$")

plt.show()

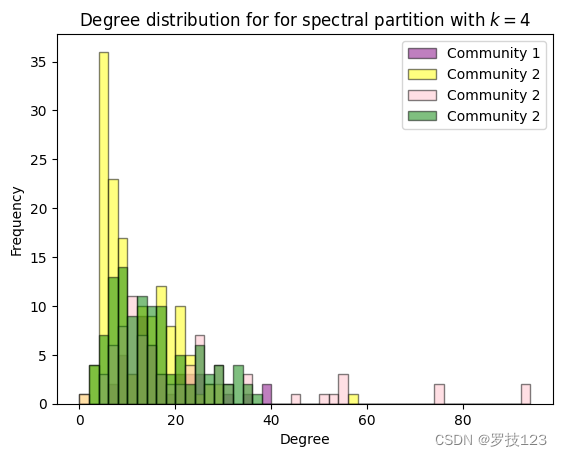

We also observe that the degree distributions of the different clusters in the spectral partition are very similar, so unlike the degree distributions according to the different node types discussed above.

# visualise degree distributions for the four spectral communities

fig, ax = plt.subplots(1)

bins = np.arange(0,d.max()+2,2)

ax.hist(d[spectral_partition==0], bins=bins,alpha=0.5, edgecolor='black', label=r"Community 1", color="purple")

ax.hist(d[spectral_partition==1], bins=bins,alpha=0.5, edgecolor='black', label=r"Community 2", color="yellow")

ax.hist(d[spectral_partition==2], bins=bins,alpha=0.5, edgecolor='black', label=r"Community 2", color="pink")

ax.hist(d[spectral_partition==3], bins=bins,alpha=0.5, edgecolor='black', label=r"Community 2", color="green")

ax.set(xlabel="Degree",ylabel="Frequency",title="Degree distribution for for spectral partition with $k=4$")

ax.legend()

plt.show()

However, it is expected that the spectral partition is not consistent with the partition of node types because it actually reflects the physical positions of the neurons in the organism (we can’t show this here). This is the case because the spectral partition is obtained from the Laplacian that encodes the connectivity of neurons in a physical organism.

Using Normalised Variation of Information for comparing partitions

We implement the Normalised Variation of Information (NVI) to compare the spectral partition with the node types (which constitutes an alternative partition of the network), see Section 10.7 in the updated lecture notes. For two partitions P 1 \mathcal{P}_1 P1 and P 2 \mathcal{P}_2 P2 you can compute the entropies E ( P 1 ) E(\mathcal{P}_1) E(P1) and E ( P 2 ) E(\mathcal{P}_2) E(P2) and the mutual information M I ( P 1 , P 2 ) MI(\mathcal{P}_1,\mathcal{P}_2) MI(P1,P2). The NVI is then given by:

N V I ( P 1 , P 2 ) = E ( P 1 ) + E ( P 2 ) − 2 M I ( P 1 , P 2 ) E ( P 1 ) + E ( P 2 ) − M I ( P 1 , P 2 ) NVI(\mathcal{P}_1,\mathcal{P}_2)=\frac{ E(\mathcal{P}_1)+E(\mathcal{P}_2)-2 MI(\mathcal{P}_1,\mathcal{P}_2)}{E(\mathcal{P}_1)+E(\mathcal{P}_2)- MI(\mathcal{P}_1,\mathcal{P}_2)} NVI(P1,P2)=E(P1)+E(P2)−MI(P1,P2)E(P1)+E(P2)−2MI(P1,P2)

The NVI is a metric on the space of partitions and ranges between 0 (partitions are the same) to 1.

## EDIT THIS CELL

def compute_NVI(partition_1,partition_2):

"""Computes NVI of two partitions.

Parameters:

partition_1 (np.array): Encoding for partition 1.

partition_2 (np.array): Encoding for partition 2.

Returns:

NVI (float): NVI of the two partitions.

"""

# check if partitions are defined on the same underlying space

assert len(partition_1) == len(partition_2), "Partition arrays must have same length"

# get number of points

N = len(partition_1)

# get communities as sets from partition 1

communities_1 = []

for index in np.unique(partition_1):

community = set(np.where(partition_1==index)[0])

communities_1.append(community)

# get communities as sets from partition 2

communities_2 = []

for index in np.unique(partition_2):

community = set(np.where(partition_2==index)[0])

communities_2.append(community)

# compute number of communities

n1 = len(communities_1)

n2 = len(communities_2)

# compute probabilities for the two partitions

p1 = np.asarray([len(community) for community in communities_1]) / N # <-- SOLUTION

p2 = np.asarray([len(community) for community in communities_2]) / N # <-- SOLUTION

# compute joint probabilities

p12 = np.zeros((n1,n2))

for i in range(n1):

for j in range(n2):

p12[i,j] = len(communities_1[i].intersection(communities_2[j]))/N # <-- SOLUTION

# compute entropy

E1 = - np.sum(p1 * np.log(p1)) # <-- SOLUTION

E2 = - np.sum(p2 * np.log(p2)) # <-- SOLUTION

# compute mutual information

MI = 0

for i in range(n1):

for j in range(n2):

if p12[i,j] > 0:

MI += p12[i,j] * np.log(p12[i,j]/(p1[i]*p2[j])) # <-- SOLUTION

# compute NVI

NVI = (E1 + E2 - 2 * MI) / (E1 + E2 - MI) # <-- SOLUTION

return NVI

You can check your implementation with the following cell.

# check for two test cases

partition_1 = np.asarray([0,0,0,1,1,1])

partition_2 = np.asarray([0,1,0,1,0,1])

npt.assert_allclose(compute_NVI(partition_1,partition_2), 0.9574079427713601)

partition_3 = np.asarray([3,2,0,1,1,1])

partition_4 = np.asarray([0,0,0,0,0,1])

npt.assert_allclose(compute_NVI(partition_3,partition_4), 0.9152282678756786)

We can now compute the NVI between the spectral partition and the node type.

## EDIT THIS CELL

print("NVI of spectral partition and node types:",

round(compute_NVI(node_type,spectral_partition),3)) # <-- SOLUTION

NVI of spectral partition and node types: 0.837

The NVI confirms our observations that the spectral partition is actually not very similar to the partition of node types.

We conclude here by double-checking some of the metric properties of the NVI.

# we can check that the NVI is symmetric

npt.assert_allclose(compute_NVI(spectral_partition,node_type), compute_NVI(node_type,spectral_partition))

# and that the distance of a partition to itself is zero

npt.assert_allclose(compute_NVI(spectral_partition,spectral_partition), 0, atol=1e-15)

npt.assert_allclose(compute_NVI(node_type,node_type), 0, atol=1e-15)

Analysing the directed C. Elegans network

As the node types I, S, M and U actually correspond to different functionalities of neurons in the organism, we expect that they play different “roles” in the network. We saw this already when looking at the degree distribution of the different types, where the inter neurons (I) had the the highest degrees.

We can improve our analysis by looking not only at the degree in the undirected network, but studing the in- and out-degrees in the directed C. Elegans network.

Let us start with compiling the directed unweighted C. Elegans network whose adjacency matrix we denote by B B B such that B i j = 1 B_{ij}=1 Bij=1 if A raw , i j > 0 A_{\text{raw}, ij}>0 Araw,ij>0 and B i j = 0 B_{ij}=0 Bij=0 otherwise.

## EDIT THIS CELL

# obtain directed adjacency matrix

B = np.zeros_like(A_raw) # <-- SOLUTION

B[(A_raw) > 0] = 1 # <-- SOLUTION

We can then compute the in- and out-degrees of B B B.

## EDIT THIS CELL

# compute in-degree

d_in = B @ np.ones(N) # <-- SOLUTION

# compute out-degree

d_out = B.T @ np.ones(N) # <-- SOLUTION

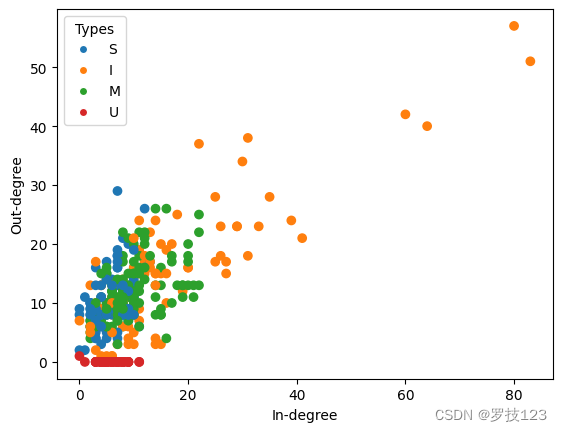

We now plot the neurons in a scatter plot with x-coordinate given by the in-degree and y-coordinate given by the out-degree.

# scatter plot in- and out-degrees

fig, ax = plt.subplots(1)

# plot nodes

scatter = ax.scatter(d_in, d_out, c=color_type, zorder=10)

# plot legend

plt.legend(handles=types_legend, title='Types')

# plot labels

ax.set(xlabel="In-degree", ylabel="Out-degree")

plt.show()

We can see that the combination of in- and out-degreens helps us to distinguish the different types. Most strikingly, the muscles have a very low out-degree (mostly 0) and only incoming nodes as they sit at the bottom of the hierarchy in the nervous system of C. Elegans.

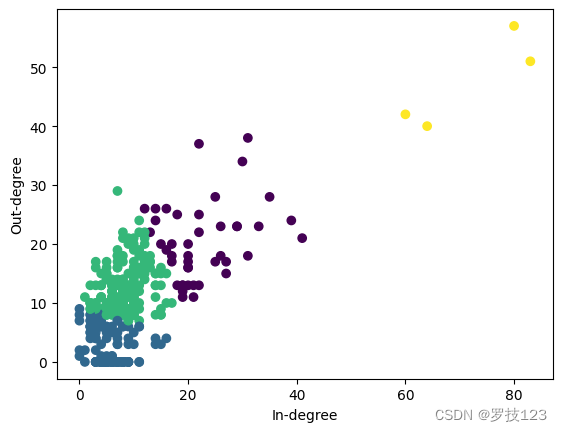

Although it doesn’t look very promising, we will apply k-means clustering to a feature matix Z Z Z that has the in- and out-degrees as columns.

## EDIT THIS CELL

# combine in- and out-degree to new feature matrix

Z = np.asarray([d_in,d_out]).T # <-- SOLUTION

# apply k-means clustering to in and out-degrees

in_out_partition, _, _, _ = kmeans_clustering_multi_runs(Z, 4) # <-- SOLUTION

<ipython-input-27-8846dba3c782>:57: RuntimeWarning: Mean of empty slice.

centroids[label] = cluster_X_l.mean(axis=0)

/usr/local/lib/python3.10/dist-packages/numpy/core/_methods.py:121: RuntimeWarning: invalid value encountered in divide

ret = um.true_divide(

We can visualise the obtained clustering in a scatter plot.

# scatter plot in- and out-degrees

fig, ax = plt.subplots(1)

# plot nodes

scatter = ax.scatter(d_in, d_out, c=in_out_partition, zorder=10)

# plot legend

# plt.legend(handles=types_legend, title='Types')

# plot labels

ax.set(xlabel="In-degree", ylabel="Out-degree")

plt.show()

To quantify the correspondence to the ground truth node types, we evaluate the NVI of the “in-degree out-degree partition” and the node types and observe a slightly lower value as compared to spectral clustering.

## EDIT THIS CELL

print("NVI of in-degree out-degree partition and node types:",

round(compute_NVI(node_type,in_out_partition),3)) # <-- SOLUTION

NVI of in-degree out-degree partition and node types: 0.826

The analysis of in-degree and out-degree patterns of nodes aims at recovering different roles in the network. If you want to read more on how to distinguish nodes in a graph based on in-coming and out-going paths you can have a look at a paper by Kathryn Cooper and Mauricio Barahona on Role-based similarity in directed networks: https://arxiv.org/abs/1012.2726