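The idea behind LangChainHub is a good one: prompts are shared through a hub, so anyone can pull a shared prompt and use it with just a few lines of code.

I am personally very optimistic about this project.

LangChainHub is the officially recommended approach, but its GitHub repository has not been updated in a year, although the data on the hub is still being updated.

Install the dependency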

pip install langchainhub

Prompt

In case you cannot access the hub, here is a copy of the template used in this post.

HUMAN

You are a helpful assistant. Help the user answer any questions.

You have access to the following tools:

{tools}

In order to use a tool, you can use <tool></tool> and <tool_input></tool_input> tags. You will then get back a response in the form <observation></observation>

For example, if you have a tool called 'search' that could run a google search, in order to search for the weather in SF you would respond:

<tool>search</tool><tool_input>weather in SF</tool_input>

<observation>64 degrees</observation>

When you are done, respond with a final answer between <final_answer></final_answer>. For example:

<final_answer>The weather in SF is 64 degrees</final_answer>

Begin!

Previous Conversation:

{chat_history}

Question: {input}

{agent_scratchpad}

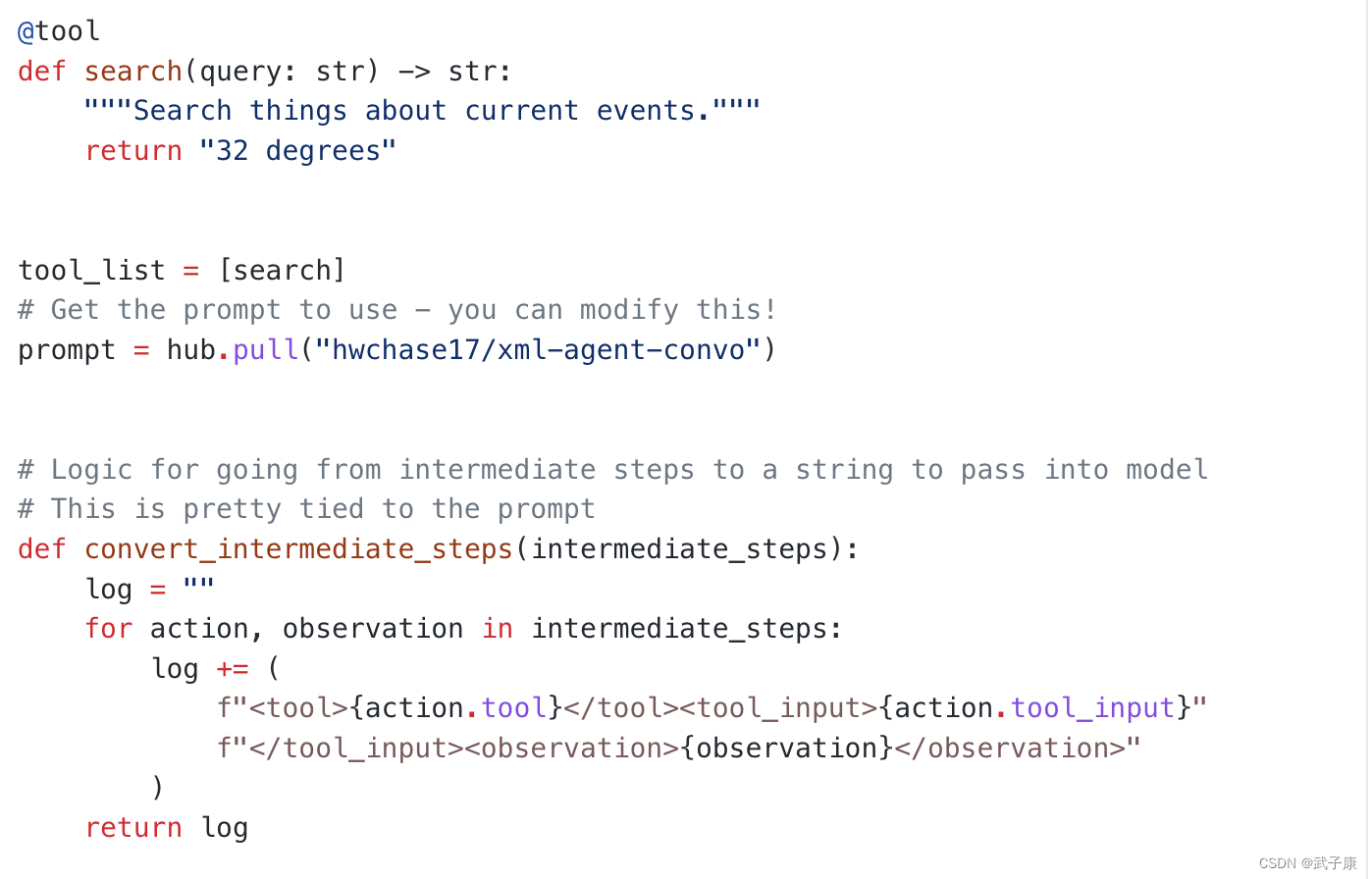
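To make the four placeholders in the template concrete, here is a minimal sketch that fills them with plain `str.format`. It is only a stand-in for what the prompt object pulled from the hub does. The `TEMPLATE` string is an abbreviated copy of the text above, and `render_prompt` is a hypothetical helper name used for illustration.

```python
# Abbreviated copy of the template above; only the variable slots matter here.
TEMPLATE = (
    "You have access to the following tools:\n\n"
    "{tools}\n\n"
    "Previous Conversation:\n\n"
    "{chat_history}\n\n"
    "Question: {input}\n\n"
    "{agent_scratchpad}"
)


def render_prompt(tools, chat_history, user_input, agent_scratchpad):
    """Fill the four template variables with plain strings (hypothetical helper)."""
    return TEMPLATE.format(
        tools=tools,
        chat_history=chat_history,
        input=user_input,
        agent_scratchpad=agent_scratchpad,
    )


# On the first turn there is no history and no tool call yet,
# so chat_history and agent_scratchpad are both empty.
rendered = render_prompt(
    tools="search: Search things about current events.",
    chat_history="",
    user_input="whats the weather in New york?",
    agent_scratchpad="",
)
print(rendered)
```

On later turns, `agent_scratchpad` carries the earlier tool calls and observations, which is how the model sees what it has already tried.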

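Writing the code

The core of the code defines a tool for the Agent to run. The tool simulates a search engine, so that GPT can use it to extend what it knows and complete more complex tasks.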

from langchain import hub
from langchain.agents import AgentExecutor, tool
from langchain.agents.output_parsers import XMLAgentOutputParser
from langchain_openai import ChatOpenAI

model = ChatOpenAI(
    model="gpt-3.5-turbo",
)


@tool
def search(query: str) -> str:
    """Search things about current events."""
    return "32 degrees"


tool_list = [search]

# Get the prompt to use - you can modify this!
prompt = hub.pull("hwchase17/xml-agent-convo")


# Logic for going from intermediate steps to a string to pass into model
# This is pretty tied to the prompt
def convert_intermediate_steps(intermediate_steps):
    log = ""
    for action, observation in intermediate_steps:
        log += (
            f"<tool>{action.tool}</tool><tool_input>{action.tool_input}"
            f"</tool_input><observation>{observation}</observation>"
        )
    return log


# Logic for converting tools to string to go in prompt
def convert_tools(tools):
    return "\n".join([f"{tool.name}: {tool.description}" for tool in tools])


agent = (
    {
        "input": lambda x: x["input"],
        "agent_scratchpad": lambda x: convert_intermediate_steps(
            x["intermediate_steps"]
        ),
    }
    | prompt.partial(tools=convert_tools(tool_list))
    | model.bind(stop=["</tool_input>", "</final_answer>"])
    | XMLAgentOutputParser()
)

agent_executor = AgentExecutor(agent=agent, tools=tool_list)

message = agent_executor.invoke({"input": "whats the weather in New york?"})
print(f"message: {message}")

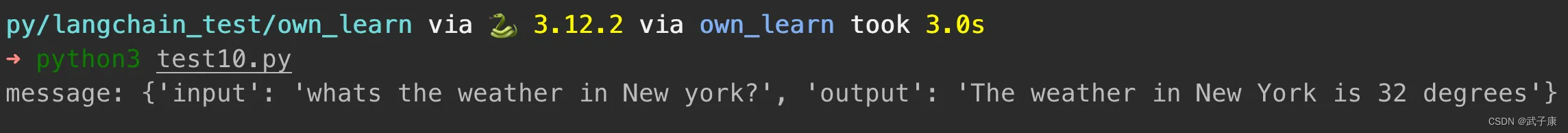
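The stop sequences `</tool_input>` and `</final_answer>` cut the model's reply just before the closing tag, and the output parser then decides whether the reply is a tool call or a final answer. The sketch below shows that decision in plain Python. `parse_model_output` is a hypothetical, simplified stand-in written for this post, not LangChain's `XMLAgentOutputParser`, and it assumes the reply follows the tag layout from the prompt.

```python
def parse_model_output(text):
    """Classify one model reply as a tool call or a final answer.

    Simplified stand-in for illustration; not LangChain's parser.
    """
    if "</tool>" in text:
        # The stop sequence ends generation before "</tool_input>",
        # so the closing tag is usually missing from the text.
        tool_part, input_part = text.split("</tool>", 1)
        tool_name = tool_part.split("<tool>", 1)[1].strip()
        tool_input = input_part.split("<tool_input>", 1)[1]
        tool_input = tool_input.split("</tool_input>", 1)[0].strip()
        return ("action", tool_name, tool_input)
    if "<final_answer>" in text:
        answer = text.split("<final_answer>", 1)[1]
        answer = answer.split("</final_answer>", 1)[0].strip()
        return ("finish", answer)
    raise ValueError(f"Unrecognized model output: {text!r}")


# A tool request, truncated where the stop sequence would cut it.
step = parse_model_output("<tool>search</tool><tool_input>weather in New York")
# A final answer, again without its closing tag.
done = parse_model_output("<final_answer>The weather in New York is 32 degrees")
print(step)
print(done)
```

An "action" result tells the executor to run the named tool, while a "finish" result ends the loop and becomes the `output` field.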

Output

➜ python3 test10.py

message: {'input': 'whats the weather in New york?', 'output': 'The weather in New York is 32 degrees'}
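To see how this result comes about without calling OpenAI, here is a rough simulation of the loop the executor runs: ask the model, run the requested tool, append the observation to the scratchpad, and repeat until a final answer appears. `fake_model` and `run_agent` are hypothetical names, and `fake_model` is a scripted stand-in that ignores the question, so this only sketches the control flow and is not how `AgentExecutor` is implemented.

```python
import re


def search(query):
    """Stub tool that mirrors the fake search engine in this post."""
    return "32 degrees"


TOOLS = {"search": search}


def fake_model(scratchpad):
    """Hypothetical scripted stand-in for the chat model."""
    match = re.search(r"<observation>(.*?)</observation>", scratchpad)
    if match is None:
        # First turn: ask for the tool. The text ends where the
        # "</tool_input>" stop sequence would have cut the reply.
        return "<tool>search</tool><tool_input>weather in New York"
    # Second turn: an observation is available, so answer with it.
    return f"<final_answer>The weather in New York is {match.group(1)}"


def run_agent(question, max_steps=5):
    """Loop until the model emits a final answer or max_steps is reached."""
    scratchpad = ""
    for _ in range(max_steps):
        reply = fake_model(scratchpad)
        final = re.search(r"<final_answer>(.*)", reply, re.DOTALL)
        if final:
            return {"input": question, "output": final.group(1).strip()}
        call = re.search(r"<tool>(.*?)</tool><tool_input>(.*)", reply, re.DOTALL)
        if call is None:
            raise ValueError(f"Unrecognized model output: {reply!r}")
        name, tool_input = call.group(1), call.group(2)
        observation = TOOLS[name](tool_input)
        # Same layout that convert_intermediate_steps produces.
        scratchpad += (
            f"<tool>{name}</tool><tool_input>{tool_input}"
            f"</tool_input><observation>{observation}</observation>"
        )
    raise RuntimeError("No final answer within max_steps")


result = run_agent("whats the weather in New york?")
print(result)
```

The returned dictionary has the same two keys, `input` and `output`, as the message printed above.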