Environment Preparation

Base Environment

| Hostname | OS | CPU | Memory | Disk | Kubernetes version | Docker version | IP |
|---|---|---|---|---|---|---|---|
| master | CentOS 7 | 4 cores | 4 GB | sdb, 20 GB | 1.17.0 | 23.0.1 | 192.168.1.128 |
| node01 | CentOS 7 | 4 cores | 4 GB | sdb, 20 GB | 1.17.0 | 23.0.1 | 192.168.1.129 |
| node02 | CentOS 7 | 4 cores | 4 GB | sdb, 20 GB | 1.17.0 | 23.0.1 | 192.168.1.130 |
| node03 | CentOS 7 | 4 cores | 4 GB | sdb, 20 GB | 1.17.0 | 23.0.1 | 192.168.1.131 |

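Rook/Ceph versions installed:

Ceph v15.2.11 / Rook v1.6.3

Disk Creation

Rook versions later than 1.3 can no longer create a cluster from a directory; a dedicated raw disk is required. In other words, create a new disk, attach it to the host, and use it as-is without formatting. The layout on a node looks like this: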

sda

├─sda1 xfs 2a65c467-96ee-406c-b275-2b299f95e3c7 /boot

├─sda2 LVM2_member jfvy2n-75dR-P0q4-pVAq-Q64a-Rud3-E1Yf7o

│ └─centos-root xfs 7aae42ae-f917-43c4-8356-93eaf9e5538d /

└─sda3

sdb

Simply attach an additional disk, which shows up as sdb. It does not need to be formatted.
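Because the disk must carry no filesystem signature, it can be worth checking that before deploying. The following is only a minimal sketch and not part of the original walkthrough: `is_raw_fstype` is a hypothetical helper that takes the text printed by `lsblk -no FSTYPE` for a device and succeeds only when that text is empty.

```shell
#!/bin/sh
# Hypothetical helper (not part of Rook): succeeds when the FSTYPE text
# reported for a device and its partitions is empty, i.e. nothing on the
# disk carries a filesystem or LVM signature.
is_raw_fstype() {
    # Strip all whitespace; anything left is a type such as "xfs".
    [ -z "$(printf '%s' "$1" | tr -d '[:space:]')" ]
}

# Intended use on a node (assumes the new disk is /dev/sdb, as in this guide):
#   is_raw_fstype "$(lsblk -no FSTYPE /dev/sdb)" && echo "sdb is raw"
```

For the layout shown above, sda would fail this check (it reports xfs and LVM2_member), while an untouched sdb would pass.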

Installing lvm2

# Make sure lvm2 is installed

yum install lvm2 -y

# Load the rbd kernel module

modprobe rbd

cat > /etc/rc.sysinit << EOF

#!/bin/bash

for file in /etc/sysconfig/modules/*.modules

do

[ -x \$file ] && \$file

done

EOF

cat > /etc/sysconfig/modules/rbd.modules << EOF

modprobe rbd

EOF

chmod 755 /etc/sysconfig/modules/rbd.modules

lsmod |grep rbd
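On systemd-based hosts such as CentOS 7, another way to load a module at boot is a file under /etc/modules-load.d, which systemd-modules-load reads at startup. The sketch below is an alternative to the scripts above, not what the original setup used; `persist_module` is a hypothetical helper, and its optional second argument exists only so it can be tried against a scratch directory.

```shell
#!/bin/sh
# Sketch: persist a kernel module through systemd's modules-load.d mechanism.
# persist_module is a hypothetical helper, not a standard tool.
persist_module() {
    mod="$1"
    dir="${2:-/etc/modules-load.d}"
    # One module name per line; *.conf files in this directory are read at boot.
    printf '%s\n' "$mod" > "$dir/$mod.conf"
}

# Intended use on each node (as root):
#   persist_module rbd && modprobe rbd
```

After a reboot, `lsmod | grep rbd` should confirm that the module was loaded.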

Downloading Rook

git clone --single-branch --branch v1.6.3 https://github.com/rook/rook.git

Modifying Rook operator.yaml

cd rook/cluster/examples/kubernetes/ceph

vim operator.yaml

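Change the Rook CSI image addresses. The defaults may point to gcr images, which cannot be reached from mainland China, so the images need to be mirrored to an Alibaba Cloud registry: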

ROOK_CSI_REGISTRAR_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-node-driver-registrar:v2.0.1"

ROOK_CSI_RESIZER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-resizer:v1.0.1"

ROOK_CSI_PROVISIONER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-provisioner:v2.0.4"

ROOK_CSI_SNAPSHOTTER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-snapshotter:v4.0.0"

ROOK_CSI_ATTACHER_IMAGE: "registry.cn-beijing.aliyuncs.com/dotbalo/csi-attacher:v3.0.2"
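After editing, it is easy to leave one of these settings commented out by accident. As a rough sketch, assuming the settings appear one per line in operator.yaml and that inactive ones start with `#`, the hypothetical helper `active_csi_images` prints only the overrides that are actually in effect.

```shell
#!/bin/sh
# Hypothetical helper: print the ROOK_CSI_*_IMAGE settings that are active
# (not commented out) in the given operator.yaml.
active_csi_images() {
    grep -E '^[[:space:]]*ROOK_CSI_[A-Z_]+_IMAGE:' "$1"
}

# Intended use from the ceph examples directory:
#   active_csi_images operator.yaml
```

If fewer than the five lines listed above are printed, at least one image override is still commented out or misspelled.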

Modifying Rook cluster.yaml

vim cluster.yaml

Configuring OSD nodes

First change

# Set both to false so that not every disk on every node is used as an OSD

useAllNodes: false

useAllDevices: false

Second change

nodes:
- name: "node01"
  deviceFilter: "sdb"
- name: "node02"
  deviceFilter: "sdb"
- name: "node03"
  deviceFilter: "sdb"
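With more nodes, typing this list by hand gets error-prone. The sketch below generates the same stanza; `gen_nodes` is a hypothetical helper, and it assumes every node uses the same deviceFilter (which Rook treats as a regular expression on device names). The indentation it prints is relative and has to be aligned with the storage section of your own cluster.yaml.

```shell
#!/bin/sh
# Hypothetical helper: print a "nodes:" list for cluster.yaml, giving every
# node the same deviceFilter.
gen_nodes() {
    filter="$1"
    shift
    echo "nodes:"
    for node in "$@"; do
        printf '%s\n' "- name: \"$node\"" "  deviceFilter: \"$filter\""
    done
}

# Example: gen_nodes sdb node01 node02 node03
```

The node names must match the Kubernetes node names, which in this guide are node01 to node03.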

Deploying the System

Deploying Rook

cd rook/cluster/examples/kubernetes/ceph  # skip if you are already in this directory

kubectl create -f crds.yaml -f common.yaml -f operator.yaml

Wait for the pods to start. Continue to the next step only once all of them are Running:

rook-ceph-operator-7d95477f88-fs8ck 1/1 Running 0 92m

rook-discover-6r9ld 1/1 Running 0 92m

rook-discover-86d88 1/1 Running 0 92m

rook-discover-mwxx6 1/1 Running 0 92m
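Checking the list by eye works for a handful of pods; for scripting the wait, something like the following may help. It is a sketch only: `all_pods_ready` is a hypothetical helper that reads `kubectl get pod` output on stdin and looks at the STATUS column, not at the READY counts.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pod` output on stdin and succeed
# only if every listed pod has status Running or Completed.
all_pods_ready() {
    awk 'NF >= 3 && $1 != "NAME" && $3 != "Running" && $3 != "Completed" { bad = 1 }
         END { exit bad }'
}

# Intended use:
#   kubectl -n rook-ceph get pod | all_pods_ready && echo "safe to continue"
```

Wrapping the call in a loop with a short sleep gives a simple gate before creating the cluster.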

Creating the Ceph cluster

kubectl create -f cluster.yaml

After creation, check the status of the pods:

[root@master ceph]# kubectl -n rook-ceph get pod

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-26rpm 3/3 Running 0 93m

csi-cephfsplugin-jhpk6 3/3 Running 0 93m

csi-cephfsplugin-kmxb4 3/3 Running 0 93m

csi-cephfsplugin-provisioner-5dd4c579b5-cxzm5 6/6 Running 0 93m

csi-cephfsplugin-provisioner-5dd4c579b5-ljs68 6/6 Running 0 93m

csi-rbdplugin-hlvtn 3/3 Running 0 93m

csi-rbdplugin-jzs8p 3/3 Running 0 93m

csi-rbdplugin-nnf9g 3/3 Running 0 93m

csi-rbdplugin-provisioner-7d4bcdf9d6-h62x4 6/6 Running 0 93m

csi-rbdplugin-provisioner-7d4bcdf9d6-s5twx 6/6 Running 0 93m

rook-ceph-crashcollector-node01-676f5f55d8-fzfdx 1/1 Running 0 92m

rook-ceph-crashcollector-node02-54f576478c-qn2j8 1/1 Running 0 91m

rook-ceph-crashcollector-node03-655547d9c4-nlqhd 1/1 Running 0 92m

rook-ceph-mgr-a-77c8c67445-w4z2h 1/1 Running 1 92m

rook-ceph-mon-a-68f87b6bb9-twcm5 1/1 Running 0 93m

rook-ceph-mon-b-77bcd7474d-r5cw6 1/1 Running 0 93m

rook-ceph-mon-c-66847558c6-6dx8r 1/1 Running 0 92m

rook-ceph-operator-7d95477f88-fs8ck 1/1 Running 0 94m

rook-ceph-osd-0-7885684746-lhgkr 1/1 Running 0 92m

rook-ceph-osd-1-9f75d7555-b25p2 1/1 Running 0 91m

rook-ceph-osd-2-58ff6c6c58-kcbjr 1/1 Running 7 90m

rook-ceph-osd-prepare-node01-shdt2 0/1 Completed 0 65m

rook-ceph-osd-prepare-node02-774pm 0/1 Completed 0 65m

rook-ceph-osd-prepare-node03-8mh4j 0/1 Completed 0 65m

rook-ceph-tools-5f666999d8-dhwsw 1/1 Running 0 87m

rook-discover-6r9ld 1/1 Running 0 94m

rook-discover-86d88 1/1 Running 0 94m

rook-discover-mwxx6 1/1 Running 0 94m

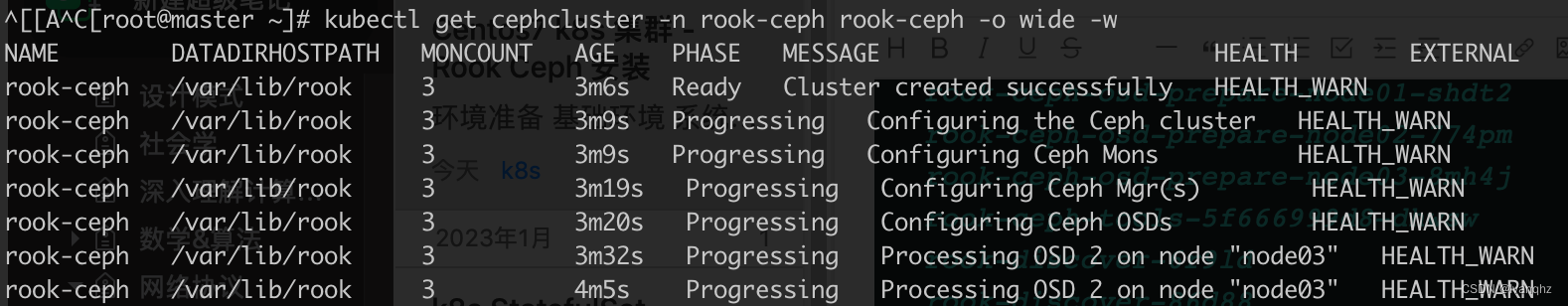

Checking cluster deployment progress

- Watch pod creation in real time

kubectl get pod -n rook-ceph -w

- Watch cluster creation in real time

kubectl get cephcluster -n rook-ceph rook-ceph -w

- Show a detailed description

kubectl describe cephcluster -n rook-ceph rook-ceph

Once the osd-x containers have started, the installation has succeeded.
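To turn that check into something scriptable, the OSD pods can be counted from the pod listing. This is a minimal sketch: `running_osds` is a hypothetical helper, and the expected count of 3 is an assumption based on the one-disk-per-node layout used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: count Running OSD daemon pods in `kubectl get pod`
# output read from stdin. The rook-ceph-osd-prepare-* jobs are excluded
# because the pattern requires a numeric OSD id after "rook-ceph-osd-".
running_osds() {
    awk '$1 ~ /^rook-ceph-osd-[0-9]+-/ && $3 == "Running" { n++ }
         END { print n + 0 }'
}

# Intended use (3 is expected for the three-node layout in this guide):
#   kubectl -n rook-ceph get pod | running_osds
```

A count lower than the number of configured disks usually means an osd-prepare job skipped a device, and its log is the first place to look.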

Installing the Ceph client tools


kubectl create -f toolbox.yaml -n rook-ceph

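Once the toolbox container is Running, open a shell in it (for example with kubectl -n rook-ceph exec -it deploy/rook-ceph-tools -- bash) and run Ceph commands: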

[root@rook-ceph-tools-5f666999d8-dhwsw /]# ceph status

cluster:

id: 8ff792cf-570f-4fc7-8300-682d82bc79a9

health: HEALTH_WARN

mons are allowing insecure global_id reclaim

clock skew detected on mon.c

93 slow ops, oldest one blocked for 503 sec, mon.c has slow ops

services:

mon: 3 daemons, quorum a,b,c (age 94m)

mgr: a(active, since 92m)

osd: 3 osds: 3 up (since 15m), 3 in (since 78m)

data:

pools: 1 pools, 1 pgs

objects: 0 objects, 0 B

usage: 3.0 GiB used, 57 GiB / 60 GiB avail

pgs: 1 active+clean
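The health line is the part of this output worth automating. The sketch below pulls it out of the status text; `ceph_health` is a hypothetical helper, written on the assumption that the output has the `health:` layout shown above.

```shell
#!/bin/sh
# Hypothetical helper: extract the health state (for example HEALTH_OK or
# HEALTH_WARN) from `ceph status` output read on stdin.
ceph_health() {
    awk '$1 == "health:" { print $2; exit }'
}

# Intended use inside the toolbox pod:
#   ceph status | ceph_health
```

In the output above the state is HEALTH_WARN. The clock skew warning points at time synchronization between the nodes. The global_id warning can, according to Ceph's health-check documentation, be cleared with `ceph config set mon auth_allow_insecure_global_id_reclaim false` once all clients are up to date.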

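Removing Rook-Ceph

Deleting the cluster, the Operator, and related resources

# Delete the cluster first, while the operator is still running and can clean it up

kubectl delete -f cluster.yaml

kubectl delete -f operator.yaml

kubectl delete -f common.yaml

kubectl delete -f crds.yaml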

Deleting the data on the node machines (run on every node)

rm -rf /var/lib/rook

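Wiping the disks on the node machines

# List any leftover Ceph device-mapper entries

ls /dev/mapper/ceph-*

dmsetup ls

# Remove only the Ceph mappings; dmsetup remove_all would also target unrelated LVM volumes such as centos-root

ls /dev/mapper/ceph-* | xargs -I% -- dmsetup remove %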

dd if=/dev/zero of=/dev/sdb bs=512k count=1

wipefs -af /dev/sdb
