目录:

一、Spark Streaming是什么

二、Spark Streaming的A Quick Example

三、Discretized Streams (DStreams)

四、Dstream时间窗口

五、Dstream操作

六、Spark Streaming优化和特性

一、Spark Streaming是什么

Spark Streaming is an extension of the core Spark API that enables scalable, high-throughput, fault-tolerant stream processing of live data streams. Data can be ingested from many sources like Kafka, Flume, Twitter, ZeroMQ, Kinesis, or TCP sockets, and can be processed using complex algorithms expressed with high-level functions like map, reduce, join and window. Finally, processed data can be pushed out to filesystems, databases, and live dashboards. In fact, you can apply Spark’s machine learning and graph processing algorithms on data streams.

二、Spark Streaming的A Quick Example

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.11</artifactId>

<version>2.1.0</version>

</dependency>

三、Discretized Streams (DStreams)

提前参考:SparkStreaming在启动执行步鄹和DStream的理解

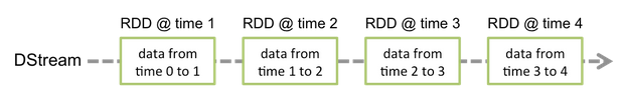

Discretized Stream or DStream is the basic abstraction provided by Spark Streaming.A DStream is represented by a continuous series of RDDs

备注:Dstream就是一个基础抽象的管道,每一个Duration就是一个RDD

四、Dstream时间窗口

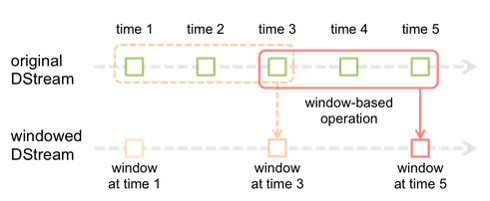

Spark Streaming also provides windowed computations, which allow you to apply transformations over a sliding window of data. The following figure illustrates this sliding window.

使用Spark Streaming每次只能消费当前批次内的数据,当然可以通过window操作,消费过去一段时间(多个批次)内的数据。举个简例子,需要每隔10秒,统计当前小时的PV和UV,在数据量特别大的情况下,使用window操作并不是很好的选择,通常是借助其它如Redis、HBase等完成数据统计。

备注:时间窗口的时间一定是每个Duration产生成RDD的倍数

五、Dstream操作

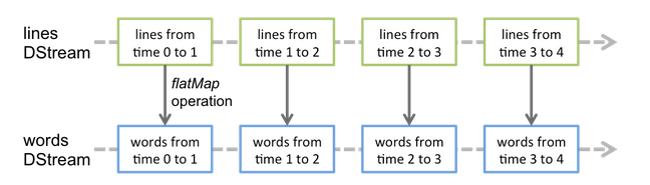

转换操作 Transformations on Dstreams

基于窗口的转换操作 Window Operations

输出操作 Output Operations on DStreams

六、Spark Streaming优化和特性

运行时间优化

1、增加并行度

2、减少数据序列化、反序列化的负担

3、设置合理的批处理间隔

4、减少因任务提交和分发带来的负担

内存使用优化

1、控制批处理间隔内的数据量

2、及时清理不在使用的数据

3、观察及适当调整GC策略

测试例子路径

spark1.6.2\spark-1.6.2-bin-hadoop2.6\examples\src\main\scala\org\apache\spark\examples\streaming

北京小辉微信公众号

大数据资料分享请关注