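I. Preface

In the previous two articles I covered the basics and the advanced features of requests in detail, with plenty of examples. Building on those two articles, this one is devoted entirely to hands-on practice.

Environment: Jupyter

If you don't know how to use Jupyter, see my article: Jupyter installation and usage tutorial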

II. Hands-on Practice

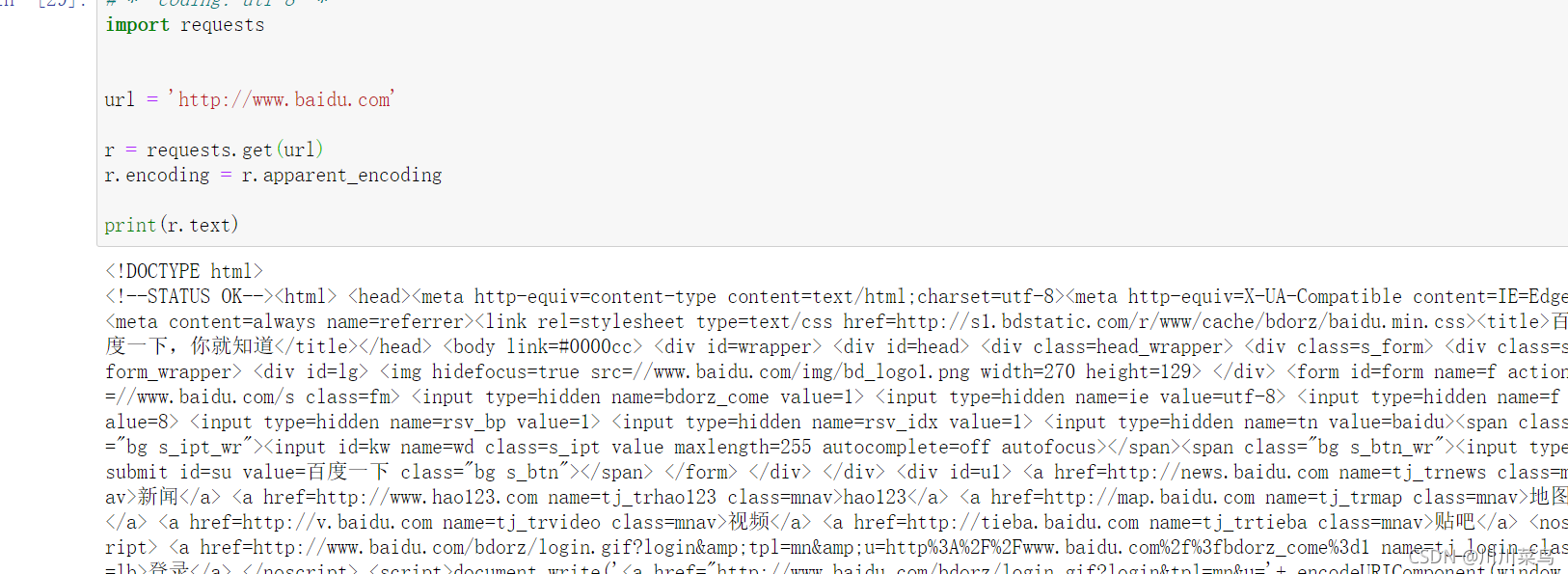

1) Fetch the Baidu home page and print it

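```python
# -*- coding: utf-8 -*-
import requests

url = 'http://www.baidu.com'

r = requests.get(url)
# Use the encoding detected from the page content instead of the one taken from the headers
r.encoding = r.apparent_encoding

print(r.text)
```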

Run:

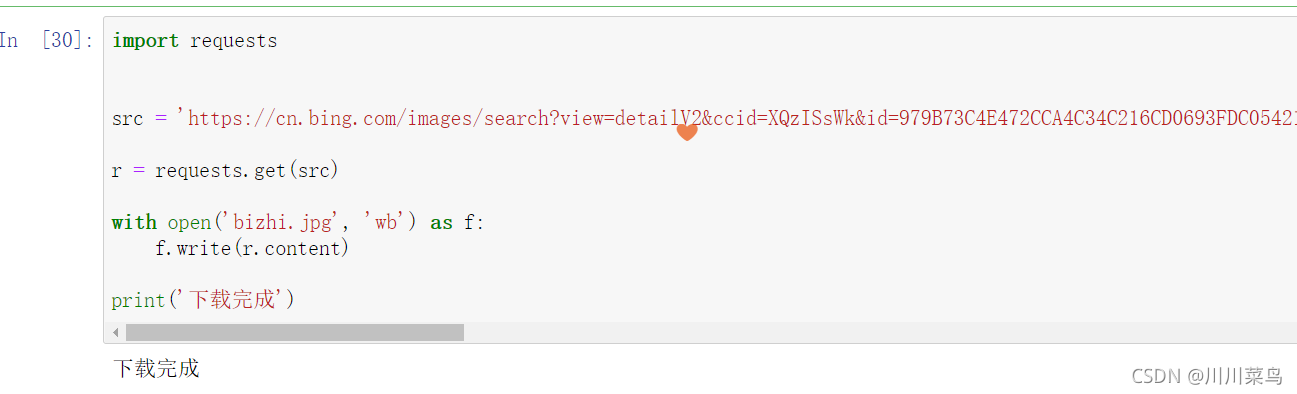
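The `r.encoding = r.apparent_encoding` line matters because, when a server sends a `text/*` Content-Type without a charset, requests falls back to ISO-8859-1 when building `r.text`, and Chinese pages come out garbled. The sketch below reproduces that effect offline with plain `bytes.decode`; the sample string stands in for a page body and is not fetched from anywhere.

```python
# Sample Chinese text standing in for a page body (illustrative, not fetched)
original = '百度一下,你就知道'
raw = original.encode('utf-8')  # the bytes a UTF-8 page would send

# Decoding with the ISO-8859-1 fallback yields mojibake
garbled = raw.decode('iso-8859-1')
# Decoding with the encoding actually used by the content restores the text
restored = raw.decode('utf-8')

print(garbled)
print(restored)
```

`apparent_encoding` guesses the encoding from the bytes themselves, which is why it usually lands on the right codec for pages like this.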

2) Download a photo of a handsome guy to your computer

Suppose we have this link: Zhang Jie photo link

Now I want to download this image:

```python
from urllib.parse import parse_qs, urlparse

import requests

# This is a Bing image-search detail page, not the image file itself
src = 'https://cn.bing.com/images/search?view=detailV2&ccid=XQzISsWk&id=979B73C4E472CCA4C34C216CD0693FDC05421E1E&thid=OIP.XQzISsWklI6N2WY4wwyZSwHaHa&mediaurl=https%3A%2F%2Ftse1-mm.cn.bing.net%2Fth%2Fid%2FR-C.5d0cc84ac5a4948e8dd96638c30c994b%3Frik%3DHh5CBdw%252fadBsIQ%26riu%3Dhttp%253a%252f%252fp2.music.126.net%252fPFVNR3tU9DCiIY71NdUDcQ%253d%253d%252f109951165334518246.jpg%26ehk%3Do08VEDcuKybQIPsOGrNpQ2glID%252fIiEV7cw%252bFo%252fzopiM%253d%26risl%3D1%26pid%3DImgRaw%26r%3D0&exph=1410&expw=1410&q=%e5%bc%a0%e6%9d%b0&simid=608020541519853506&form=IRPRST&ck=68F7B9052016D84898D3E330A6F4BC38&selectedindex=2&ajaxhist=0&ajaxserp=0&vt=0&sim=11'

# The direct image address is carried in the page URL's mediaurl query parameter
img_url = parse_qs(urlparse(src).query)['mediaurl'][0]

r = requests.get(img_url)
r.raise_for_status()  # stop if the server returned an error status

with open('bizhi.jpg', 'wb') as f:
    f.write(r.content)

print('Download complete')
```

Run:

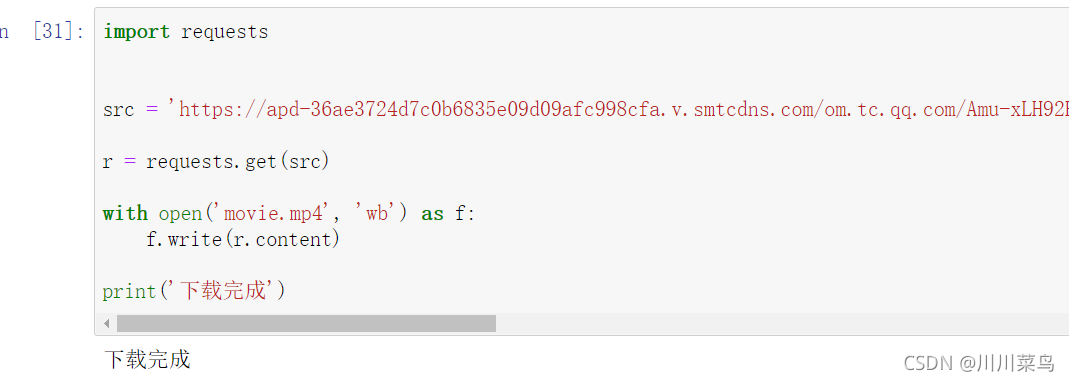
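If the URL passed to `requests.get` points at an HTML page (for example a search-result page) rather than at the image file, the download still "succeeds" but writes HTML into `bizhi.jpg`. A quick sanity check, sketched here as a hypothetical helper `looks_like_jpeg`, is to test the first bytes: JPEG files begin with `FF D8 FF`. Checking that `r.headers.get('Content-Type')` starts with `image/` is another option.

```python
def looks_like_jpeg(data: bytes) -> bool:
    """Return True if the bytes start with the JPEG signature FF D8 FF."""
    return data[:3] == b'\xff\xd8\xff'


# Usage with the response from the example (not executed here):
#     if looks_like_jpeg(r.content):
#         with open('bizhi.jpg', 'wb') as f:
#             f.write(r.content)
#     else:
#         print('Not a JPEG; check the URL')

print(looks_like_jpeg(b'\xff\xd8\xff\xe0' + b'\x00' * 16))  # True
print(looks_like_jpeg(b'<!DOCTYPE html>'))                  # False
```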

4) Download a video of a pretty girl to your computer

For example, say I have a video link ("JK girl swaying"). Code:

```python
import requests

src = 'https://apd-36ae3724d7c0b6835e09d09afc998cfa.v.smtcdns.com/om.tc.qq.com/Amu-xLH92Fdz3C-7PsjutQi_lMKUzCnkiicBjZ69cAqk/uwMROfz2r55oIaQXGdGnC2dePkfe0TtOFg8QaGVhJ2MPCPEj/svp_50001/szg_9711_50001_0bf26aabmaaaqyaarhnfdnqfd4gdc3yaafsa.f632.mp4?sdtfrom=v1010&guid=442bebcff8ab31b452c4a64140cd7f3a&vkey=762D67379522C89E3A76A0759F02B2905311E3FE385B3EC351571A1F2D5A0A6A58D5744F68F9C668211191507472C84F4D2B7D147B7F1BB833B04D6E0CC3945CA361CF9E63E01C277F08CD3D69B288562D33EB7EB83861585CB549B2D4EE38E50CA732275EE0B5ECD680378B2DBEBB0DBEE5B100998B1A83694140CF8588CABD91EB22B4369D5940'

r = requests.get(src)
r.raise_for_status()  # stop if the link is invalid or the server refuses it

# Write the raw bytes of the video to disk
with open('movie.mp4', 'wb') as f:
    f.write(r.content)

print('Download complete')
```

Run (the download succeeds; open the video yourself to check, not shown here):

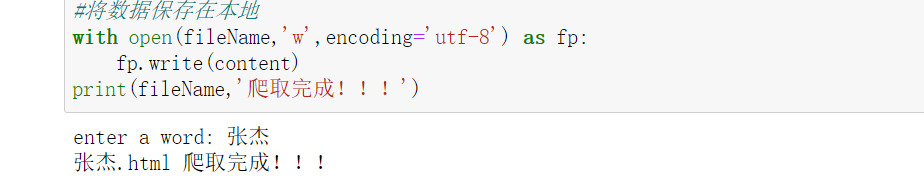
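`r.content` loads the entire video into memory before anything is written. For large files, a common alternative is `requests.get(src, stream=True)` combined with `r.iter_content(chunk_size=...)`, which writes the file piece by piece. The helper below, `save_chunks` (a hypothetical name), accepts any iterable of byte chunks so it can be tried without a network connection; the 1 MiB chunk size in the usage comment is an arbitrary choice.

```python
from typing import Iterable


def save_chunks(chunks: Iterable[bytes], path: str) -> int:
    """Write byte chunks to path one at a time; return the total bytes written."""
    total = 0
    with open(path, 'wb') as f:
        for chunk in chunks:
            if chunk:  # skip empty keep-alive chunks
                f.write(chunk)
                total += len(chunk)
    return total


# Usage with requests (not executed here):
#     with requests.get(src, stream=True) as r:
#         r.raise_for_status()
#         save_chunks(r.iter_content(chunk_size=1024 * 1024), 'movie.mp4')
```

Only one chunk is held in memory at a time, so memory use stays flat regardless of the video's size.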

5) Scrape Sogou keyword search results

Code:

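```python
import requests

# Target URL
url = 'https://www.sogou.com/web'

kw = input('enter a word: ')

# Send a browser User-Agent so the request looks like it comes from a normal browser
header = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.131 Safari/537.36'
}

# Query-string parameters; requests appends them to the URL as ?query=...
param = {
    'query': kw
}

# Send the request with the disguised headers
response = requests.get(url=url, params=param, headers=header)

# Get the response body as text
content = response.text

fileName = kw + '.html'

# Save the data locally
with open(fileName, 'w', encoding='utf-8') as fp:
    fp.write(content)

print(fileName, 'scraped successfully!!!')
```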

Run it, type 张杰 (Zhang Jie) and press Enter.

Then open the downloaded HTML file to see the result.
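The `params` dictionary is turned into a query string and appended to the URL. The standard-library `urllib.parse.urlencode` shows what that encoding looks like: each value's UTF-8 bytes are percent-escaped. requests encodes dictionary params the same way, so searching for 张杰 requests roughly the URL printed below.

```python
from urllib.parse import urlencode

base_url = 'https://www.sogou.com/web'
param = {'query': '张杰'}

# Percent-encode the UTF-8 bytes of each value, as requests does for params
query_string = urlencode(param)
full_url = base_url + '?' + query_string

print(full_url)  # https://www.sogou.com/web?query=%E5%BC%A0%E6%9D%B0
```

The same `%E5%BC%A0%E6%9D%B0` sequence appears as `q=%e5%bc%a0%e6%9d%b0` in the Bing link from example 2; hex case does not matter in percent-encoding.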

6) Scrape Baidu Translate

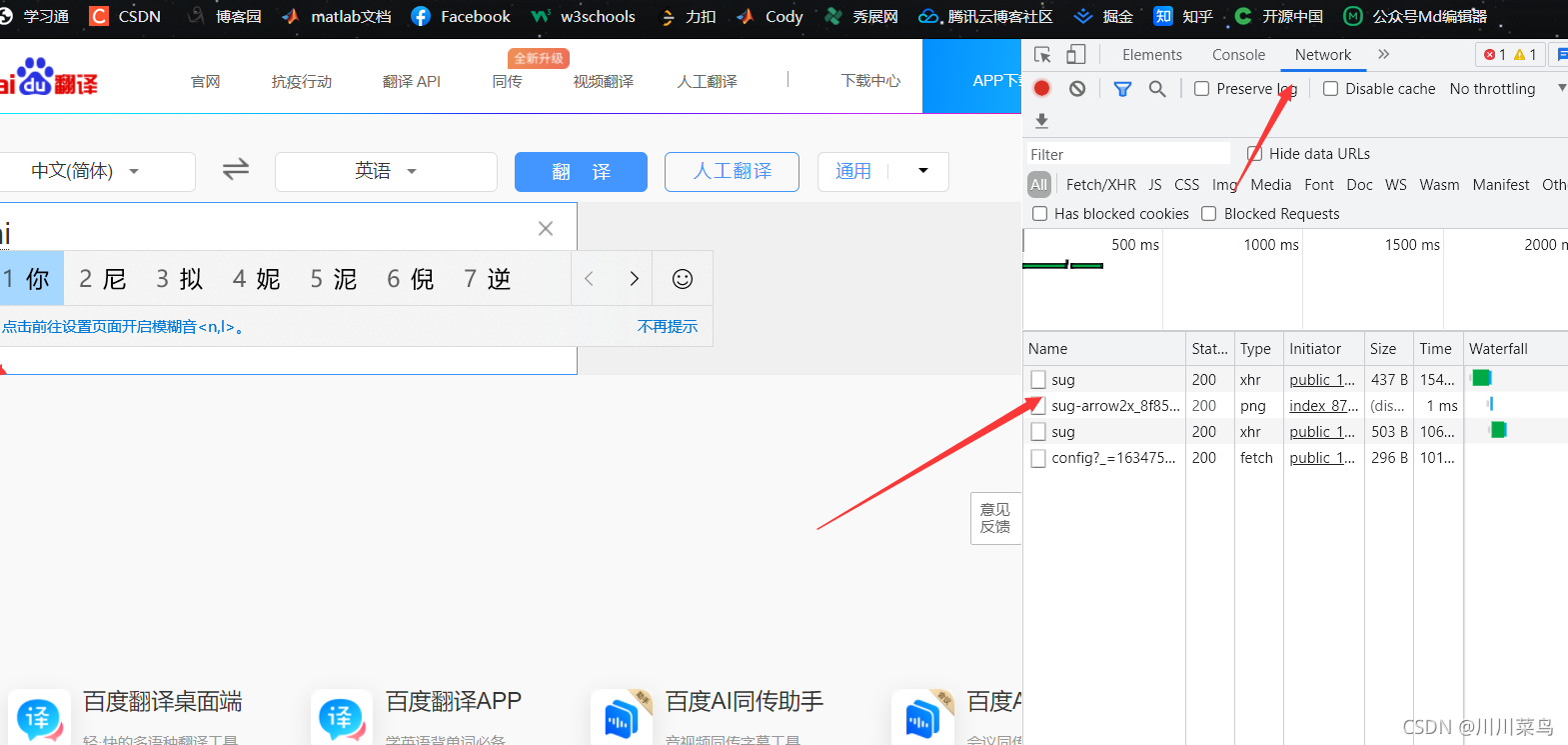

Analyze the page to find the API endpoint:

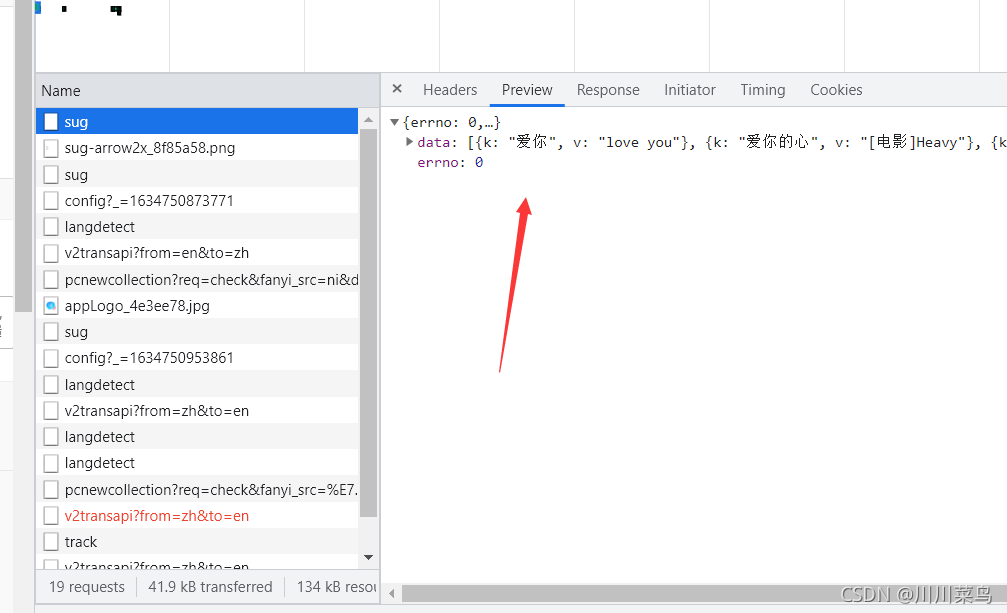

Open it:

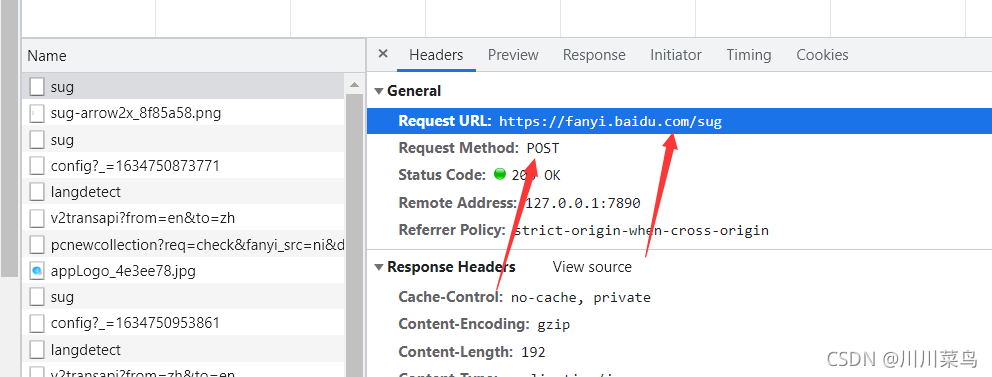

From this we get the endpoint URL and the request method (POST).

Code:

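```python
import json

import requests

url = 'https://fanyi.baidu.com/sug'

word = input('Enter the word or sentence to translate: ')

# Form data sent in the POST body
data = {
    'kw': word
}

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2626.106 Safari/537.36'
}

response = requests.post(url=url, data=data, headers=headers)

# The endpoint returns JSON; parse it into a dict
dic_obj = response.json()
# print(dic_obj)

filename = word + '.json'
# ensure_ascii=False keeps Chinese characters readable in the saved file
with open(filename, 'w', encoding='utf-8') as fp:
    json.dump(dic_obj, fp=fp, ensure_ascii=False)

# 'data' is a list of suggestions; each entry's 'v' field holds the translation
suggestions = dic_obj.get('data', [])
if suggestions:
    print(suggestions[0]['v'])
```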

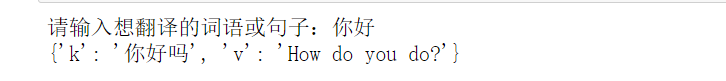

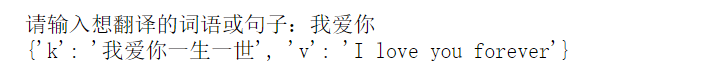

测试一:

测试二:
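The `/sug` endpoint answers with JSON whose `data` field is a list of suggestion entries, and the translation is read from each entry's `v` field. Indexing one fixed position in that list raises an `IndexError` whenever fewer entries come back, so a defensive alternative is to collect every `v`. The helper name `extract_translations` is hypothetical, and the sample payload is made up: it only mirrors the `data`/`v` structure the code relies on, not a real response.

```python
def extract_translations(payload: dict) -> list:
    """Return the 'v' (translation) field of every suggestion in payload['data']."""
    return [item['v'] for item in payload.get('data', []) if 'v' in item]


# Made-up payload that only mimics the shape the example code relies on
sample = {'data': [{'v': 'n. dog'}, {'v': 'n. dogs'}]}

print(extract_translations(sample))  # ['n. dog', 'n. dogs']
print(extract_translations({}))      # []
```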

7) Scrape the Douban movie chart

Target URL:

https://movie.douban.com/chart

Code:

import json

import requests

url='https://movie.douban.com/j/chart/top_list?'

params={

'type': '11',

'interval_id': '100:90',

'action': '',

'start': '0',

'limit': '20',

}

headers = {

'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2626.106 Safari/537.36'

}

reponse=requests.get(url=url,params=params,headers=headers)

dic_obj=reponse.json()

print(dic_obj)

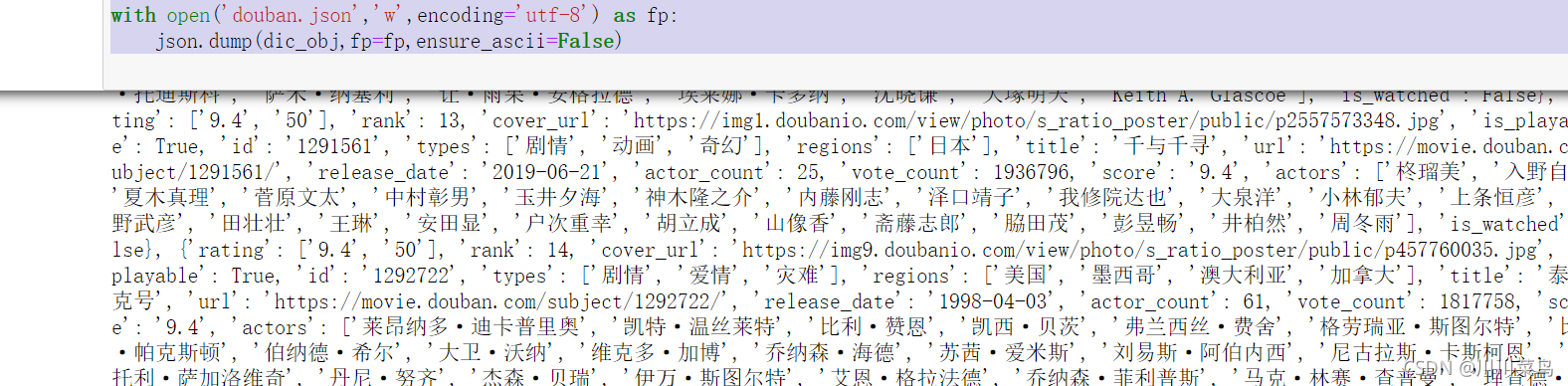

with open('douban.json','w',encoding='utf-8') as fp:

json.dump(dic_obj,fp=fp,ensure_ascii=False)

运行:(同时保存为json)
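The `start` and `limit` parameters suggest the endpoint is paged: `start` is the offset of the first movie and `limit` is the page size. Below is a sketch of building parameters for successive pages; `page_params` is a hypothetical helper, and whether the server honors every `limit` value is not verified here.

```python
def page_params(page: int, limit: int = 20) -> dict:
    """Build the query parameters for a zero-based page of the Douban chart."""
    return {
        'type': '11',
        'interval_id': '100:90',
        'action': '',
        'start': str(page * limit),  # offset of the first movie on this page
        'limit': str(limit),
    }


# First three pages: start = 0, 20, 40
for page in range(3):
    print(page_params(page)['start'])

# Usage with requests (not executed here):
#     r = requests.get(url, params=page_params(1), headers=headers)
```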

8) Scrape photos of girls in JK uniforms

```python
import os
import re
import time
import urllib.request

import requests

header = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_13_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.162 Safari/537.36'
}

url = 'https://cn.bing.com/images/async?q=jk%E5%88%B6%E6%9C%8D%E5%A5%B3%E7%94%9F%E5%A4%B4%E5%83%8F&first=118&count=35&relp=35&cw=1177&ch=705&tsc=ImageBasicHover&datsrc=I&layout=RowBased&mmasync=1&SFX=4'

response = requests.get(url=url, headers=header)
c = response.text

# Capture the src attribute of the image inside each result card
pattern = re.compile(
    r'<div class="imgpt".*?<div class="img_cont hoff">.*?src="(.*?)".*?</div>', re.S
)

items = re.findall(pattern, c)
# print(items)

os.makedirs('photo', exist_ok=True)

for a in items:
    print('Downloading image: ' + a)
    # Name the file after the current Unix timestamp; the 2-second pause keeps names unique
    filename = 'photo/' + str(int(time.time())) + '.jpg'
    urllib.request.urlretrieve(a, filename)
    print('Saved to ' + filename)
    # Pause between downloads to avoid hammering the server
    time.sleep(2)
```

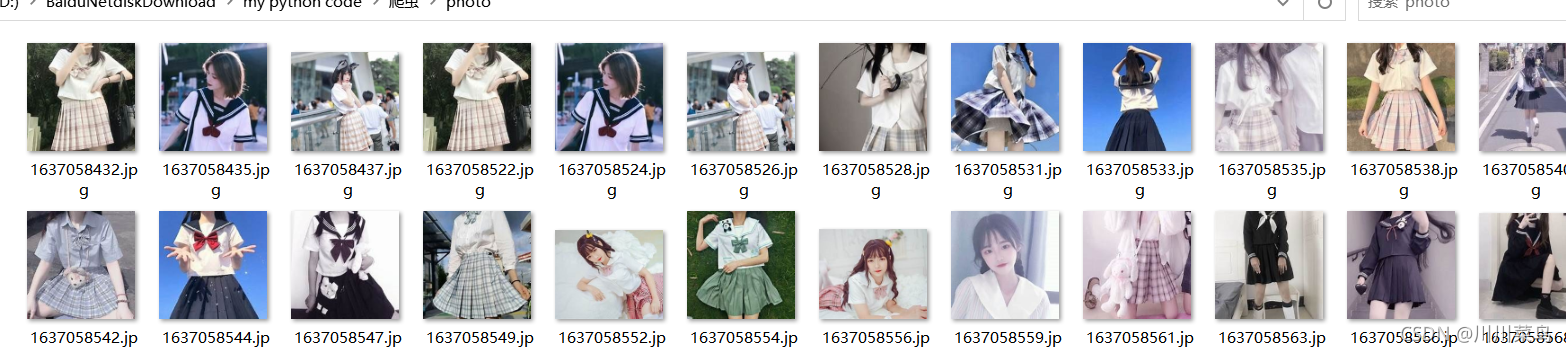

效果:

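A note: I haven't covered urllib yet. I'll leave it for next time, since I think it deserves its own explanation. As for regular expressions, I've already covered them; if you can't follow the re patterns, go read the regex tutorial first.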
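To see what the regular expression in example 8 captures, it can be run against a small hand-written snippet. The HTML below is made up and only imitates the `imgpt` / `img_cont hoff` structure the pattern expects; real Bing markup may differ. `re.S` lets `.` match newlines, and the non-greedy `.*?` stops each match at the first `</div>` after the image, so one match never swallows the next card.

```python
import re

# Hand-written sample imitating the structure the pattern targets (not real Bing output)
html = '''
<div class="imgpt"><a href="#">
  <div class="img_cont hoff"><img class="mimg" src="https://example.com/a.jpg"></div>
</a></div>
<div class="imgpt"><a href="#">
  <div class="img_cont hoff"><img class="mimg" src="https://example.com/b.jpg"></div>
</a></div>
'''

pattern = re.compile(
    r'<div class="imgpt".*?<div class="img_cont hoff">.*?src="(.*?)".*?</div>', re.S
)

print(pattern.findall(html))  # ['https://example.com/a.jpg', 'https://example.com/b.jpg']
```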

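Note: Be sure to analyze these websites yourself before looking at how my code is written. You don't have to be able to write it right away, but you should at least understand every line of my code. Better still, write your own code to do the corresponding scraping, and remember to submit your assignment.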